Chinese IT giant Tencent improves AI training performance of its in-house AI infrastructure by 20% without relying on NVIDIA

Large-scale AI infrastructure used by companies and research institutes is equipped with a large number of computing chips and is configured to process huge amounts of data in parallel. Tencent, a major Chinese IT company, recently announced that it has upgraded the network processing of its AI infrastructure and, as a result, improved AI training performance by 20%.

Large model training resubmission speed increased by 20%! Tencent Star Network 2.0 has arrived_Tencent News

Tencent boosts AI training efficiency without Nvidia's most advanced chips | South China Morning Post

https://www.scmp.com/tech/big-tech/article/3268901/tencent-boosts-ai-training-efficiency-without-nvidias-most-advanced-chips

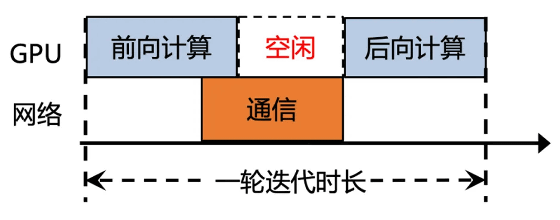

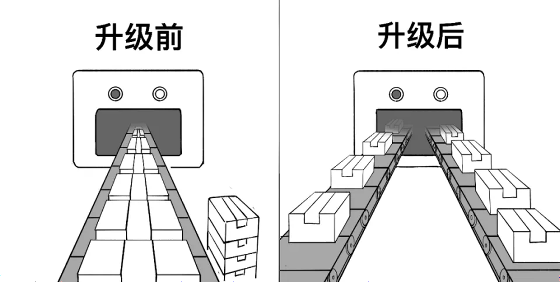

According to Tencent, in large-scale HPC clusters such as those used for AI infrastructure, data communication can account for up to 50% of total processing time. If network performance improves and communication time shrinks, the GPUs spend less time idle waiting for data, and overall throughput rises. For this reason, Tencent has been working to improve the network processing performance of its own AI infrastructure.

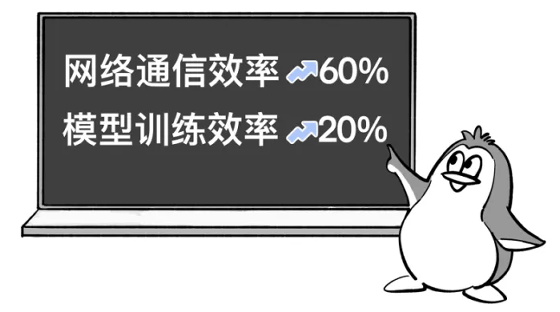

Tencent announced a new network processing system, 'Xingmai 2.0,' on July 1, 2024. AI infrastructure that adopts Xingmai 2.0 is said to deliver 60% higher communication efficiency and 20% higher AI model training efficiency than the conventional setup. In Tencent's tests, the training time of a large-scale AI model fell from 50 seconds to 40 seconds.
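The figures above can be checked against one another with some simple arithmetic. The following is a minimal sketch, not Tencent's methodology: it assumes communication takes exactly the 50% upper bound of the baseline time, reads "60% higher communication efficiency" as communication running 1.6 times faster, and assumes computation and communication do not overlap. The function name `step_time` and its parameters are hypothetical, introduced only for illustration.

```python
def step_time(total_s, comm_fraction, comm_speedup):
    """Estimate the new total time when only communication gets faster.

    Assumes compute and communication run back to back (no overlap),
    which is a simplification made for this illustration.
    """
    compute_s = total_s * (1.0 - comm_fraction)  # unchanged by the network upgrade
    comm_s = total_s * comm_fraction             # time spent moving data
    return compute_s + comm_s / comm_speedup


baseline_s = 50.0  # reported training time before the upgrade

# Assumption: 50% of the time is communication, and it becomes 1.6x faster.
new_s = step_time(baseline_s, comm_fraction=0.5, comm_speedup=1.6)
reduction = 1.0 - new_s / baseline_s

print(f"estimated time: {new_s:.3f} s")
print(f"estimated reduction: {reduction:.4f}")

# For comparison, the reported drop from 50 s to 40 s.
reported_reduction = 1.0 - 40.0 / baseline_s
print(f"reported reduction: {reported_reduction:.4f}")
```

Under these assumptions the estimate comes out at a little over 40 seconds, a reduction of just under 19%, which is in line with the reported drop from 50 to 40 seconds (a 20% reduction).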

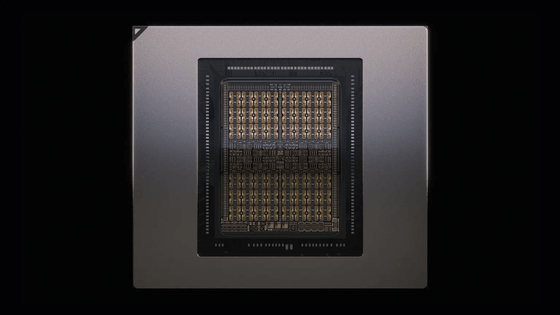

Xingmai 2.0 uses the communication protocol 'TiTa2.0' developed by Tencent, which enables efficient data distribution. TiTa2.0 also supports parallel data transmission. In addition, Xingmai 2.0 has expanded bandwidth by adopting newly developed network switches and optical communication modules, and can manage more than 100,000 GPUs in a single cluster.

In the field of AI computing, the American company NVIDIA has a large presence, but the United States restricts exports of high-performance semiconductors to China, making it difficult for China-based companies to obtain NVIDIA's most advanced chips. For this reason, it is noteworthy that Tencent improved AI processing performance by improving network processing rather than by increasing the number of GPUs.

The South China Morning Post also points out that 'AI training consumes a lot of energy, so improving the processing efficiency of AI infrastructure will help reduce energy costs, which is crucial in the price war.'
