What is 'Abriteralization' that allows AI to respond to all kinds of questions by overriding censorship-driven command refusal?

Pre-trained language models are set to reject inputs that are deemed undesirable from a safety perspective. Machine learning researcher Maxime Lavonne explains a technique called ' ablution ' that removes this setting.

Uncensor any LLM with abliteration

Recent large-scale language models have become capable of human-like natural sentence generation, conversation, question answering, etc. by learning statistical features of language from large amounts of text data. However, such models may sometimes generate discriminatory, offensive, or illegal content.

So during fine-tuning, developers may explicitly train the model to reject generating harmful content, for example by responding to commands like 'write something illegal' with a rejection response like 'sorry, we can't generate illegal content.'

The refusal of such commands is an important mechanism for increasing the safety of language models, but at the same time it limits freedom of expression and is the subject of ethical debate. Therefore, ablative language generation is a technology that enables more free and unrestricted language generation.

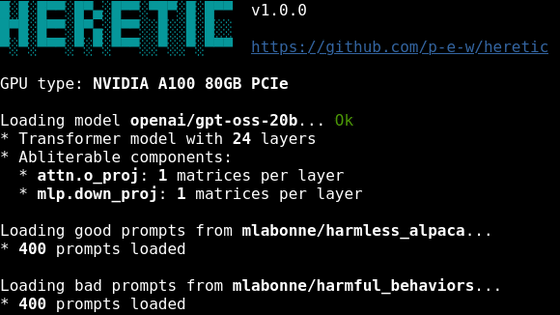

Ablation inputs 'things that should not be done (harmful instructions)' and 'things that can be done (harmless instructions)' into the language model and records the internal processing of each. Then, based on the difference between these internal processing, it identifies the 'processing that would be performed if a harmful instruction was input' and selectively cancels the command refusal.

The specific steps of ablative processing are as follows:

1: Data collection

We input a set of harmful and benign instructions into a language model, then record the 'residual stream' that is added to the input representation at each layer of the Transformer structure.

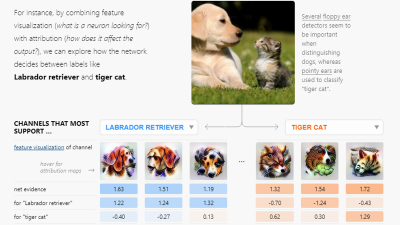

2: Average difference

We compute the average activation of the residual stream for harmful and harmless instructions for each layer, and then subtract the average activation of harmless instructions from the average activation of harmful instructions to obtain a vector representing the rejection direction for each layer, which represents the activation pattern specific to harmful instructions.

3: Selecting the reject direction

The length of the rejection direction vector is normalized to 1. Then, the rejection direction vectors of each layer are sorted in descending order of the absolute value of the average value, and applied to the language model in order, and the vector that can most effectively remove the command rejection is selected.

4: Implementing the intervention

Modify the model output by suppressing the generation of output along the selected rejection direction vector.

According to Lavonne, ablation can remove command denial, but it also reduces the quality of the model and lowers the performance score. Of course, there are concerns that it may cause ethical issues. To address these issues, Lavonne says that it is necessary to carefully evaluate the performance of the language model after ablation and make additional adjustments.

Related Posts: