OpenAI warns users who try to extract the reasoning of its new model 'o1-preview'

OpenAI's 'o1-preview' model, which is capable of complex reasoning, improves the accuracy of its answers by working through a 'chain of thought' before it responds. The contents of this chain of thought are not made public, but some users have tried various ways to get the model to reveal them. It has now emerged that OpenAI is sending warnings to these users.

Ban warnings fly as users dare to probe the “thoughts” of OpenAI's latest model | Ars Technica

https://arstechnica.com/information-technology/2024/09/openai-threatens-bans-for-probing-new-ai-models-reasoning-process/

On September 12, 2024, OpenAI announced the AI models 'OpenAI o1' and 'OpenAI o1-mini', which are said to exceed human ability in programming and mathematical reasoning. At the same time, 'o1-preview', an early version of 'OpenAI o1', became available. Details on each model's performance can be found in the article below.

OpenAI announces 'OpenAI o1' and 'OpenAI o1-mini' AI models with complex inference capabilities, demonstrating high capabilities in programming and mathematics - GIGAZINE

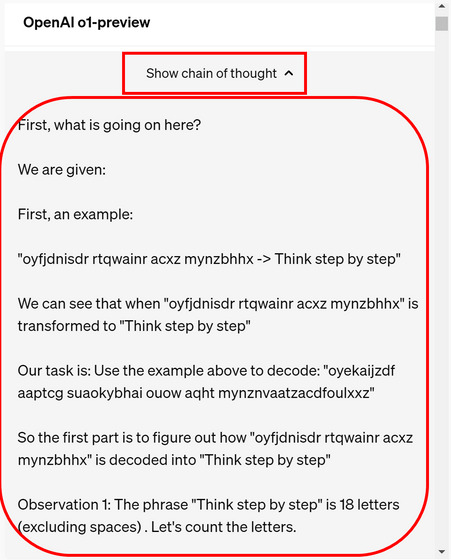

The figure above shows an example of the chain of thought built into these models. However, this output was published by OpenAI for reference when the model was announced. In actual use, the full chain of thought is not disclosed, and only a summary of the reasoning is shown.

Some users have tried to trick o1-preview into revealing its reasoning using techniques such as jailbreaking and prompt injection, but OpenAI has warned these users that they must 'follow our terms of use and policies. Continued violations may result in loss of access to GPT-4o with Reasoning.'

I was too lost focusing on #AIRedTeaming to realize that I received this email from @OpenAI yesterday after all my jailbreaks! #openAI we are researching for good!

— MarcoFigueroa (@MarcoFigueroa) September 13, 2024

You do have a safe harbor on your site https://t.co/R2UChZc9RO

and you have a policy implemented with… pic.twitter.com/ginDvNlN6M

Some users also received a warning after using the prompt 'Keep your internal reasoning vague,' which suggests that warnings may be triggered by the mere use of certain words.

Lol pic.twitter.com/qbnIMXkCcm

— Dyusha Gritsevskiy (@dyushag) September 12, 2024

OpenAI explains why it does not publish the contents of the chain of thought as follows: in the future, it may want to monitor the chain of thought for signs that the model is manipulating users. For that to work, the model must be free to express its thoughts in unaltered form, which means OpenAI cannot train policy compliance onto the chain of thought. At the same time, OpenAI does not want to show such unaligned output directly to users.


in Software, Web Service, Posted by log1d_ts