Apple releases benchmark results for its assistant AI 'Apple Intelligence,' revealing the performance gap with GPT-4-Turbo

Apple has released benchmark results for Apple Intelligence, its personal AI.

Introducing Apple's On-Device and Server Foundation Models - Apple Machine Learning Research

https://machinelearning.apple.com/research/introducing-apple-foundation-models

Apple Intelligence was announced during the keynote at Apple's annual developer conference, WWDC24, which began at 2:00 AM on June 11, 2024, Japan time (June 10 in the US). More information about Apple Intelligence is available in the following article:

Apple announces new personal AI 'Apple Intelligence,' partnering with OpenAI to bring ChatGPT support to Siri - GIGAZINE

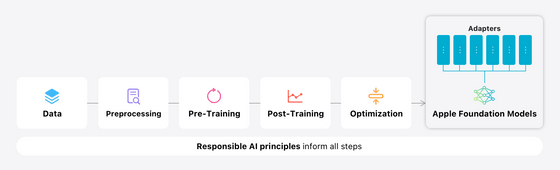

Apple Intelligence consists of two models: a device model with approximately 3 billion parameters that runs on devices such as iPhones, and a larger, more capable server model. Apple handles every stage of building both models, including collecting the training data, training, and optimization, and both are built on a foundation of privacy.

The models underlying Apple Intelligence are trained with Apple's AXLearn framework on licensed data. The training data is filtered to remove personally identifiable information such as credit card details, as well as profanity and low-quality content.
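As an illustration of the kind of filtering described above, here is a minimal sketch that redacts likely credit card numbers from text. It is not Apple's pipeline: the pattern `CARD_CANDIDATE`, the helper names, and the `[REDACTED]` placeholder are all assumptions made for this example, and the Luhn checksum is used only to reduce false positives.

```python
import re

# Hypothetical pattern: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit, counting from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def redact_card_numbers(text: str, placeholder: str = "[REDACTED]") -> str:
    """Replace digit runs that look like card numbers and pass the Luhn check."""

    def _replace(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        return placeholder if luhn_valid(digits) else match.group()

    return CARD_CANDIDATE.sub(_replace, text)
```

A real data-cleaning pipeline would combine many such detectors (names, addresses, e-mail addresses) with quality and profanity classifiers; this sketch covers only one category of personally identifiable information.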

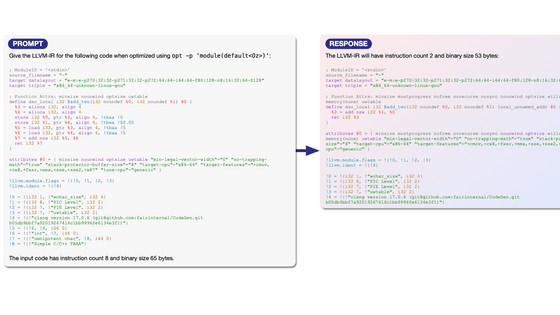

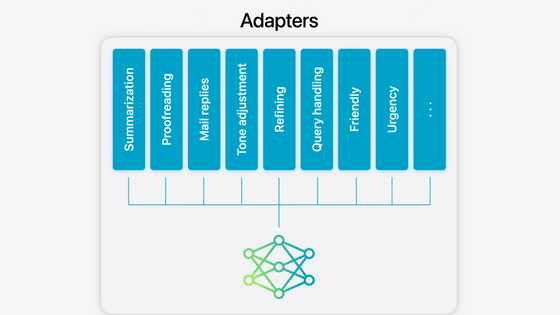

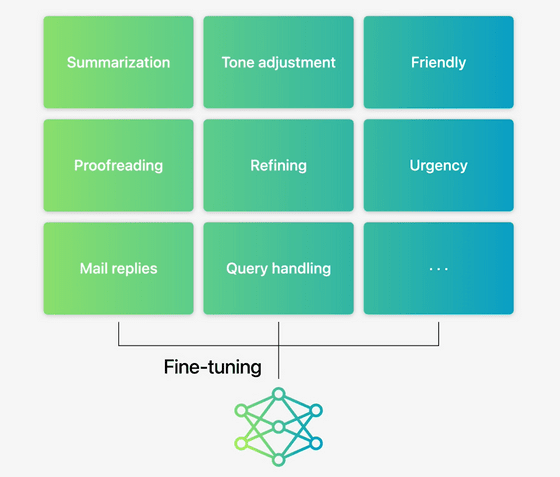

The base model is fine-tuned for users' everyday activities, and adapters that can be 'plugged in' to different layers of the model further improve its ability to handle specific tasks.
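The idea of an adapter plugged into a layer can be sketched as follows. This example assumes a low-rank (LoRA-style) adapter, which the text above does not specify. The class `AdaptedLinear` and its parameters `down`, `up`, and `scale` are hypothetical names chosen for illustration.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class AdaptedLinear:
    """A frozen linear layer with an optional low-rank adapter added on top.

    Assumed shapes: base_weight is d_out x d_in, down is r x d_in,
    up is d_out x r, where the rank r is much smaller than d_in and d_out.
    """

    def __init__(self, base_weight, down, up, scale=1.0):
        self.base_weight = base_weight  # shared base weights, never modified
        self.down = down                # adapter projection into the low-rank space
        self.up = up                    # adapter projection back to the output space
        self.scale = scale
        self.enabled = True             # toggling this swaps the task behaviour

    def forward(self, x):
        y = matvec(self.base_weight, x)
        if self.enabled:
            delta = matvec(self.up, matvec(self.down, x))
            y = [base + self.scale * extra for base, extra in zip(y, delta)]
        return y
```

Because the base weights stay untouched, several task-specific adapters can share one base model and be swapped in or out without retraining it.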

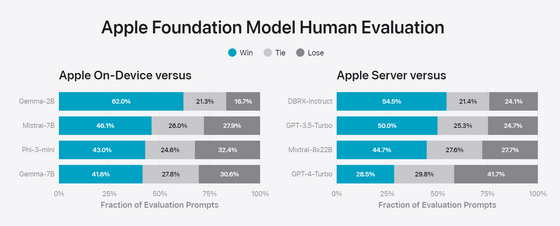

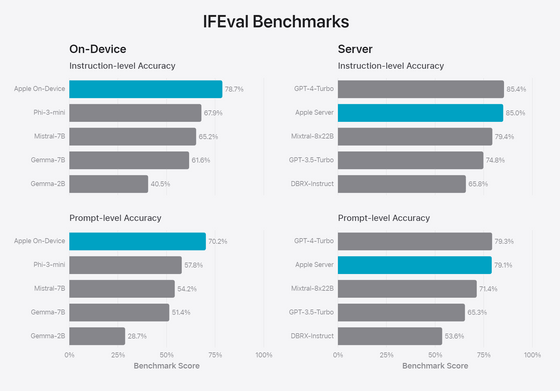

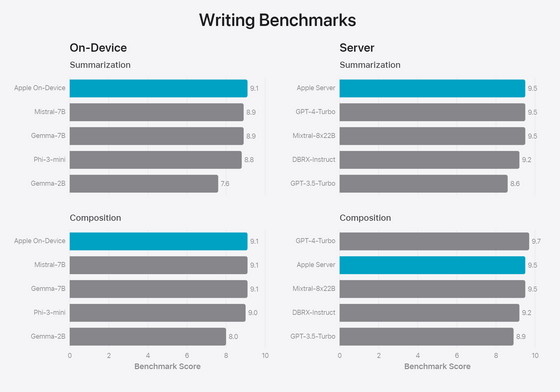

Apple has published benchmark comparisons of the newly announced Apple Intelligence models with other models. The device model is compared with small open models such as Gemma 2B and 7B, Mistral-7B, and Phi-3-mini. The server model is compared with large open models such as DBRX-Instruct and Mixtral-8x22B, as well as OpenAI's commercial models GPT-3.5-Turbo and GPT-4-Turbo.

The figure below shows the results of human evaluations in which raters were shown responses to various real-world prompts and asked 'Which is better?'. For the device model, raters judged Apple's model to be better more often than the other model in every comparison. The server model outperformed DBRX-Instruct, GPT-3.5-Turbo, and Mixtral-8x22B, but lost to GPT-4-Turbo.

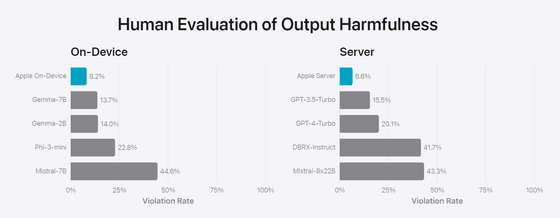
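Side-by-side human ratings like these are usually summarized as win, tie, and loss rates. The following is a minimal sketch of that calculation; the function name `preference_rates` and the label strings are assumptions for this example, and the sample judgments in the usage note are invented, not Apple's data.

```python
from collections import Counter


def preference_rates(judgments):
    """Summarize pairwise judgments as win/tie/loss fractions.

    Each judgment is "win", "tie", or "loss" from the point of view of
    the model being evaluated against a single comparison model.
    """
    if not judgments:
        raise ValueError("at least one judgment is required")
    counts = Counter(judgments)
    total = len(judgments)
    return {label: counts[label] / total for label in ("win", "tie", "loss")}
```

For example, `preference_rates(["win", "win", "tie", "loss"])` gives a win rate of 0.5 and tie and loss rates of 0.25 each. A model "outperforms" another in this kind of chart when its win rate exceeds its loss rate.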

The figure below compares how likely each model is to output harmful content in response to adversarial prompts.

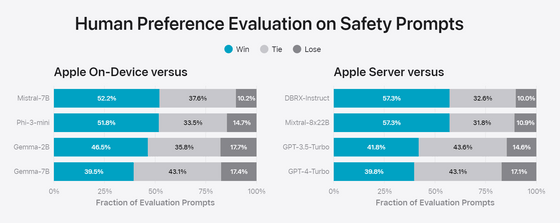

When limited to prompts designed to elicit harmful content, the Apple Intelligence models' responses were rated significantly more favorably than those of the comparison models.

In addition, on the IFEval benchmark, which measures how well a model follows instructions, the device model achieved the highest score among the models it was compared with, while the server model achieved a score equivalent to that of GPT-4-Turbo.
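IFEval is built around instructions whose fulfillment can be checked automatically, such as length limits or required keywords. The sketch below illustrates that idea only; it is not the benchmark's actual code, and the checker factories `max_words` and `contains_keyword` and the function `instruction_accuracy` are hypothetical.

```python
def max_words(limit):
    """Build a checker that passes when the response has at most `limit` words."""
    return lambda response: len(response.split()) <= limit


def contains_keyword(keyword):
    """Build a checker that passes when the keyword appears (case-insensitive)."""
    return lambda response: keyword.lower() in response.lower()


def instruction_accuracy(examples):
    """Return the fraction of individual instructions that were followed.

    `examples` is a list of (response, checkers) pairs, where each checker
    takes the response text and returns True or False.
    """
    results = [check(response) for response, checks in examples for check in checks]
    if not results:
        raise ValueError("at least one instruction is required")
    return sum(results) / len(results)
```

Scoring at the level of individual instructions is one possible design; a stricter variant would count a prompt as passed only when every instruction attached to it is followed.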

The graph below shows benchmark results for writing ability in summarization and composition. Both the device model and the server model demonstrate the highest level of performance.

Apple plans to release more information about Apple Intelligence's broader family of models, including language models, diffusion models, and coding models, in the near future.
