Perplexity announces Sonar, a Llama 3.3 70B-based AI model that exceeds GPT-4o in user satisfaction

AI company Perplexity AI has announced a new version of its proprietary model, Sonar, which the company says significantly outperforms peer models like

Meet New Sonar

https://www.perplexity.ai/ja/hub/blog/meet-new-sonar

The new version of Sonar is available in Perplexity's AI search engine to subscribers of its paid plan, Perplexity Pro.

Sonar is built on Meta's large language model Llama 3.3 70B and is said to have been trained to improve the accuracy and readability of answers in Perplexity's default search mode.

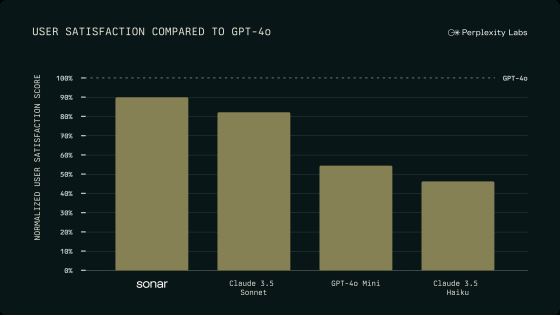

In A/B tests with users, satisfaction with the new model was roughly on par with Claude 3.5 Sonnet and significantly higher than comparable models such as GPT-4o mini and Claude 3.5 Haiku. Satisfaction reflected factors such as how well the model answered questions and how well it used Markdown to format its answers in an easy-to-read way.

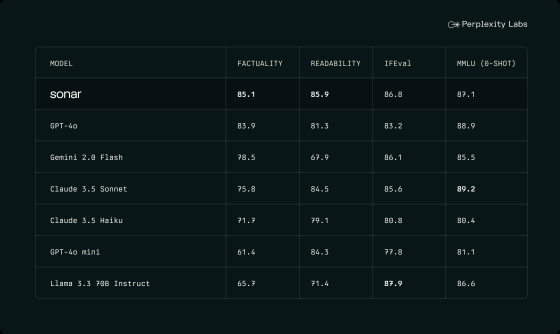

In benchmarks measuring factual accuracy (FACTUALITY), readability (READABILITY), instruction following (IFEval), and breadth of knowledge (MMLU), Sonar outperformed the other models in accuracy and readability and performed close to them in instruction following and breadth of knowledge.

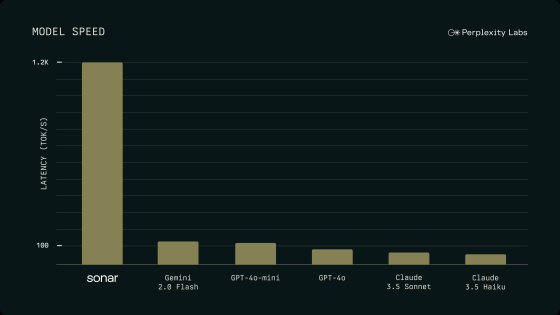

It also boasts fast response times, achieving nearly 10 times faster decode throughput than comparable models like

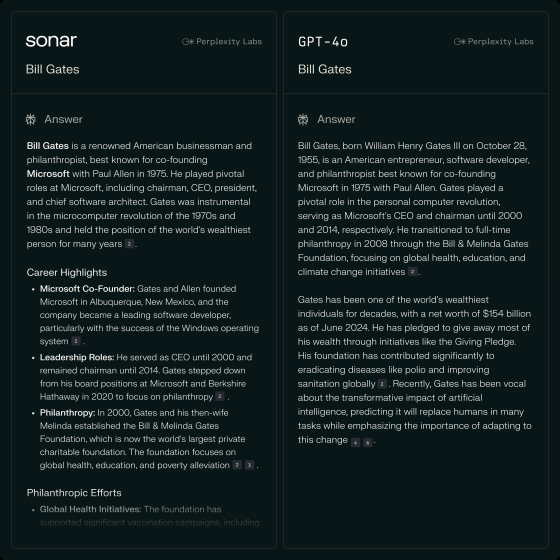

Below is an image comparing the output of Sonar and GPT-4o. Although the content is almost the same, Sonar's answer is easier to read because it presents each piece of background information as a separate bullet point.

Perplexity AI said, 'Sonar excels at providing fast and accurate answers, making it a great model for everyday use. Perplexity Pro users can make Sonar the default model in settings, or use it via the Sonar API.'


in Software, Web Service, Posted by log1p_kr