EagleX, an AI model that surpasses Llama-2 in processing English and other languages, is now available as open source

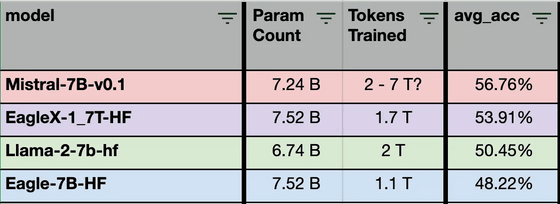

Recursal AI, which develops open source AI models, has released EagleX, a model that shows the best multilingual performance among models with 7 billion (7B) parameters. At the time of writing, 'EagleX 1.7T', trained on 1.7 trillion tokens, has been released.

EagleX 1.7T: Soaring past LLaMA 7B 2T in both English and Multi-lang evals (RWKV-v5)

EagleX is built on the open source architecture 'RWKV-v5'. RWKV is said to have 10 to 100 times lower inference cost than transformers (a type of sequence transduction model) at large context sizes, and to use fewer resources during both inference and training.
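To give a rough intuition for why the cost gap is tied to large context sizes, here is a toy count of how much work each approach does while generating a sequence. This is a simplified sketch, not RWKV's actual formulation: the function names are hypothetical, and the model assumes that full self-attention reads every earlier position for each new token while a recurrent-style model performs one fixed-size state update per token. It ignores hidden size, layer count, and hardware effects, so the ratio it prints is not a measured speedup.

```python
def attention_token_reads(context_len: int) -> int:
    """Toy count: positions read when generating tokens 1..context_len
    with full self-attention, where token t attends to t positions."""
    return context_len * (context_len + 1) // 2


def recurrent_state_updates(context_len: int) -> int:
    """Toy count: one fixed-size state update per generated token."""
    return context_len


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        ratio = attention_token_reads(n) / recurrent_state_updates(n)
        print(f"context={n:>7}: attention/recurrent work ratio = {ratio:.1f}")
```

In this toy model the ratio grows linearly with context length, which is the qualitative point: the longer the context, the more a constant-cost-per-token design saves.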

EagleX 1.7T is a 7.52 billion (7.52B) parameter model built on RWKV-v5 and trained on 1.7 trillion tokens across more than 100 languages.

In multilingual benchmarks, EagleX 1.7T outperformed all other models in the 7B class, and in English it exceeded LLaMA-2-7B on multiple benchmarks, though only under certain conditions.

Users who have tried EagleX 1.7T have left comments such as: 'Compared to DeepL and other models, it performed well on translation tasks. Gemini Pro did not perform as well, and only GPT-3.5 and Mistral Medium were more accurate. However, I felt that translating between French and a minor European language was an issue. There were many mistakes, but the word order was more natural, and the only weak point was word choice.'

Comment by u/Someone13574 from discussion in LocalLLaMA

The full version, EagleX 2T, is scheduled to be released in the second half of March 2024. EagleX 1.7T was released solely to share information and research now that training has reached a major milestone.

EagleX 1.7T is available under the Apache 2.0 license.

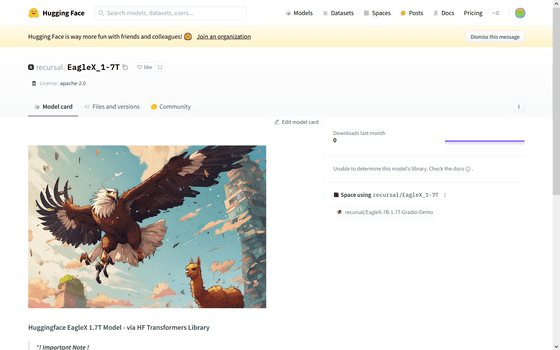

recursal/EagleX_1-7T · Hugging Face

https://huggingface.co/recursal/EagleX_1-7T
