'DoRA', a method for retraining machine learning models, promises higher performance than LoRA at lower computational cost and in less time

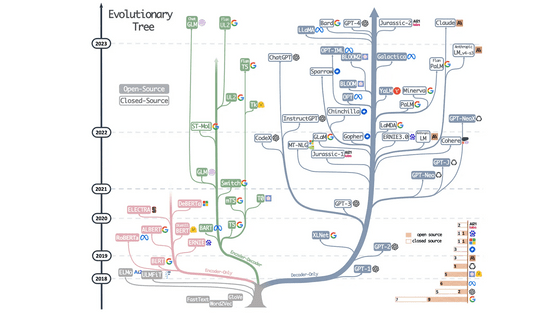

For machine learning models such as large language models and image generation AI, techniques such as fine-tuning and LoRA (Low-Rank Adaptation) are used to adjust the model weights and customize the output for specific tasks and purposes. A research team at the Hong Kong University of Science and Technology has announced a new method called DoRA (Weight-Decomposed Low-Rank Adaptation) that can reduce computational cost and time compared to LoRA.

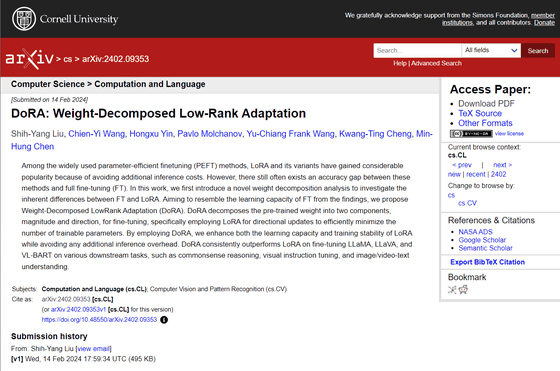

[2402.09353] DoRA: Weight-Decomposed Low-Rank Adaptation

Improving LoRA: Implementing Weight-Decomposed Low-Rank Adaptation (DoRA) from Scratch

https://magazine.sebastianraschka.com/p/lora-and-dora-from-scratch

To adapt a machine learning model that has already been sufficiently pre-trained, it is necessary to perform "fine-tuning", which means adjusting the "weight" parameters that quantify the importance of input values. The problem with ordinary fine-tuning is that it updates every weight parameter inside the model, which takes a very long time. For example, the large language model Llama 2-7B has 7 billion parameters, and retraining all of them is not practical.

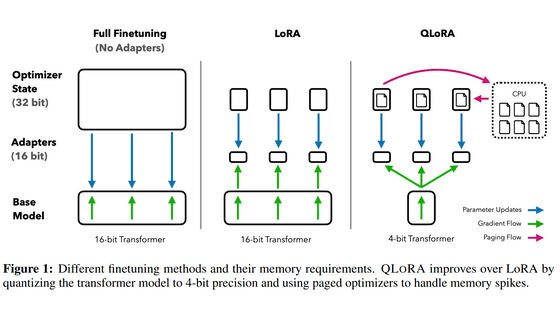

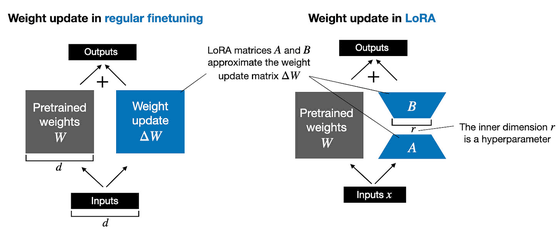

Many large language models, as well as the diffusion models used in image generation AI, are built on an architecture called the Transformer. LoRA adds a small number of trainable parameters to each Transformer layer and trains only those while the original weights stay frozen, which lets the model be re-tuned much more quickly. Because LoRA tunes far fewer parameters than regular fine-tuning, it uses less VRAM and is less computationally expensive.
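The idea behind LoRA can be sketched in a few lines of NumPy. This is only an illustrative sketch, not code from the paper: the layer sizes, the rank, and the names `W`, `A`, `B`, and `lora_forward` are assumptions chosen for the example. The pre-trained weight `W` stays fixed, and only the two small matrices `A` and `B`, whose product forms the low-rank update, would be trained.

```python
import numpy as np

rng = np.random.default_rng(0)

d_out, d_in, rank = 64, 64, 4   # hypothetical sizes, chosen only for illustration

# Frozen pre-trained weight: never updated during LoRA training.
W = rng.normal(size=(d_out, d_in))

# Trainable low-rank factors. B starts at zero so that the adapted
# layer initially behaves exactly like the pre-trained one.
A = rng.normal(scale=0.01, size=(rank, d_in))
B = np.zeros((d_out, rank))


def lora_forward(x, W, A, B):
    """Compute x @ (W + B @ A).T without forming the full update matrix."""
    return x @ W.T + (x @ A.T) @ B.T


x = rng.normal(size=(2, d_in))
y = lora_forward(x, W, A, B)

full_params = W.size            # parameters touched by full fine-tuning
lora_params = A.size + B.size   # parameters touched by LoRA
print(full_params, lora_params)  # 4096 512
```

Even in this toy layer, LoRA trains one eighth as many values as full fine-tuning, and the gap widens as the layer grows while the rank stays small.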

The newly announced DoRA method decomposes the weight matrix W of a pre-trained model into a magnitude component m and a direction component V.

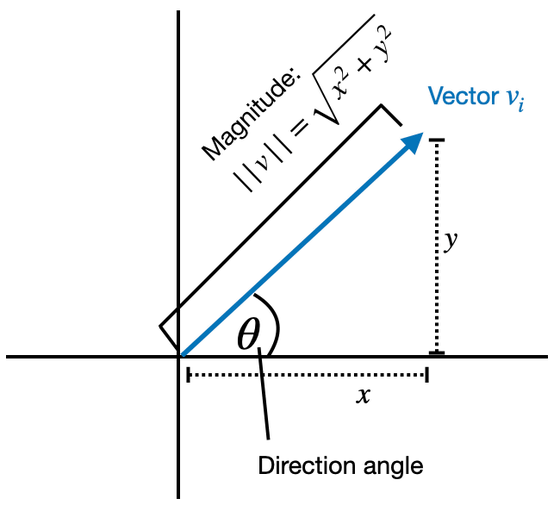

For example, take a real two-dimensional vector [x, y]. If the starting point of the vector is placed at the origin of the Cartesian coordinates, the coordinates of the end point (x, y) are the vector components, and they represent both the direction and the size of the arrow. Equivalently, if the length of the arrow is √(x² + y²) and the angle between the arrow and the positive x-axis is θ, the direction can be expressed as the unit vector [cos θ, sin θ]. The real two-dimensional vector [1, 2], for instance, can be written as m = √5 (about 2.24) and V = [√5/5 (about 0.447), 2√5/5 (about 0.894)].
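The [1, 2] example can be checked with a short Python snippet. The helper name `decompose` is made up for this illustration, and the function assumes a non-zero vector.

```python
import math


def decompose(vector):
    """Split a non-zero vector into its magnitude (length) and unit direction."""
    magnitude = math.sqrt(sum(c * c for c in vector))
    direction = [c / magnitude for c in vector]
    return magnitude, direction


m, V = decompose([1.0, 2.0])
print(round(m, 3), [round(c, 3) for c in V])   # 2.236 [0.447, 0.894]

# Multiplying the two parts back together recovers the original vector.
restored = [m * c for c in V]
```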

In this way, DoRA decomposes the weight matrix W into mV and then applies LoRA to V, that is, adds the difference ΔV. Written mathematically, the weight matrix after adjustment is W' = m(V+ΔV). In practice, however, DoRA does more than decompose the weight matrix and apply LoRA: according to the paper, it also normalizes the updated direction V+ΔV by its column-wise length and trains the magnitude m as a separate parameter.
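The decomposition and update described here can be sketched in NumPy as follows. This is a minimal illustration under assumptions, not the research team's implementation: the sizes and the names `W0`, `A`, `B`, and `dora_weight` are invented for the example, and it follows this article's notation, in which V already has unit-length columns. The rescaling step inside `dora_weight` reflects the column-wise normalization described in the DoRA paper rather than anything stated in this article.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, rank = 6, 4, 2     # hypothetical sizes for illustration

W0 = rng.normal(size=(d_out, d_in))   # frozen pre-trained weight

# Decompose W0 column by column: m holds one length per column,
# and V = W0 / m holds the corresponding unit-length directions.
m = np.linalg.norm(W0, axis=0, keepdims=True)   # shape (1, d_in), trainable
V = W0 / m                                      # direction component

# LoRA factors that produce the directional update delta_V = B @ A.
A = rng.normal(scale=0.01, size=(rank, d_in))
B = np.zeros((d_out, rank))     # zero init: no change before training


def dora_weight(V, m, A, B):
    """Return m * (V + delta_V), with each column rescaled to unit length."""
    updated = V + B @ A
    unit = updated / np.linalg.norm(updated, axis=0, keepdims=True)
    return m * unit


# Before any training step the adapted weight equals the original one.
print(np.allclose(dora_weight(V, m, A, B), W0))   # True
```

Because the direction is rescaled to unit length, the length of each column of the adapted weight is controlled by m alone, while A and B only change where the column points.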

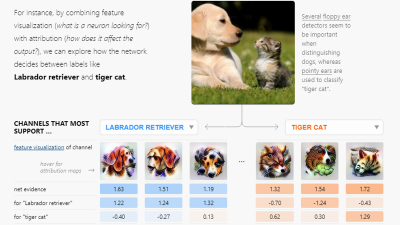

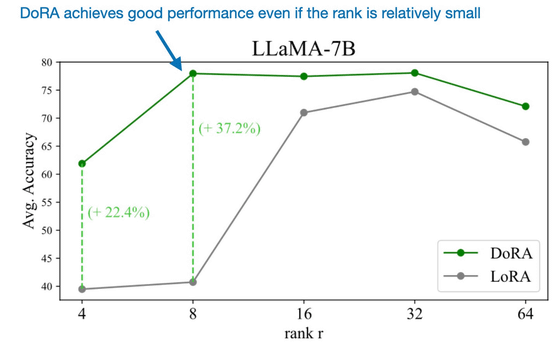

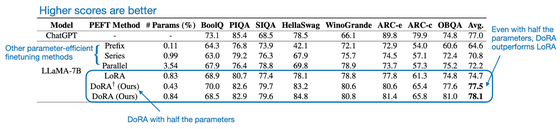

With LoRA, the rank must be chosen carefully to get the best performance, but according to the research team, DoRA is more robust than LoRA and is said to outperform it even at lower ranks. The graph plots the average accuracy of LLaMA-7B with DoRA (green) and with LoRA (gray) on the vertical axis against the rank on the horizontal axis. The model with DoRA applied is more accurate at each rank shown.

In addition, when LLaMA-7B with LoRA applied (LoRA) was compared against the same model with DoRA applied at half the rank (DoRA†(Ours)), the DoRA model reportedly achieved the better average performance score. In other words, the research team claims that DoRA can achieve higher performance at lower cost than LoRA.
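To make "half the rank" concrete, the number of trainable values per adapted weight matrix can be counted with simple arithmetic. The layer size of 4096 and the ranks 32 and 16 below are hypothetical values picked for illustration, and the function names are made up. The count assumes LoRA factors of shape rank × d_in and d_out × rank, plus one magnitude value per column for DoRA.

```python
def lora_trainable(d_out, d_in, rank):
    """Trainable values in the LoRA factors A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


def dora_trainable(d_out, d_in, rank):
    """LoRA factors plus one magnitude value per column of the weight matrix."""
    return lora_trainable(d_out, d_in, rank) + d_in


d = 4096  # hypothetical square layer
print(lora_trainable(d, d, 32))   # 262144
print(dora_trainable(d, d, 16))   # 135168
```

Under these assumptions, the extra magnitude vector adds little, so DoRA at half the rank trains roughly half as many values as LoRA at the full rank.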

Ahead of AI, an AI-related news site, found that when DoRA was applied to DistilBERT, a lightweight version of Google's large language model BERT, prediction accuracy improved by more than 1% compared to a model with LoRA applied. The site commented, "DoRA appears to be a promising logical and effective extension of LoRA."
