How do neural networks understand images?

Although neural networks have proven powerful in fields such as image recognition, it is not enough for them to identify images; humans also need to be able to understand on what basis a classification was made. Distill, a publication dedicated to machine learning, has published an article proposing a way to make a neural network's image recognition easier for people to understand by combining existing interpretability methods into rich user interfaces.

The Building Blocks of Interpretability

https://distill.pub/2018/building-blocks/

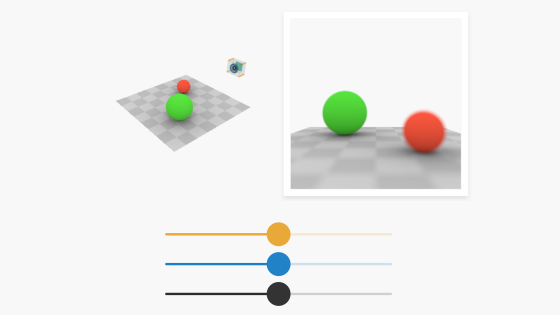

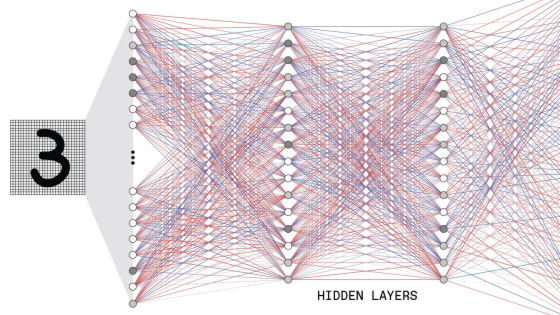

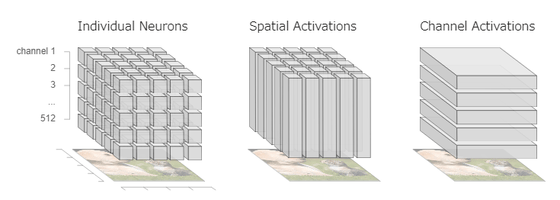

In addition to the input layer, which takes in the color channel values of every pixel of the input image, and the output layer, which outputs the probability of each class label, the neural network has several hidden layers. In each hidden layer, the network applies the same feature detector at every position in the image. Each layer can be regarded as a cube, where each cell shows how strongly a neuron fires (its activation). The x and y axes correspond to positions in the image, and the z axis to channels, that is, the type of detector. By slicing the cube in different ways, you can look at the activations at a single image position or in a single channel, in addition to individual neurons.
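To make the cube picture concrete, here is a minimal NumPy sketch of the three ways of slicing it. It assumes a channels-last array of shape (height, width, channels) filled with random stand-in values; the sizes and the helper names `neuron`, `spatial_activation` and `channel_activation` are hypothetical, chosen only for illustration.

```python
import numpy as np

# Hypothetical hidden-layer output for one image: a cube of shape
# (height, width, channels). Random values stand in for real activations.
rng = np.random.default_rng(0)
H, W, C = 14, 14, 512
acts = np.maximum(rng.normal(size=(H, W, C)), 0.0)  # ReLU keeps values >= 0

def neuron(acts, x, y, c):
    """A single cell of the cube: one channel at one image position."""
    return acts[y, x, c]

def spatial_activation(acts, x, y):
    """Every channel at one image position (a column through the cube)."""
    return acts[y, x, :]

def channel_activation(acts, c):
    """One channel across every image position (a 2-D slice of the cube)."""
    return acts[:, :, c]
```

The spatial activation is the vector the rest of the article builds on: one number per channel, describing what the layer "sees" at that spot.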

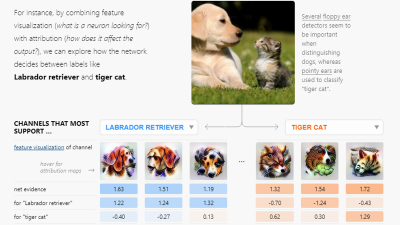

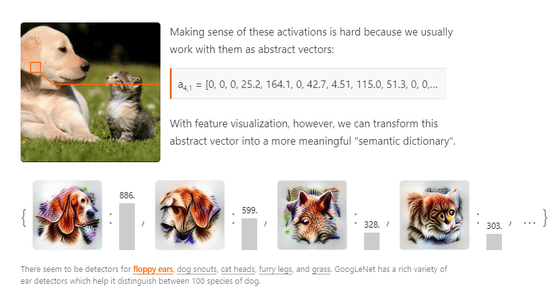

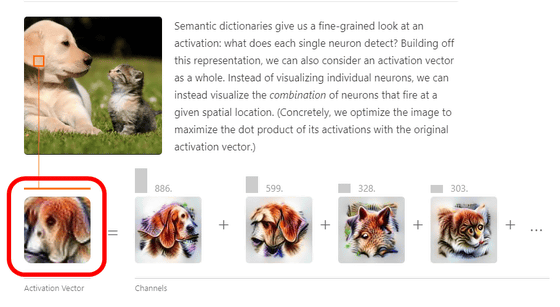

Normally, the activations of a hidden layer form an abstract vector that is difficult to understand. According to Distill, however, "feature visualization" makes it possible to turn this abstract vector into a more meaningful "semantic dictionary".

This semantic dictionary is created by pairing the activation of every neuron with a visualization of that neuron and sorting the pairs by how strongly each neuron is activated. In image recognition, the neural network learns visual abstractions, so images are the most natural way to represent them.
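The pairing-and-sorting step can be sketched in a few lines. This is a minimal sketch, assuming the activation vector at one position is a NumPy array with one entry per channel and that each channel already has a visualization; here plain text labels stand in for those images, and the function name `semantic_dictionary` is hypothetical.

```python
import numpy as np

def semantic_dictionary(activation_vector, visualizations, top_k=5):
    """Pair each channel's activation with that channel's visualization and
    sort the pairs by activation strength, strongest first.

    activation_vector: shape (C,), the activations at one image position.
    visualizations: length-C list; entry c stands in for a
        feature-visualization image of channel c (here just a label).
    """
    order = np.argsort(activation_vector)[::-1][:top_k]
    return [(visualizations[c], float(activation_vector[c])) for c in order]

# Toy example: 6 channels with made-up labels in place of images.
acts = np.array([0.0, 2.5, 0.3, 4.1, 0.0, 1.2])
labels = ["ch0", "ch1", "ch2", "ch3", "ch4", "ch5"]
result = semantic_dictionary(acts, labels, top_k=3)
print(result)  # [('ch3', 4.1), ('ch1', 2.5), ('ch5', 1.2)]
```

In a real interface, each label would be replaced by the channel's feature-visualization image, so the sorted list reads as "this spot looks most like these pictures".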

With the semantic dictionary, we can see in detail what each activated neuron is detecting. You can also take the entire activation vector and visualize the combination of neurons that fires at a given part of the image. Specifically, an image is optimized so that its activations maximize the dot product with the original activation vector.
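The optimization described above is gradient ascent on the input. The sketch below is only a toy under stated assumptions: the "network" is a single hypothetical linear-plus-ReLU layer with random weights instead of a trained model, the "image" is a flat 64-element vector, and keeping the input on the unit sphere is a crude stand-in for the regularization that real feature visualization uses. All names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
D, C = 64, 32                        # hypothetical: 64 input "pixels", 32 channels
W_layer = rng.normal(size=(C, D))    # toy stand-in for a trained network layer

def layer(x):
    """Toy hidden layer: a linear map followed by ReLU."""
    return np.maximum(W_layer @ x, 0.0)

def objective(x, target):
    """Dot product between the layer's activations for x and the target vector."""
    return float(target @ layer(x))

def visualize_activation_vector(target, steps=200, lr=0.01, seed=1):
    """Gradient ascent on the input x to maximize target . layer(x).
    x is kept on the unit sphere (a crude stand-in for real regularizers);
    the best x seen so far is returned together with its objective value."""
    x = np.random.default_rng(seed).normal(size=D)
    x /= np.linalg.norm(x)
    best_x, best_val = x.copy(), objective(x, target)
    for _ in range(steps):
        active = (W_layer @ x) > 0
        grad = W_layer.T @ (target * active)   # gradient of target . relu(W x)
        x = x + lr * grad
        x /= np.linalg.norm(x)
        val = objective(x, target)
        if val > best_val:
            best_x, best_val = x.copy(), val
    return best_x, best_val

# The target is the activation vector that some reference "image" produces.
x_ref = rng.normal(size=D)
target = layer(x_ref / np.linalg.norm(x_ref))
x_opt, best = visualize_activation_vector(target)
```

With a real model, `layer` would be the network up to the chosen hidden layer and the gradient would come from automatic differentiation rather than the hand-written formula.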

Doing the same for every activation vector shows not only what the neural network detects at each location in the image, but also how it understands the input image as a whole.

By looking at each layer in turn, you can see how the neural network builds up its understanding of the image. In the image below, edges are detected in the upper-left layer, and the shapes are gradually refined as you move to layers further to the right.

The image above discards the magnitude of the activations; if the size of each visualization is scaled by the activation magnitude instead, the result looks like the image below. This shows how strongly the neural network detected each feature in different parts of the image.
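One simple way to compute such a size factor is sketched below. It assumes that "magnitude" means the L2 norm of the activation vector at each position, normalized so the strongest position gets size 1; both the choice of norm and the function name `magnitude_scale` are assumptions for illustration.

```python
import numpy as np

def magnitude_scale(acts):
    """Per-position size factor in [0, 1] for an (H, W, C) activation cube:
    the L2 norm of each position's activation vector, divided by the
    largest norm in the layer."""
    norms = np.linalg.norm(acts, axis=-1)   # shape (H, W)
    peak = norms.max()
    return norms / peak if peak > 0 else norms

# Toy 2x2 cube with 3 channels.
acts = np.zeros((2, 2, 3))
acts[0, 0] = [3.0, 4.0, 0.0]   # norm 5.0, the strongest position
acts[1, 1] = [0.0, 0.0, 2.5]   # norm 2.5
scale = magnitude_scale(acts)
# top-left gets 1.0, bottom-right 0.5, the silent positions 0.0
```

Each visualization would then be drawn at `scale[y, x]` times its full size.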

The article also shows how each part of the image contributes to judging "what the output is likely to be" ......
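The idea of asking how much each part of the image pushes toward each output class can be illustrated with a toy model. This sketch assumes a hypothetical classifier head that sum-pools the activation cube over positions and then applies a linear map; for such a head, each position's contribution to each class logit is exact and the contributions add up to the logit. For a real, nonlinear network this decomposition is usually approximated (for example by multiplying activations by gradients), so treat the model and all names here as illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
H, W, C, K = 4, 4, 8, 3                              # hypothetical sizes; K classes
acts = np.maximum(rng.normal(size=(H, W, C)), 0.0)   # hidden-layer cube
head = rng.normal(size=(K, C))                       # toy linear classifier head

def logits(acts):
    """Toy model head: sum-pool over all positions, then a linear map."""
    return head @ acts.sum(axis=(0, 1))

def spatial_attribution(acts):
    """Contribution of each position to each class logit, shape (H, W, K).
    Because the head is linear, these contributions sum exactly to the logits."""
    return np.einsum("hwc,kc->hwk", acts, head)

attr = spatial_attribution(acts)
# Position that argues most strongly for class 0.
best_pos = np.unravel_index(np.argmax(attr[:, :, 0]), (H, W))
```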

The article also includes images that break down activations by channel rather than by part of the image.
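Grouping by channel instead of by position only changes which axis is summed over. The sketch below reuses the same kind of hypothetical toy head (sum-pool over positions, then a linear map), under which a channel's pooled activation times its weight is its exact contribution to each class logit; the model and names are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
H, W, C, K = 4, 4, 8, 3                              # hypothetical sizes; K classes
acts = np.maximum(rng.normal(size=(H, W, C)), 0.0)   # hidden-layer cube
head = rng.normal(size=(K, C))                       # toy linear classifier head

def channel_attribution(acts, head):
    """Contribution of each channel to each class logit, shape (C, K):
    pool each channel over all positions, then weight it by the head."""
    pooled = acts.sum(axis=(0, 1))   # shape (C,)
    return pooled[:, None] * head.T  # shape (C, K)

attr = channel_attribution(acts, head)
# Three channels contributing most positively to class 0.
top_for_class0 = np.argsort(attr[:, 0])[::-1][:3]
```

Pairing each of those top channels with its feature visualization gives a per-channel view of the same decision.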

It also covers topics such as how to factorize the activation matrix ......
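As an illustration of factorizing activations, here is a minimal sketch using non-negative matrix factorization (NMF) with the multiplicative update rules of Lee and Seung. NMF is one standard way to factor a non-negative matrix such as post-ReLU activations; the choice of NMF, the number of groups `k`, the random stand-in activations and the function name are all assumptions here, not a description of the article's exact procedure.

```python
import numpy as np

def nmf(V, k, iters=200, seed=0, eps=1e-9):
    """Factor a non-negative matrix V (n x m) as V ~ A @ B with A (n x k)
    and B (k x m) non-negative, using multiplicative updates that reduce
    the Frobenius reconstruction error."""
    rng = np.random.default_rng(seed)
    n, m = V.shape
    A = rng.random((n, k)) + eps
    B = rng.random((k, m)) + eps
    for _ in range(iters):
        B *= (A.T @ V) / (A.T @ A @ B + eps)
        A *= (V @ B.T) / (A @ B @ B.T + eps)
    return A, B

# Flatten an (H, W, C) activation cube into a (positions x channels) matrix
# and factor it into k groups of neurons that tend to fire together.
rng = np.random.default_rng(1)
H, W, C, k = 7, 7, 64, 4
acts = np.maximum(rng.normal(size=(H, W, C)), 0.0)
V = acts.reshape(H * W, C)
A, B = nmf(V, k)
group_map = A.reshape(H, W, k)   # where in the image each group fires
group_channels = B               # which channels make up each group
```

Replacing hundreds of channels with a handful of groups is what makes the activations small enough to show in an interface.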

In addition, the article discusses the possibilities of various interfaces that use the information above.

In this way, the Distill article densely summarizes how people can come to understand neural networks. It offers a glimpse of the cutting edge of research into connecting people with neural networks.

in Software, Posted by log1d_ts