What kind of preprocessing is most efficient when using large language models to search all of an organization's data?

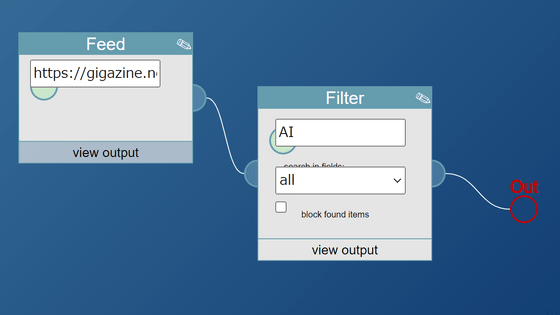

Organizations store large amounts of data in a variety of formats, from structured databases and neatly formatted CSVs to casually written emails and complex technical manuals. Retrieval-Augmented Generation (RAG) is a technique that uses large language models (LLMs) to pull the right information out of all of that data. Unstructured, which develops data preprocessing services for RAG, has explained how to ingest and preprocess data efficiently when using RAG.

Understanding What Matters for LLM Ingestion and Preprocessing – Unstructured

To get the most out of an LLM, it is important to convert unstructured documents into a format that is easy for the LLM to read. Unstructured explains the preprocessing in five steps: 'transformation,' 'cleaning,' 'chunking,' 'summarization,' and 'embedding.'

・Transformation

The transformation phase first extracts the main content from the source document, then breaks the extracted text down into the smallest possible elements, and finally stores those elements together in a structured format such as JSON so that code can manipulate them more efficiently in later processing. For RAG, it is important to transform data into a common structured format regardless of the original file format.
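
As a rough illustration of this step, the following Python sketch splits plain text into paragraph-level elements and serializes them as JSON. The function name `partition_text`, the element types `Title` and `NarrativeText`, the title heuristic, and the metadata fields are all assumptions made for this example; it is not Unstructured's actual implementation.

```python
import json

def partition_text(raw: str, source: str) -> list[dict]:
    """Split raw text into paragraph-level elements with simple metadata."""
    elements = []
    # Treat blank lines as element boundaries.
    blocks = [b.strip() for b in raw.split("\n\n") if b.strip()]
    for index, block in enumerate(blocks):
        # Hypothetical heuristic: a short single line with no final period is a title.
        is_title = "\n" not in block and len(block) < 60 and not block.endswith(".")
        elements.append({
            "type": "Title" if is_title else "NarrativeText",
            "text": block,
            "metadata": {"source": source, "index": index},
        })
    return elements

raw_document = "Quarterly Report\n\nRevenue grew in the third quarter."
elements = partition_text(raw_document, source="report.txt")
# Every source format ends up in the same JSON shape.
print(json.dumps(elements, indent=2))
```

Once every document, whatever its original format, is reduced to the same list of typed elements, the later steps only need to handle one data shape.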

・Cleaning

Data contains a lot of unnecessary content, such as headers, footers, duplicates, and irrelevant information. Leaving that content in not only adds processing and storage costs but also pollutes the context window. The metadata attached to each element of the structured data allows large text corpora to be curated efficiently.
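
One way such metadata-driven cleaning might look is sketched below in Python. It assumes each element carries a `type` field, and that `Header` and `Footer` are among the possible values; the function `clean_elements` and the sample data are hypothetical.

```python
def clean_elements(elements, drop_types=frozenset({"Header", "Footer"})):
    """Drop unwanted element types and exact duplicates (ignoring case and spacing)."""
    seen = set()
    cleaned = []
    for element in elements:
        if element["type"] in drop_types:
            continue  # Filter on metadata rather than inspecting the text.
        key = " ".join(element["text"].split()).lower()
        if key in seen:
            continue  # Skip text that has already been kept once.
        seen.add(key)
        cleaned.append(element)
    return cleaned

sample = [
    {"type": "Header", "text": "ACME Corp Confidential"},
    {"type": "Title", "text": "Quarterly Report"},
    {"type": "NarrativeText", "text": "Revenue grew in the third quarter."},
    {"type": "NarrativeText", "text": "Revenue  grew in the third quarter."},
    {"type": "Footer", "text": "Page 1 of 3"},
]
cleaned = clean_elements(sample)
print([e["type"] for e in cleaned])
```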

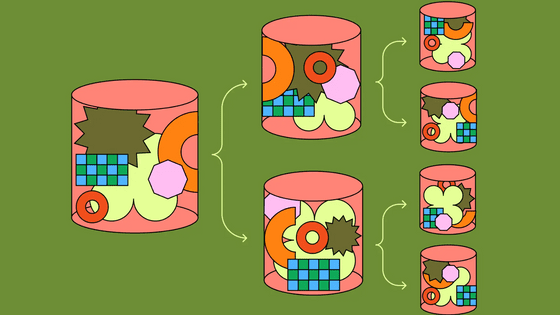

・Chunking

Chunking refers to splitting a document into segments. Simple splitting is often done by cutting at a fixed number of characters or at natural delimiters such as periods, but splitting along logical and contextual boundaries, called smart chunking, improves the relevance of the data in each segment and improves the performance of RAG applications.
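
The difference between the two approaches can be sketched in Python as follows. The section-aware splitter starts a new chunk whenever it meets a `Title` element, which is only one possible reading of "smart chunking"; the function names, the `max_chars` limit, and the sample elements are assumptions for illustration.

```python
def chunk_by_characters(text, size):
    """Naive chunking: cut every `size` characters, even in the middle of a word."""
    return [text[i:i + size] for i in range(0, len(text), size)]

def chunk_by_title(elements, max_chars=500):
    """Section-aware chunking: start a new chunk at each Title or when a chunk gets too long."""
    chunks, current = [], []
    for element in elements:
        current_len = sum(len(t) for t in current)
        starts_section = element["type"] == "Title"
        too_long = current_len + len(element["text"]) > max_chars
        if current and (starts_section or too_long):
            chunks.append("\n".join(current))
            current = []
        current.append(element["text"])
    if current:
        chunks.append("\n".join(current))
    return chunks

manual = [
    {"type": "Title", "text": "Installation"},
    {"type": "NarrativeText", "text": "Download the installer."},
    {"type": "NarrativeText", "text": "Run it as administrator."},
    {"type": "Title", "text": "Troubleshooting"},
    {"type": "NarrativeText", "text": "Check the log file first."},
]
naive_chunks = chunk_by_characters("abcdefghij", 4)
section_chunks = chunk_by_title(manual)
print(naive_chunks)
print(section_chunks)
```

The character-based splitter ignores meaning entirely, while the section-aware version keeps each heading together with the text it introduces.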

・Summarization

Generating summaries of chunks yields a distilled representation of the data that can be used for retrieval, while the full text in the chunks can be used to generate answers. Summarization also provides a different representation of the data, enabling raw data to be matched with queries efficiently. For images and tables, summaries can significantly improve discoverability.
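
The idea of matching queries against summaries while answering from the full text can be sketched in Python as below. Two stand-ins are assumed here: `placeholder_summary` simply keeps the first sentence where a real system would call an LLM, and `retrieve` scores by word overlap where a real system would typically compare embeddings. All names and sample texts are hypothetical.

```python
def placeholder_summary(chunk: str) -> str:
    """Stand-in for an LLM-generated summary: keep only the first sentence."""
    return chunk.split(". ")[0].rstrip(".") + "."

def build_index(chunks):
    """Store each summary next to the full chunk it was derived from."""
    return [{"summary": placeholder_summary(c), "full_text": c} for c in chunks]

def retrieve(index, query):
    """Match the query against summaries, but return the full text for generation."""
    query_words = set(query.lower().split())

    def overlap(entry):
        summary_words = set(entry["summary"].lower().rstrip(".").split())
        return len(query_words & summary_words)

    return max(index, key=overlap)["full_text"]

chunks = [
    "The VPN requires two-factor authentication. Enroll your phone in the security portal before your first login.",
    "Expense reports are due monthly. Submit receipts through the finance portal by the fifth business day.",
]
index = build_index(chunks)
context = retrieve(index, "how do I set up the VPN")
print(context)
```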

・Embedding

Finally, a machine learning model is used to embed the text into vectors. Embeddings encode the semantic information in the original data, making it possible to search text based on semantic similarity rather than just keyword matches.
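
A minimal Python sketch of similarity search over embeddings follows. The three-dimensional vectors are made-up values standing in for the output of a real embedding model, which would normally produce hundreds or thousands of dimensions; only the cosine similarity calculation itself is standard.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: closer to 1.0 means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up vectors standing in for real embedding model output.
chunk_vectors = {
    "How to reset your password": [0.9, 0.1, 0.0],
    "Quarterly revenue summary": [0.0, 0.2, 0.9],
    "Office parking rules": [0.1, 0.9, 0.1],
}
# Hypothetical embedding of the query "I forgot my login credentials".
query_vector = [0.8, 0.2, 0.1]

ranked = sorted(
    chunk_vectors,
    key=lambda text: cosine_similarity(query_vector, chunk_vectors[text]),
    reverse=True,
)
print(ranked[0])
```

In this toy setup, the query shares no keywords with the password chunk, yet that chunk ranks first because its vector points in nearly the same direction as the query's.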

This is how a single file is preprocessed for use with RAG. Unstructured also explains how to build a 'preprocessing pipeline' that retrieves all files from multiple data sources and preprocesses them, so if you're interested, check it out.

in Software, Posted by log1d_ts