Gemini 2.0 Flash is dramatically better in both cost and performance for converting large volumes of PDFs for use with AI

Presentations and handouts are sometimes shared in

Ingesting Millions of PDFs and why Gemini 2.0 Changes Everything - Sergey's Blog

https://www.sergey.fyi/articles/gemini-flash-2

There are open source and proprietary solutions to convert PDFs so they can be used by RAG. However, open source solutions require orchestrating multiple specialized machine learning models for layout detection, table analysis, and markdown conversion. For example, NVIDIA's multimodal data extraction service, nv-ingest , requires the use of Kubernetes with eight services and two GPUs for AI. This is a very tedious task and 'not optimal performance,' Filimonov pointed out.

Proprietary solutions have difficulty achieving complex layouts and consistent accuracy, and are astronomically expensive when dealing with large data sets. For example, RAG analyzes hundreds of millions of PDF pages, which would be prohibitively expensive to run on a proprietary solution.

While AI models seem to be well suited to these tasks, they have yet to prove that they are more cost-effective than alternatives. For example, when using OpenAI's GPT-4o to optically recognize (OCR) PDF content, it sometimes produced false cell artifacts on a table .

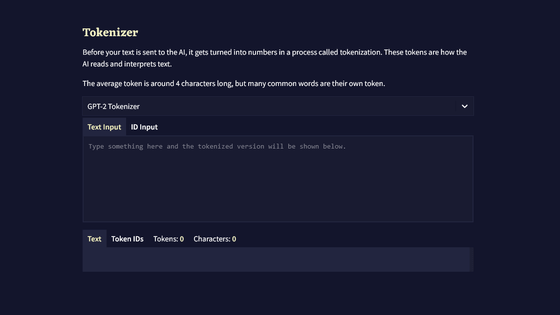

However, Google's 'Gemini 2.0 Flash' is still behind OpenAI in terms of developer experience, but it is very cost-effective. Also, while the previous model, Gemini 1.5 Flash, had problems with OCR accuracy, Gemini 2.0 Flash 'was confirmed to achieve near-perfect OCR accuracy in Matrisk.ai's internal tests,' Filimonov wrote.

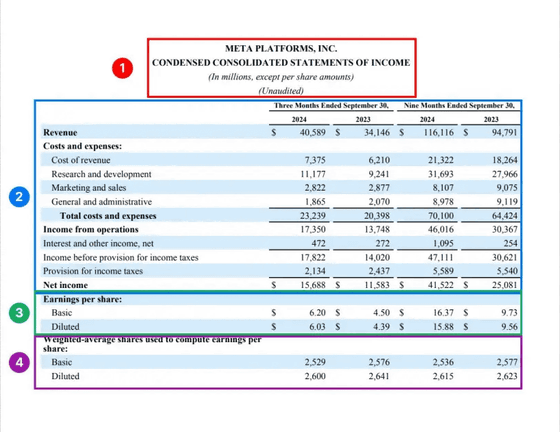

Below is a table summarizing the cost efficiency of converting PDF to Markdown format using each AI model. The cost efficiency shows how many pages of PDF can be converted per dollar (about 150 yen).

| Provider | Model | Cost-effective |

|---|---|---|

| Gemini 2.0 Flash | 6000 | |

| Gemini 2.0 Flash Lite | 12,000 (untested) | |

| Gemini 1.5 Flash | 10,000 | |

| Amazon | Amazon Textract | 1000 |

| Gemini 1.5 Pro | 700 | |

| OpenAI | GPT-4o mini | 450 |

| Llama Index | LlamaParse | 300 |

| OpenAI | GPT-4o | 200 |

| Anthropic | Claude 3.5 Sonnet | 100 |

| Reducer | Reducer | 100 |

| Lumina AI | Chunkr | 100 |

Next, we measured the conversion performance of each AI model. PDFs often have poor scanning quality, span multiple languages, and have complex table structures, but we used Reducto's rd-tablebench , a benchmark to measure how accurately text can be scanned in such 'realistic PDFs.'

| Model | Accuracy | Comments |

|---|---|---|

| Reducer | 0.90 ± 0.10 | |

| Gemini 2.0 Flash | 0.84 ± 0.16 | Near perfect |

| Sonnet | 0.81 ± 0.16 | |

| Amazon Textract | 0.80 ± 0.16 | |

| Gemini 1.5 Pro | 0.77 ± 0.17 | |

| Gemini 1.5 Flash | 0.76 ± 0.18 | |

| GPT-4o | 0.67 ± 0.19 | Causes subtle hallucination |

| GPT-4o-mini | 0.65 ± 0.23 | poor |

| Gcloud | 0.62 ± 0.21 | |

| Chunkr | 0.62 ± 0.21 |

Reducto outperforms Gemini Flash 2.0 in the benchmarks, but in cases where Gemini Flash 2.0 performs poorly, there are only minor structural changes that do not substantially affect the understanding of the table, and there are few cases where specific numbers are misread, says Filimonov.

Gemini Flash 2.0 not only analyzes tables, but also provides consistent performance with almost perfect accuracy in all aspects of converting PDF to markdown format. Therefore, using Gemini Flash 2.0 will create a simple, scalable, and inexpensive indexing pipeline,' says Filimonov.

Converting PDFs to Markdown format is only the first step. To use documents effectively with RAG, they need to be split into smaller chunks. Recent studies have shown that using LLMs for this task outperforms other approaches in terms of search accuracy. The problem with using LLMs in this step is also the cost. Traditional LLMs were costly, but this has changed with the advent of Gemini 2.0 Flash. Gemini 2.0 Flash can analyze a corpus of over 100 million pages for just $5,000.

Markdown conversion and chunking solve many of the problems in document parsing, but it has a significant limitation: it loses bounding box information, which is essential for linking extracted information to its exact location in the source PDF and giving users confidence that the data has not been forged.

For example, the following table has different bounding boxes for '1', '2', '3', and '4', but Gemini cannot accurately maintain these bounding boxes.

But because LLM has a remarkable spatial understanding , it should be able to map text to its exact location in the document, Filimonov writes. Gemini, however, struggles in this regard, producing very inaccurate bounding boxes no matter how you instruct it. This suggests that understanding of document layout is not well represented in the training data, Filimonov points out.

This is a temporary issue, and if Google incorporates more document-specific data during training or fine-tunes the model to focus more on document layout, the gap 'could be closed fairly easily,' Filimonov wrote.

'By integrating these solutions, we can build an indexing pipeline that is elegant and economical even at scale,' Filimonov said. 'We will eventually open source this work, and I expect many other companies will implement similar libraries.'

He added, 'Importantly, by solving these three problems - parsing, chunking, and bounding box detection - document ingestion into LLM is essentially 'solved.' This progress brings us very close to a future where document parsing is not only efficient but virtually effortless for all use cases.'

Related Posts: