Google develops 'InkSight', a model that extracts pen movements from photos of handwritten notes

A Google team has announced 'InkSight', a model that extracts pen movements from photos of handwritten notes. The model is said to make it possible to digitize handwritten notes while preserving the handwriting style, without the need for special tools.

A return to hand-written notes by learning to read & write

[2402.05804] InkSight: Offline-to-Online Handwriting Conversion by Learning to Read and Write

https://arxiv.org/abs/2402.05804

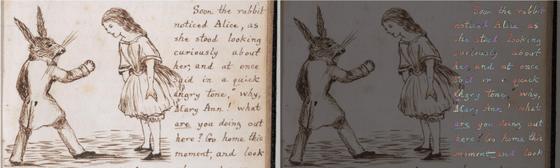

InkSight extracts 'pen movements' from handwritten content. For example, if the handwritten text on the left of the image below is input into InkSight, the pen movements are extracted as 'strokes', as shown on the right. For ease of understanding, each recognized word in the image is drawn in a different rainbow color, and within each stroke the color changes from dark to light.

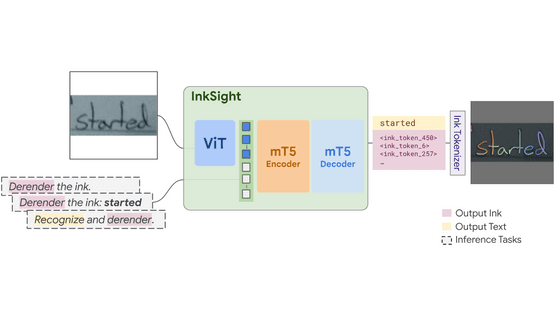
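The coloring scheme described above can be sketched in a few lines of Python. This is only an illustration, not the code used to draw the figure: the function name `stroke_colors`, the lightness range, and the even spacing of hues across words are all assumptions.

```python
import colorsys
from typing import List, Tuple

RGB = Tuple[float, float, float]


def stroke_colors(word_index: int, n_words: int, n_points: int) -> List[RGB]:
    """Return one RGB color per sampled point of a stroke.

    Hypothetical helper: the hue is chosen per word (spread over the
    rainbow), and the lightness rises from dark to light along the stroke.
    """
    hue = word_index / max(n_words, 1)      # one hue per word
    dark, light = 0.3, 0.7                  # assumed lightness range
    colors = []
    for i in range(n_points):
        # Position along the stroke in [0, 1]; a single point stays dark.
        t = i / (n_points - 1) if n_points > 1 else 0.0
        lightness = dark + t * (light - dark)
        colors.append(colorsys.hls_to_rgb(hue, lightness, 1.0))
    return colors
```

Calling `stroke_colors(0, 5, 10)` would give ten colors for a stroke in the first of five words, starting dark and ending light.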

Inside InkSight, an existing OCR model first identifies the handwritten words, and an InkSight model then converts them into strokes. The InkSight model uses the widely used

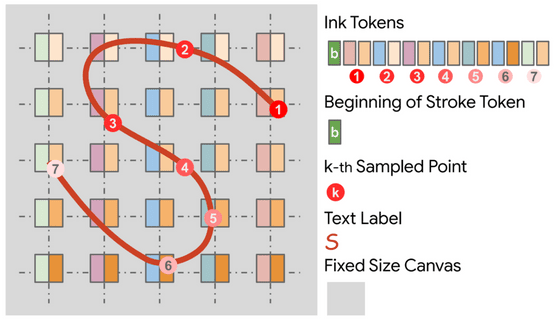

The model was trained on pairs of text images and strokes sampled from real-time writing trajectories. So that strokes can be passed into and out of the model as tokens, they are converted with a dedicated tokenizer. As shown in the figure below, the tokenizer encodes the handwriting trajectory data by emitting the token 'b', which indicates the start of a stroke, followed by tokens for the coordinates of each sampling point.

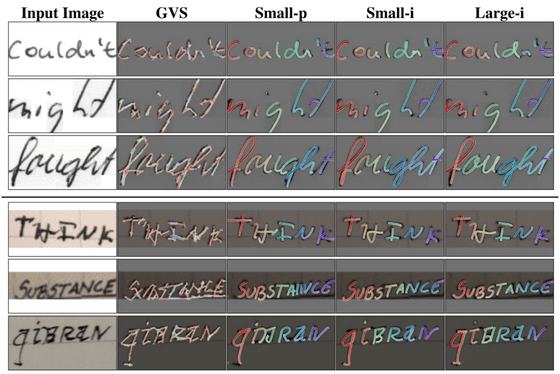
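As a rough illustration of this tokenization, the sketch below turns a list of strokes into a flat token sequence and back. It is a minimal sketch under stated assumptions, not InkSight's actual tokenizer: the token spellings (`x12`, `y34`), the grid size of 224, and the function names are all hypothetical; only the 'b' start token followed by coordinate tokens comes from the description above.

```python
from typing import List, Tuple

Point = Tuple[int, int]
Stroke = List[Point]

GRID = 224  # assumed coordinate range; not taken from the article


def tokenize_ink(strokes: List[Stroke], grid: int = GRID) -> List[str]:
    """Flatten strokes into tokens: 'b', then x/y tokens for each point."""
    tokens: List[str] = []
    for stroke in strokes:
        if not stroke:
            continue  # skip empty strokes
        tokens.append("b")  # marks the start of a stroke
        for x, y in stroke:
            # Clamp each coordinate to the assumed grid before encoding.
            xi = min(max(int(x), 0), grid)
            yi = min(max(int(y), 0), grid)
            tokens.append(f"x{xi}")
            tokens.append(f"y{yi}")
    return tokens


def detokenize_ink(tokens: List[str]) -> List[Stroke]:
    """Rebuild strokes from a token sequence produced by tokenize_ink."""
    strokes: List[Stroke] = []
    pending_x = None
    for tok in tokens:
        if tok == "b":
            strokes.append([])  # begin a new stroke
            pending_x = None
        elif tok.startswith("x"):
            pending_x = int(tok[1:])
        elif tok.startswith("y") and pending_x is not None and strokes:
            strokes[-1].append((pending_x, int(tok[1:])))
            pending_x = None
    return strokes
```

With these assumptions, two strokes `[[(1, 2), (3, 4)], [(5, 6)]]` become `['b', 'x1', 'y2', 'x3', 'y4', 'b', 'x5', 'y6']`, and `detokenize_ink` recovers the original strokes from that sequence.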

The Google team trained three models: 'Small-p' for external use, and 'Small-i' and 'Large-i' for internal use. The Small models have about 340 million parameters and the Large model about 1 billion. The figure below compares each model with the baseline.

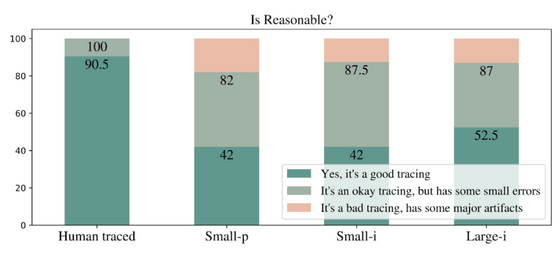

The research team also had both humans and the three InkSight models trace the same text images, then asked human evaluators to rate the traces without knowing how each one was produced. The results are shown in the figure below. 90.5% of the human traces were rated as 'good traces', and none were rated as 'bad traces'. By contrast, 42% to 52.5% of the InkSight models' traces were rated as 'good traces', and 12.5% to 18% were rated as 'bad traces'.

The team describes their research as 'the first approach to converting handwritten photos into digital ink.' The model and code used in the research can be downloaded from the GitHub repository, and the demo output can be seen on Hugging Face.

in Software, Posted by log1d_ts