How can we use a biased coin to more precisely determine 50-50 odds?

When flipping a coin, the probability of getting heads and the probability of getting tails are theoretically equal to 1/2. Here, when assuming a 'biased coin' where the probability of getting heads is not 1/2, data scientist Marton Trenzen uses Python to explain the problem of 'how to manipulate a biased coin to express a fair probability of 1/2.'

Bytepawn - Marton Trencseni – Fair coin from biased coin

https://bytepawn.com/fair-coin-from-biased-coin.html

If the probability of landing on heads (binary 0) when tossing a coin is expressed as p and the probability of landing on tails (binary 1) is expressed as (1- p ), then if the coin is biased, then p ≠ (1- p ).

Trenzeni has devised three solutions to an algorithm that can more fairly output random numbers with a probability of 1 in 2 using p and (1- p ), which are the probabilities of this biased coin.

・Neumann's probabilistic solution

As its name suggests, the von Neumann probabilistic solution is based on the probability theory of John von Neumann, a leading mathematician of the 20th century. It returns '0' if the result of flipping a biased coin twice is heads = [01], and '1' if the result is tails = [10]. If it is [00] or [11], it will try again. The key point of this solution is that both [01] and [10] have the same probability p (1- p ).

The solution is coded in Python below:

[code]def probabilistic(biased_coin):

while True:

s, w = biased_coin(), biased_coin()

if s == 0 and w == 1:

return 1

elif s == 1 and w == 0:

return 0[/code]

von Neumann's probabilistic solution is easy to understand and implement because it only looks at the results of two coin flips. It also requires less memory usage because it only needs to remember the results of the last two flips. It has also been mathematically proven to produce completely fair results in the long run. However, its weakness is that it has a low ratio of output to input because of the high probability of retries. Its efficiency drops significantly especially when the bias is large, so it may not be suitable for some coin biases.

・Statistical solutions

The statistical solution involves tossing a coin N /2 times to measure the bias, and taking the average as the estimate p1 . Then tossing it another N / 2 times, the second estimate obtained is taken as p2 . If p 2 ] p 1 , then it returns '1', and if p 1 ] p 2 , then it returns '0'. In other words, when a coin is tossed N times, if the first half of the coin lands more heads than the second half, then it outputs '1', and if the second half lands more heads than the first half, then it outputs '0'. According to the normal distribution , the probability that ' p 2 ] p 1 ' and the probability that ' p 1 ] p 2 ' are the same.

The code is as follows:

[code]def statistical(biased_coin, N):

while True:

num_flips = int(N/2.0)

s = sum([biased_coin() for _ in range(num_flips)])/num_flips

num_flips = N - num_flips

w = sum([biased_coin() for _ in range(num_flips)])/num_flips

if s < w:

return 1

elif s > w:

return 0[/code]

Statistical solutions are relatively easy to implement but do not guarantee perfect fairness, and increasing N increases accuracy but decreases efficiency.

・Permutation solving method

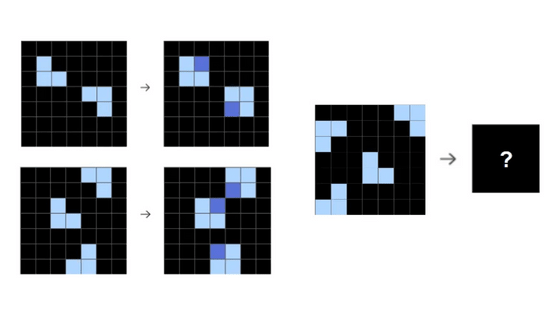

The permutation solution is based on a comment on Hacker News. Toss a coin N times, record [0] if it lands on heads, and [1] if it lands on tails, and input the final N- digit bit string. If the bit string is greater than the reverse bit string in lexicographical order, return '1', otherwise return '0'. However, if the bit string and its reverse order are the same, retry.

For example, when N = 5, 2 5 = 32 possible bit strings can be obtained, such as [10011] and [00101]. If N = 5 and heads appear three times, 10 possible bit strings are possible: [11000], [10100], [10010], [10001], [01100], [01010], [01001], [00110], [00101], and [00011]. If [00011] is input, it is smaller than the reversed [11000], so the output result is '0'. However, [10001] and [01010] are palindrome patterns that are the same even when reversed, so they are excluded and retried.

This permutation solving method is expressed in Python as follows.

[code]def permutations(biased_coin, N):

while True:

inst = ''.join([str(biased_coin()) for _ in range(N)])

revr = inst[::-1]

if inst > revr:

return 1

elif inst < revr:

return 0[/code]

If the number of heads is k , then the probability of getting a particular bit string is p k (1- p ) N - k . The important thing is that all bit strings with the number of heads = k have the same probability of appearing. That is, for all pairs of bit strings with the same k , the original bit string is always equally likely to be greater than or less than the reversed one. This method has the advantage that it does not require checking for coin bias and can theoretically generate completely fair results, but it still may be less efficient because a retry is required if a palindrome bit string appears.

After implementing and comparing three solution methods, von Neumann's probabilistic solution, statistical solution, and permutation solution, Trencini found that all methods were equivalent in accuracy and efficiency. He argues that the three solution methods, which appear to be based on different principles at first glance, are essentially just different views of the same algorithm.

And Trencini explains the concept of 'deterministic random number extraction,' which generalizes the problem of 'using a biased coin to represent a fair probability of 1 in 2.'

Bytepawn - Marton Trencseni – Randomness extractors: making fair coins out of biased coins

https://bytepawn.com/randomness-extractors-making-fair-coins-out-of-biased-coins.html

The main goal of deterministic random number generation is to generate a statistically uniform and unpredictable output sequence of bits from a sequence of biased and/or correlated input bits. The word 'deterministic' means that the process is reproducible, i.e., the same output is always obtained for the same input.

Trenczeni cites 'entropy concentration' as the core of deterministic random number extraction. In information theory, entropy is the degree of uncertainty or unpredictability of information. In a completely random bit string, the entropy of each bit is 1 bit, and it is completely impossible to predict whether the next bit will be 0 or 1, but in a biased bit string, the entropy of each bit is less than 1.

For example, if you toss a coin that has a 75% chance of landing on heads 10 times, each result will tend to land on heads more often, and is not completely random. So, instead of checking each result individually, Trencini's idea is to extract more random information by checking all 10 results together. By combining the results of 10 times into one, the amount of information is reduced, but the randomness is increased.

Trenzeni has devised a method called 'parity extraction' that applies this entropy concentration. This method groups a biased bit string into groups of N bits, and outputs '0' if the number of 1s in the bit string is even, and '1' if the number is odd.

For example, if the input bits are [0101 1110 1010] and processing is performed with N = 4, [0101] has two 1s, so it is '0,' [1110] has three 1s, so it is '1,' and [1010] has two 1s, so it is '0,' and the output is '010.' The larger the value of N , the more random the output will be, but the number of output bits will decrease accordingly.

Trenczeni also introduces 'Blum extraction,' which applies von Neumann's probabilistic algorithm to extract fair random numbers from the bit string generated when the input bits are generated by a ' Markov chain .'

The key to Blum extraction is to remember the last result for each toss of a biased coin. If heads followed by tails, output 1, if tails followed by heads, output 0, if the same result occurs repeatedly, output nothing, and update the result. This method can be used without knowing the bias of the coin, and the number of 0s and 1s output will be fewer than the number of original tosses, but it is fairer.

Trenczeni said that in the completely general case where we know nothing about the structure of the input bit string, it is impossible to generate uniform random numbers. He went on to argue that 'using parity extractors or Blum extractors, for example, it is possible to extract effective random numbers with partial information or assumptions about the input source.'

In order to empirically verify the effectiveness of these methods, it is necessary to statistically test the randomness of the generated output bit strings, so I have published the code for my homemade random number extractor and the results of my testing on GitHub.

playground/Randomness Extractors.ipynb at master · mtrencseni/playground · GitHub

https://github.com/mtrencseni/playground/blob/master/Randomness%20Extractors.ipynb

Related Posts:

in Science, Posted by log1i_yk