How to do 'video scraping': uploading a screen recording to Google AI Studio and extracting data with Gemini

AI researcher and data journalist Simon Willison explains 'video scraping,' a technique that uses Google's multimodal AI, Gemini, to extract data from screen recordings.

Video scraping: extracting JSON data from a 35 second screen capture for less than 1/10th of a cent

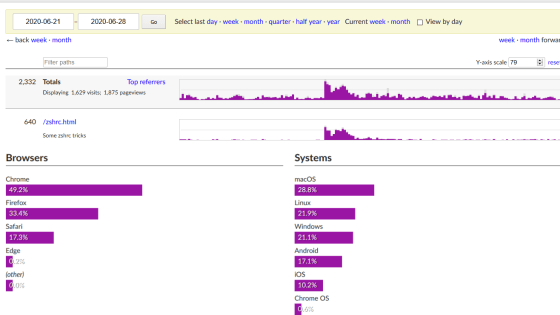

https://simonwillison.net/2024/Oct/17/video-scraping/

Cheap AI “video scraping” can now extract data from any screen recording

https://arstechnica.com/ai/2024/10/cheap-ai-video-scraping-can-now-extract-data-from-any-screen-recording/

AI researcher scrapes usable data from a 35-second screen recording for less than one cent via Google Gemini | Tom's Hardware

https://www.tomshardware.com/tech-industry/artificial-intelligence/ai-researcher-scrapes-usable-data-from-a-35-second-screen-recording-for-less-than-one-cent-via-google-gemini

One day, Willison needed to tally up numbers scattered across 12 emails. Copying and pasting each number from the emails into a spreadsheet would have been time-consuming, so he decided to try a different approach.

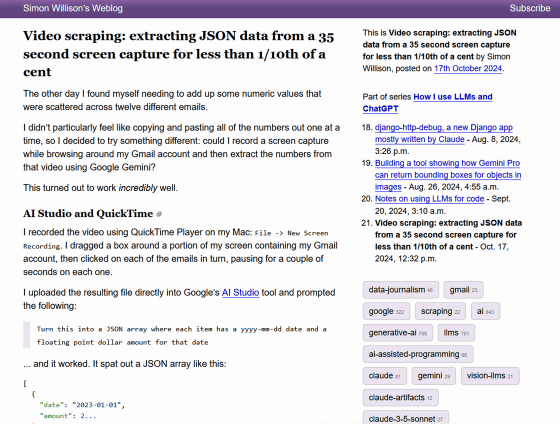

Willison came up with a data extraction method that combines Google's multimodal AI 'Gemini' with screen recording. First, he used the screen recording function built into his OS, set to capture only part of the screen so that no unnecessary personal information would be recorded. He then opened the emails he had received in Gmail one by one, pausing for a few seconds on each so that the data he wanted to extract was visible inside the capture area before moving on to the next. The result was a 35-second video.

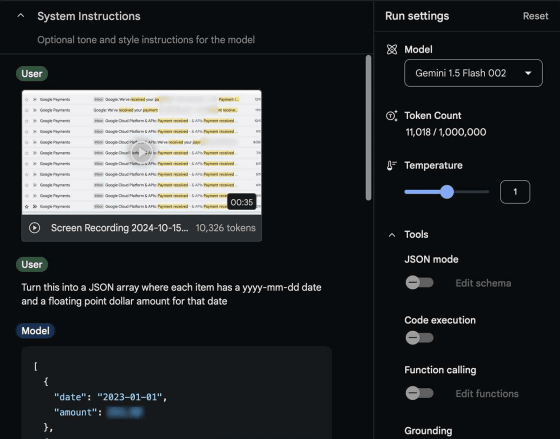

Next, he uploaded the video to Google AI Studio, a Google web service for working with Gemini, and instructed it to 'Turn this into a JSON array where each item has a yyyy-mm-dd date and a floating point dollar amount for that date.'

Gemini then correctly output the dates and amounts as a JSON array. When he asked it to convert this data to CSV, he got output that could be pasted straight into a spreadsheet.
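In the article, Gemini itself performs the JSON-to-CSV conversion. For readers who would rather do that step locally, the following is a minimal Python sketch using only the standard library. The key names `date` and `amount` and the sample values are assumptions made up for illustration; the article does not show the actual output.

```python
import csv
import io
import json

# Hypothetical sample in the shape the prompt asks for. The key names and
# the values are invented for illustration, not taken from the emails.
SAMPLE_JSON = """
[
  {"date": "2024-01-05", "amount": 100.50},
  {"date": "2024-02-05", "amount": 200.25}
]
"""


def json_array_to_csv(json_text: str) -> str:
    """Convert a JSON array of {date, amount} objects into CSV text."""
    rows = json.loads(json_text)
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["date", "amount"], lineterminator="\n"
    )
    writer.writeheader()
    for row in rows:
        # Copy only the two expected fields so extra keys are ignored.
        writer.writerow({"date": row["date"], "amount": row["amount"]})
    return buffer.getvalue()


csv_text = json_array_to_csv(SAMPLE_JSON)
print(csv_text)
```

The resulting text has a `date,amount` header row followed by one line per item, which most spreadsheet applications accept on paste or import.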

Just to be sure, Willison rewatched the video carefully, picked out the numbers himself and recalculated, and found that every value Gemini returned was correct. He also said that he had originally intended to select the latest Gemini 1.5 Pro but accidentally chose the cheaper Gemini 1.5 Flash 002; even so, the results were accurate.
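The check described above was done by eye. Part of it can also be mechanized, as in the rough sketch below: it confirms that every date parses as a real calendar date and totals the amounts, so the sum can be compared against a manual tally. This is not the method used in the article; the function name `check_and_total`, the key names, and the sample values are hypothetical.

```python
import json
from datetime import datetime
from decimal import Decimal

# Hypothetical model output; values are invented for illustration.
MODEL_OUTPUT = """
[
  {"date": "2024-01-05", "amount": 100.50},
  {"date": "2024-02-05", "amount": 200.25}
]
"""


def check_and_total(json_text: str) -> tuple[int, Decimal]:
    """Validate each item's date and return (item count, sum of amounts)."""
    # parse_float=Decimal keeps dollar amounts exact instead of binary floats.
    items = json.loads(json_text, parse_float=Decimal)
    total = Decimal("0")
    for item in items:
        # Raises ValueError if the string is not a valid year-month-day date.
        datetime.strptime(item["date"], "%Y-%m-%d")
        total += Decimal(item["amount"])
    return len(items), total


count, total = check_and_total(MODEL_OUTPUT)
print(count, total)
```

A check like this only catches malformed dates and makes the total easy to compare; it cannot tell whether the model misread a digit, so comparing against the source video is still needed.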

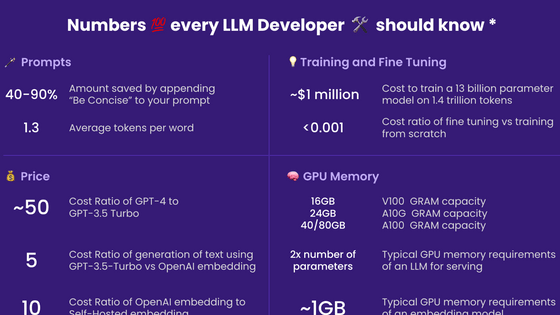

According to Google AI Studio, the whole session used 11,018 tokens, of which 10,326 were spent on the video. Gemini 1.5 Flash costs $0.075 (about 11 yen) per million tokens, so 11,018 tokens come to only about 0.08 cents (about 0.12 yen). At the time of writing, Google AI Studio itself is free to use, so Willison actually paid nothing.
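The cost figure can be reproduced with a few lines of Python. This sketch follows the article in applying the single $0.075 per million token rate to all 11,018 tokens; treating every token at that one rate is a simplifying assumption.

```python
# Figures quoted in the article.
TOTAL_TOKENS = 11_018
VIDEO_TOKENS = 10_326
PRICE_USD_PER_MILLION_TOKENS = 0.075

# Assumption: one flat rate for every token, as in the article's estimate.
cost_usd = TOTAL_TOKENS / 1_000_000 * PRICE_USD_PER_MILLION_TOKENS
cost_cents = cost_usd * 100
video_share = VIDEO_TOKENS / TOTAL_TOKENS

print(f"Cost: {cost_cents:.4f} cents")      # Cost: 0.0826 cents
print(f"Video share: {video_share:.1%}")    # Video share: 93.7%
```

The result, roughly 0.08 cents, matches the "less than 1/10th of a cent" in the title of Willison's post, and the video accounts for almost all of the tokens.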

The results of this test demonstrate the performance and scalability of Gemini, a multimodal AI that can process not only text but also audio and video.

Willison said that video scraping is easier than the alternatives: opening each email and picking out and adding up the numbers by hand, accessing Gmail data programmatically, or using browser automation tools that click through a Gmail account. Another option would be to give an existing AI tool access to the entire email account and have it do the same job, but that comes with security risks.

'The great thing about this video scraping technique is that it can use anything that's on your screen, and you have complete control over what you ultimately expose to your AI model,' Willison said. 'The results I get are entirely dependent on how I position the screen capture area and how I click. There's no setup cost to this; I just sign in to the site, hit record, browse a bit, and throw the video into Gemini.'

Technology news site Ars Technica points out that the data sources data journalists and others work with often differ in format, storage location, and presentation, which makes automated scraping difficult. Because this method relies on AI image recognition, it can handle sources with different display formats in the same way, potentially sidestepping traditional barriers to data extraction.

'I'm sure I'll be using this technique more often in the future,' Willison said. 'It also has applications in the world of data journalism, where there's a lot of need to scrape data from sources that don't want to be scraped.'

in AI, Software, Web Service, Posted by log1h_ik