Google updates Gemini 1.5 Pro, expanding context window from 1 million to 2 million tokens

At its developer event 'Google I/O', held on May 14, 2024 local time, Google announced an update to its high-performance AI model 'Gemini 1.5 Pro.'

Gemini 1.5 Pro updates, 1.5 Flash debuts and 2 new Gemma models

https://blog.google/technology/developers/gemini-gemma-developer-updates-may-2024/

Google Gemini update: Access to 1.5 Pro and new features

Gemini 1.5 Pro was announced on February 15, 2024, and is said to be able to handle up to one hour of video or 700,000 words of text. At first it was available only to some users as a 'limited test,' but a public preview launched on April 9, 2024, and anyone can now use it via the Gemini API.

Google launches public preview of Gemini 1.5 Pro, with new features such as voice understanding, system commands, and JSON mode - GIGAZINE

At Google I/O in May 2024, Google reported that Gemini 1.5 Pro had received a series of quality improvements across major use cases such as translation, coding, and reasoning. Gemini 1.5 Pro originally launched with a context window of 1 million tokens, and this announcement revealed that the window has been doubled to 2 million tokens in a private preview.

In order to take advantage of the 2 million token context window, you will need to join the waiting list for

Google also announced that it will bring Gemini 1.5 Pro to its AI chat service 'Gemini Advanced.' According to Google, this will let Gemini Advanced make sense of up to 1,500 pages of documents in total or summarize around 100 emails. Google added, 'In the near future, it will be able to handle up to an hour of video content and more than 30,000 lines of code.'

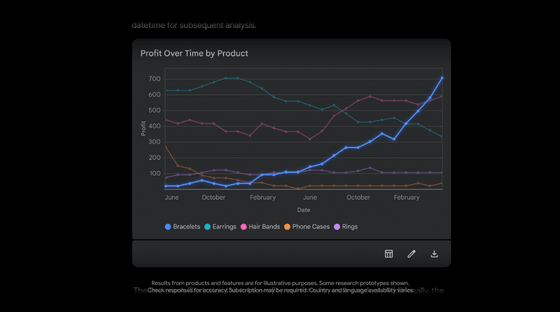

To take advantage of the larger context window, Google has also added the ability to upload files to Gemini Advanced directly via Google Drive. This lets users quickly get answers about complex documents, and ask Gemini to analyze data in uploaded spreadsheets and build charts from it. Google states that 'uploaded data is not used to train AI models.'

Google also announced that 'Gemini Live,' a voice conversation feature for Gemini Advanced subscribers, will be released in the coming months. Describing Gemini Live, Google says, 'With Gemini Live, you can choose from multiple natural voices. You can also speak at your own pace and pause in the middle of a response to clarify your question, just like in any other conversation.' Google further revealed that it plans to add camera support to Gemini Live in the second half of 2024, enabling conversations about whatever the camera is capturing.

in Software, Posted by log1r_ut