'LLM Visualization' is a site that visualizes the structure of large language models in 3D and shows, in an easy-to-follow way, which calculations are being performed.

Chat AIs such as ChatGPT perform numerous calculations internally to generate text. 'LLM Visualization' is a site that lets you see in 3D what parameters are stored inside such a model and what calculations it performs.

LLM Visualization

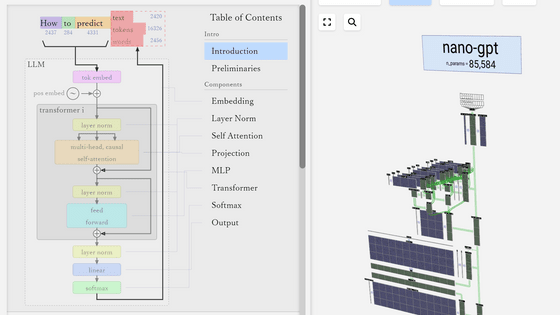

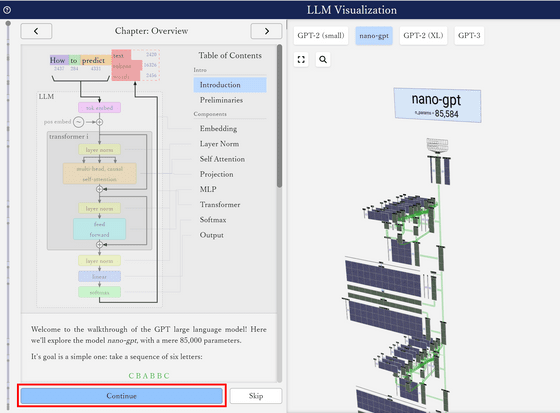

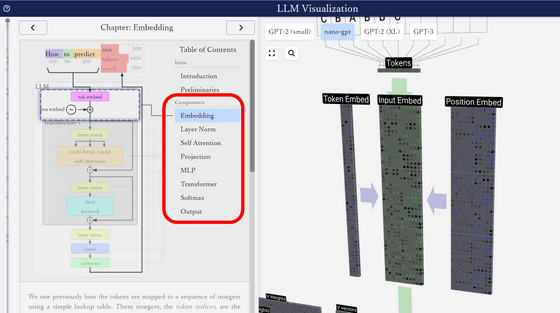

This is what the site looks like when you open it. There is an explanation on the left side of the screen and a 3D model on the right. Click 'Continue' in the explanation.

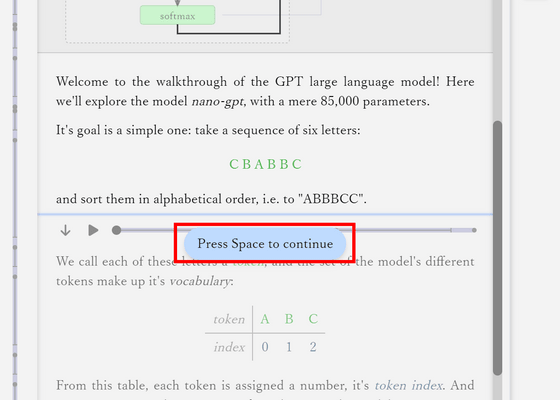

The explanation uses a model called 'nano-gpt', which has approximately 85,000 parameters, to perform a task that sorts a sequence made up of three types of characters, so that you can follow the calculations inside a large language model step by step. Press the spacebar to advance to the next section.
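The model never works with the letters themselves; each character is first turned into an integer token index. Here's a minimal sketch of such a character-level tokenizer for a three-letter vocabulary. The mapping A=0, B=1, C=2 and the names `encode` and `decode` are assumptions for illustration, not taken from the site.

```python
# Hypothetical character-level tokenizer for a three-token vocabulary.
# The ordering A=0, B=1, C=2 is an assumption made for illustration.
VOCAB = ["A", "B", "C"]
STOI = {ch: i for i, ch in enumerate(VOCAB)}  # character -> token index


def encode(text: str) -> list[int]:
    """Turn a string of A/B/C characters into token indices."""
    return [STOI[ch] for ch in text]


def decode(ids: list[int]) -> str:
    """Turn token indices back into characters."""
    return "".join(VOCAB[i] for i in ids)


ids = encode("CBABBC")
print(ids)          # [2, 1, 0, 1, 1, 2]
print(decode(ids))  # CBABBC
```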

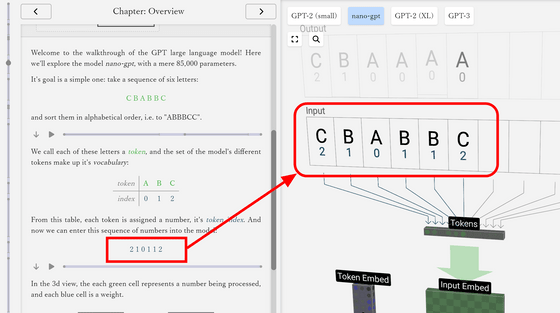

The relevant parts of the 3D model are highlighted as the explanation progresses, making it easy to see which part is being discussed.

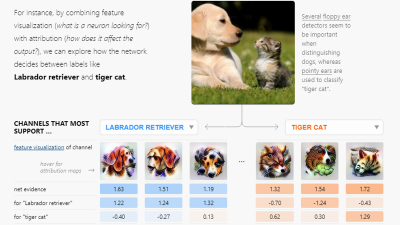

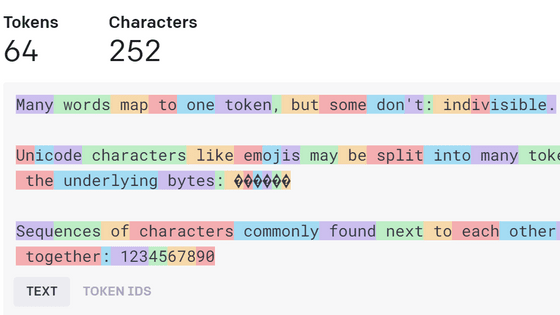

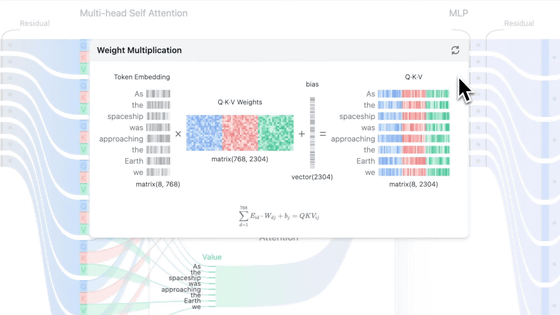

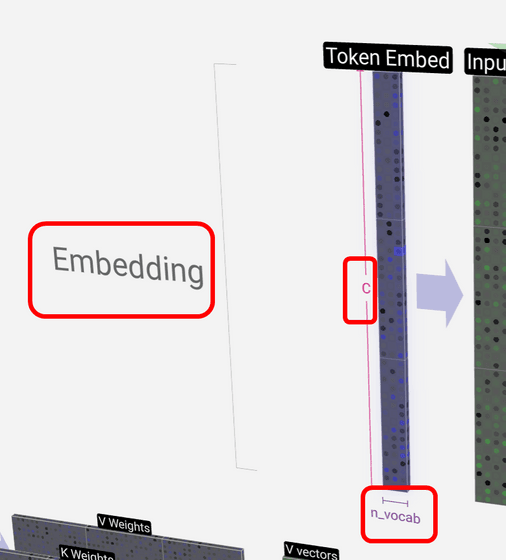

When you hover the cursor over a part of the 3D model, you can check details such as which structure the part belongs to, its number of rows, and its number of columns. For 'Token Embed' in the figure below, the display shows that it belongs to 'Embedding', that its number of rows is 'C', the number of channels (the size of each embedding vector), and that its number of columns is 'n_vocab', the vocabulary size, which is large in typical models. nano-gpt is an extremely small model that handles only three characters, 'A', 'B', and 'C', so Token Embed needs only three columns.

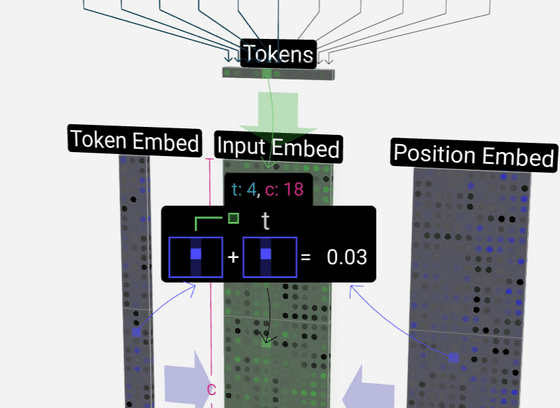
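The 'C rows by n_vocab columns' shape means that each column of Token Embed is the vector for one token, so embedding a token amounts to picking out its column. Here's a minimal sketch in plain Python, assuming a toy channel count of C = 4 and made-up weight values; in the real model the values are learned during training and C is larger.

```python
# Toy Token Embed matrix: C rows x n_vocab columns.
# C = 4 and the values are made up for illustration (real ones are learned).
C, N_VOCAB = 4, 3
token_embed = [
    [0.1, 0.2, 0.3],    # channel 0
    [-0.5, 0.0, 0.5],   # channel 1
    [1.0, 1.1, 1.2],    # channel 2
    [0.0, -0.1, -0.2],  # channel 3
]


def embed_token(token_id: int) -> list[float]:
    """Return column `token_id`: the C-dimensional embedding of that token."""
    if not 0 <= token_id < N_VOCAB:
        raise ValueError(f"token id {token_id} is outside the vocabulary")
    return [token_embed[c][token_id] for c in range(C)]


print(embed_token(1))  # [0.2, 0.0, 1.1, -0.1]
```

Because there are only three tokens, the whole table is three columns wide, which is why nano-gpt's Token Embed block looks so narrow compared with a model that has a large vocabulary.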

Hovering over a part where a result is computed also shows its formula. 'Input Embed' is calculated by adding 'Token Embed' and 'Position Embed'. Green blocks hold values computed from the input, while blue blocks are parameters whose values are adjusted during training.
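The 'Input Embed' formula is an element-wise sum: for the token at position t, take that token's column of Token Embed and add column t of Position Embed. Here's a minimal sketch with made-up toy values, assuming C = 2 channels, three tokens, and a sequence length of 3; the function name `input_embed` is hypothetical.

```python
# Blue blocks: learned parameters (toy values chosen for illustration).
token_embed = [[1.0, 2.0, 3.0],        # C = 2 rows, n_vocab = 3 columns
               [0.5, 0.5, 0.5]]
position_embed = [[0.25, 0.5, 0.75],   # C = 2 rows, T = 3 positions
                  [0.0, 1.0, 2.0]]


def input_embed(token_ids: list[int]) -> list[list[float]]:
    """Green block: for each position t, token column + position column.

    Returns one C-dimensional vector per position.
    """
    n_channels = len(token_embed)
    return [
        [token_embed[c][tok] + position_embed[c][t] for c in range(n_channels)]
        for t, tok in enumerate(token_ids)
    ]


print(input_embed([2, 0, 1]))  # [[3.25, 0.5], [1.5, 1.5], [2.75, 2.5]]
```

Adding a position-dependent vector is what lets the same token end up with different input vectors depending on where it appears in the sequence.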

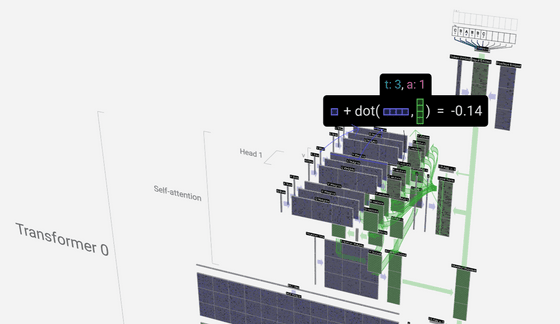

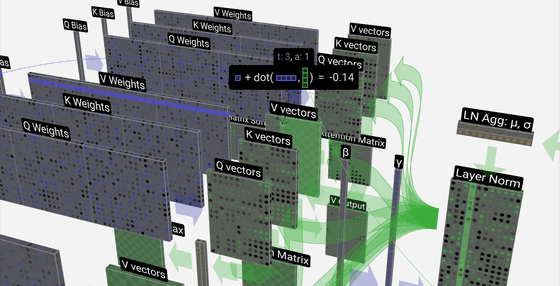

In places where the structure is complicated, 'which structure it belongs to' is displayed hierarchically.

If you want to examine the internal structure in more detail, just zoom in.

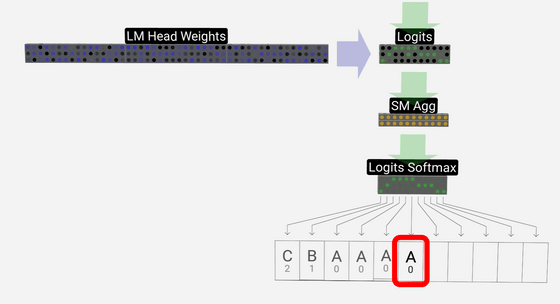

After all these calculations, the model outputs 'A' as its prediction for the next character. By feeding this prediction back into the input, the model can make one prediction after another.
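The feedback loop described above is ordinary greedy autoregressive generation. Here's a minimal sketch, assuming a hypothetical stand-in `toy_logits` in place of the real network: it simply scores the next character of the sorted input highest, mimicking the sorting task. A real model would compute these scores with the layers shown in the 3D view.

```python
from typing import Callable, List

VOCAB = ["A", "B", "C"]


def toy_logits(tokens: List[str], n_input: int) -> List[float]:
    """Hypothetical stand-in for nano-gpt: score each vocabulary entry.

    It gives 1.0 to the next character of the sorted input and 0.0 to the rest.
    """
    target = sorted(tokens[:n_input])[len(tokens) - n_input]
    return [1.0 if ch == target else 0.0 for ch in VOCAB]


def generate(prompt: str, n_new: int,
             logits_fn: Callable[[List[str], int], List[float]]) -> str:
    """Greedy generation: predict one token, append it, and repeat."""
    tokens = list(prompt)
    for _ in range(n_new):
        scores = logits_fn(tokens, len(prompt))
        best = max(range(len(VOCAB)), key=lambda i: scores[i])  # argmax
        tokens.append(VOCAB[best])  # feed the prediction back as input
    return "".join(tokens[len(prompt):])


print(generate("CBABBC", 6, toy_logits))  # ABBBCC
```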

The site also provides detailed explanations of each internal structure, so check it out if you are interested.

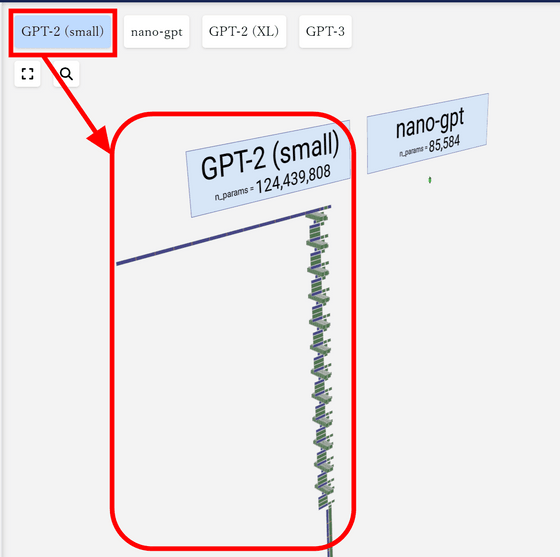

The explanation uses nano-gpt, which has approximately 85,000 parameters. Comparing it with GPT-2 (small), which has 124 million parameters, gives the result shown in the figure below.

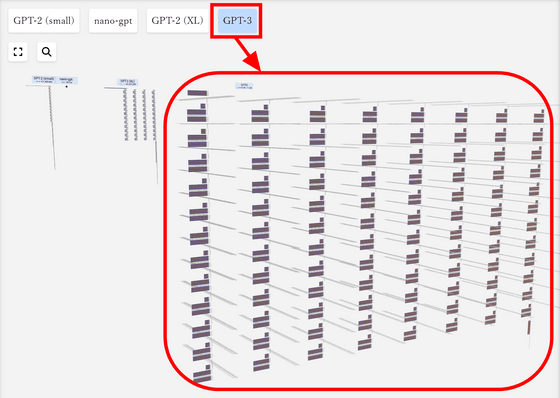

The size of GPT-3, which has approximately 175 billion parameters, is shown in the figure below. Thanks to the 3D format, you can see at a glance how much the models differ in size.
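The parameter counts quoted above can be roughly reproduced by hand. Here's a minimal sketch that counts the weights of a GPT-2-style decoder, assuming the published GPT-2 layout: learned position embeddings, biases on every linear layer, two LayerNorms per block, a 4x-wide MLP, and an output head tied to the token embedding. For GPT-3 it plugs in d_model = 12288, 96 layers, a 2048-token context, and the same 50257-token vocabulary, treating GPT-3 as a scaled-up GPT-2, which is an approximation.

```python
def gpt2_style_param_count(n_vocab: int, n_ctx: int, d_model: int, n_layer: int) -> int:
    """Count weights in a GPT-2-style decoder (assumes a tied output head)."""
    embeddings = n_vocab * d_model + n_ctx * d_model  # token + position embeddings
    per_layer = (
        2 * d_model                              # LayerNorm 1 (gain + bias)
        + d_model * 3 * d_model + 3 * d_model    # Q, K, V projection
        + d_model * d_model + d_model            # attention output projection
        + 2 * d_model                            # LayerNorm 2
        + d_model * 4 * d_model + 4 * d_model    # MLP expand (4x hidden)
        + 4 * d_model * d_model + d_model        # MLP project back
    )
    final_ln = 2 * d_model
    # The output head reuses the token embedding, so it adds no extra weights.
    return embeddings + n_layer * per_layer + final_ln


gpt2_small = gpt2_style_param_count(50257, 1024, 768, 12)
gpt3 = gpt2_style_param_count(50257, 2048, 12288, 96)
print(f"{gpt2_small:,}")            # 124,439,808
print(f"{gpt3 / 1e9:.1f} billion")  # 174.6 billion
```

Almost all of the weight sits in the roughly 12 x d_model² parameters of each block, which is why the block stack dominates the 3D view as the models grow.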


in Software, Review, Web Application, Posted by log1d_ts