Google's chatbot AI 'Bard' finally surpasses GPT-4 in benchmark score and rises to second place

Large Model Systems Org (LMSYS Org) is an open research organization established in collaboration with the University of California, Berkeley, the University of California, San Diego, and Carnegie Mellon University. It jointly develops and provides datasets, open models, and evaluation tools for large-scale machine learning models. LMSYS Org has reported that Google's chatbot AI 'Bard with Gemini Pro' has risen to 2nd place on the large-scale language model benchmark platform developed by LMSYS, surpassing some of OpenAI's GPT-4 models.

LMSys Chatbot Arena Leaderboard - a Hugging Face Space by lmsys

https://huggingface.co/spaces/lmsys/chatbot-arena-leaderboard

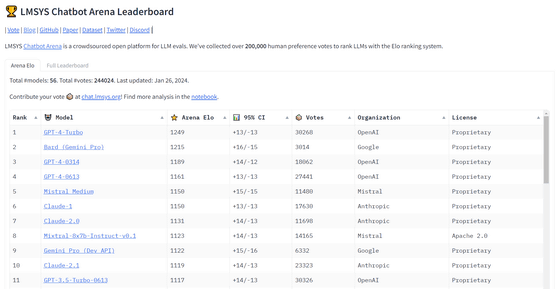

LMSYS Org is developing 'Chatbot Arena', a benchmarking platform for large-scale language models. The benchmark invites human users to an open chat, has them converse with two anonymous AI models and vote on which one responded better, and ranks the models using the Elo rating system used in chess.
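To illustrate how pairwise votes can turn into a ranking, here is a minimal sketch of the classic chess-style Elo update. It is not necessarily the exact computation LMSYS uses: the K-factor of 32, the starting rating of 1000, the function names, and the model names `model-x`, `model-y`, and `model-z` are all assumptions made for this example.

```python
from collections import defaultdict


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(ratings, model_a, model_b, winner, k=32.0):
    """Apply one vote in place. `winner` is 'a', 'b', or 'tie'."""
    score_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]
    exp_a = expected_score(ratings[model_a], ratings[model_b])
    # The winner gains what the loser gives up, scaled by how surprising the result was.
    ratings[model_a] += k * (score_a - exp_a)
    ratings[model_b] += k * ((1.0 - score_a) - (1.0 - exp_a))


def rank_models(votes, initial=1000.0, k=32.0):
    """Replay votes in order and return (model, rating) pairs, best first."""
    ratings = defaultdict(lambda: initial)  # assumed starting rating
    for model_a, model_b, winner in votes:
        update_elo(ratings, model_a, model_b, winner, k)
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical votes, not real Chatbot Arena data.
    votes = [
        ("model-x", "model-y", "a"),
        ("model-y", "model-z", "tie"),
        ("model-x", "model-z", "a"),
    ]
    for name, rating in rank_models(votes):
        print(f"{name}: {rating:.1f}")
```

Because each update depends on the ratings at that moment, the order in which votes are replayed affects the final numbers in this simple online form.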

In the interactive chat AI benchmark ranking, GPT-4-based ChatGPT is ranked first, Claude-v1 is second, and Google's PaLM 2 is also ranked in the top 10 - GIGAZINE

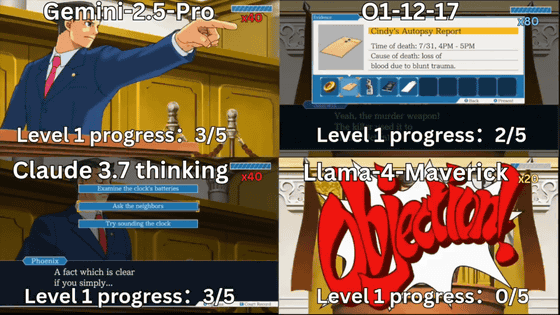

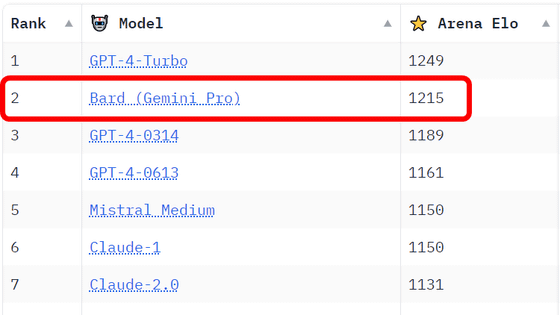

According to LMSYS Org, as of January 26, 2024, the Chatbot Arena Elo ranking has OpenAI's GPT-4 Turbo in 1st place, Google's Bard (Gemini Pro) in 2nd place, OpenAI's GPT-4 version 0314 in 3rd place, and OpenAI's GPT-4 version 0613 in 4th place.

Breaking News from Arena

— lmsys.org (@lmsysorg) January 26, 2024

Google's Bard has just made a stunning leap, surpassing GPT-4 to the SECOND SPOT on the leaderboard! Big congrats to @Google for the remarkable achievement!

The race is heating up like never before! Super excited to see what's next for Bard + Gemini… pic.twitter.com/QPtsqZdJhC

GPT-4 version 0314 is the first released version of the GPT-4 model offered through 'Azure OpenAI Service' on Microsoft Azure, and GPT-4 version 0613 is the second released version. The Bard that surpassed these GPT-4 models in Elo rating this time is 'Bard with Gemini Pro', which is based on Gemini Pro, the multimodal AI developed by Google.

The plots below summarize the sampled rating estimates and the match win rate for each model over 1000 games.

Sorry the above screenshots are not up-to-date. Here are the correct plots for model ratings and win-rate.

— lmsys.org (@lmsysorg) January 26, 2024

Check out leaderboard link for full details: https://t.co/MsbfthaZlk

Also, we welcome the community to compare Bard and other models at https://t.co/4LVJjx4pZi ! pic.twitter.com/G8Z99iqpvO

LMSYS Org commented, "Google's Bard has made a spectacular leap forward, surpassing GPT-4 and taking second place in the ranking. Big congratulations to Google for their wonderful achievement!" LMSYS Org is also looking forward to the results of the Gemini Ultra-based Bard, which is scheduled for release in the future.


in Software, Posted by log1i_yk