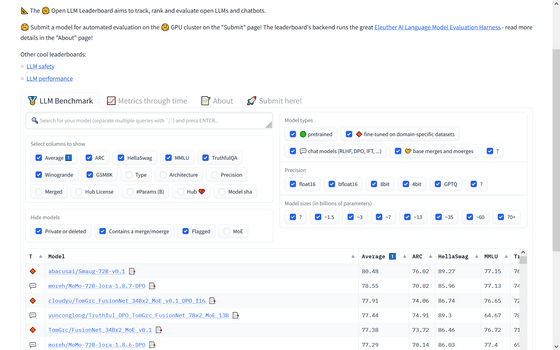

The open-source LLM 'Smaug-72B', released by Abacus AI, has topped Hugging Face's Open LLM Leaderboard and has been reported to outperform GPT-3.5 on several benchmarks.

'Smaug-72B', created by fine-tuning Alibaba's open-source language model 'Qwen-72B', is available on Hugging Face.

abacusai/Smaug-72B-v0.1 · Hugging Face

https://huggingface.co/abacusai/Smaug-72B-v0.1

Smaug-72B - The Best Open Source Model In The World - Top of Hugging LLM LeaderBoard!!

— Bindu Reddy (@bindureddy) February 6, 2024

Smaug72B from Abacus AI is available now on Hugging Face, is on top of the LLM leaderboard, and is the first model with an average score of 80!!

In other words, it is the world's best… pic.twitter.com/CGHawmLhqI

Meet 'Smaug-72B': The new king of open-source AI | VentureBeat

https://venturebeat.com/ai/meet-smaug-72b-the-new-king-of-open-source-ai/

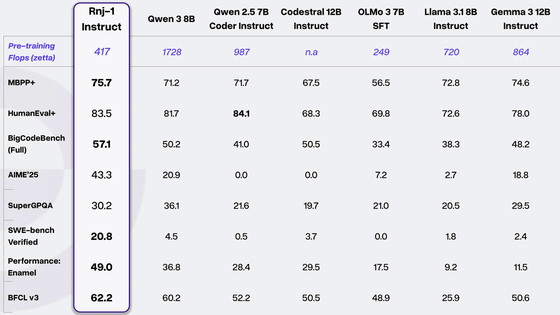

Developed by Abacus AI, an AI company based in San Francisco, California, Smaug-72B achieved high scores on prominent benchmarks such as ARC, HellaSwag, MMLU, TruthfulQA, Winogrande, and GSM8K, earning the highest average score among all open-source language models.

The leaderboard summarizing the benchmark scores of open-source large language models can be accessed at the link below.

Open LLM Leaderboard - a Hugging Face Space by HuggingFaceH4

https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

Smaug-72B outperforms the large language models 'GPT-3.5' and 'Mistral Medium' on several benchmarks. In particular, its average score is nearly 7 points higher than that of its base model, 'Qwen-72B'.

Although Smaug-72B still falls short of the 90 to 100 average score that indicates human-level performance, it is the first open-source model to score above 80. The technology outlet VentureBeat noted that this suggests open-source language models may soon catch up to the long-guarded capabilities of Big Tech.

Bindu Reddy, CEO of Abacus AI, said: 'Smaug-72B is now available on Hugging Face. This model is at the top of the LLM leaderboard and is the first model to reach an average score of 80. In other words, it's the world's best open-source foundation model.' She added: 'Our next goal is to publish these techniques in a research paper and apply them to the best existing Mistral models.'

Further details about Smaug-72B will be provided in a future paper.

in Software, Posted by log1p_kr