1,008 child sexual abuse images found in "LAION-5B," the dataset of over 5 billion images used to train image generation AI such as "Stable Diffusion," with removal underway

According to an investigation by the Stanford Internet Observatory (SIO), as reported in the following sources:

Investigation Finds AI Image Generation Models Trained on Child Abuse | FSI

https://cyber.fsi.stanford.edu/io/news/investigation-finds-ai-image-generation-models-trained-child-abuse

Largest Dataset Powering AI Images Removed After Discovery of Child Sexual Abuse Material

https://www.404media.co/laion-datasets-removed-stanford-csam-child-abuse/

Large AI Dataset Has Over 1,000 Child Abuse Images, Researchers Find - Bloomberg

https://www.bloomberg.com/news/articles/2023-12-20/large-ai-dataset-has-over-1-000-child-abuse-images-researchers-find

Child abuse images found in AI training data, Stanford study reports

https://www.axios.com/2023/12/20/ai-training-data-child-abuse-images-stanford

Amid the rapid development of machine-learning-based generative models, the child safety organization Thorn and the Stanford Internet Observatory (SIO) previously found that open source generative AI can be used to produce child sexual abuse material (CSAM). Following this, new research has revealed that LAION-5B, an open source dataset of more than 5 billion images used to train generative AI, contains at least 1,008 CSAM images. According to the investigation, LAION-5B picked up known CSAM from social media, websites, popular adult video sites, and other sources.

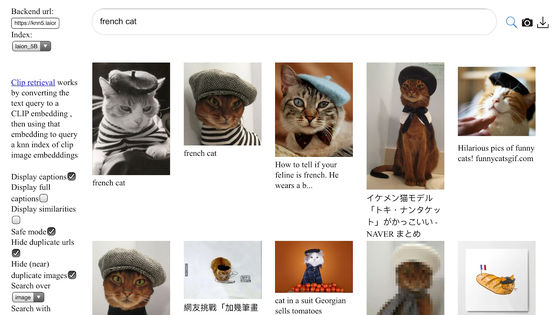

SIO's research team has already reported the URLs of the images to the National Center for Missing and Exploited Children (NCMEC) in the United States and the Canadian Centre for Child Protection (C3P), and work to remove the images was underway at the time of writing. The investigation mainly used PhotoDNA, a hashing tool used for CSAM matching. The researchers did not view CSAM directly; instead, they reported images with matching hashes to NCMEC, and where confirmation was required, C3P carried it out.

Minimizing CSAM in datasets used to train AI models is especially hard for open datasets: because no central authority hosts the data, it becomes very difficult to clean the data or to stop its distribution.

The research team added, "Images collected for future datasets should be checked against lists of known CSAM by using detection tools like PhotoDNA and by partnering with child safety organizations like NCMEC and C3P."
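The matching step the researchers recommend can be illustrated with a minimal sketch. It assumes a blocklist of hashes supplied by a child safety organization and uses SHA-256 purely as a stand-in: PhotoDNA is a proprietary perceptual hash that tolerates resizing and re-encoding, whereas SHA-256 only catches byte-identical files. The function names and the example data below are hypothetical and are not taken from the investigation.

```python
import hashlib
from typing import Iterable, List, Set, Tuple


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the raw bytes (a stand-in for a perceptual hash)."""
    return hashlib.sha256(data).hexdigest()


def filter_known_matches(
    items: Iterable[Tuple[str, bytes]], blocklist: Set[str]
) -> Tuple[List[Tuple[str, bytes]], List[str]]:
    """Split (url, bytes) pairs into kept items and URLs whose hash is on the blocklist.

    Only hashes are compared, so nobody has to view the content itself.
    """
    kept: List[Tuple[str, bytes]] = []
    flagged_urls: List[str] = []
    for url, data in items:
        if sha256_hex(data) in blocklist:
            flagged_urls.append(url)  # to be reported, not included in the dataset
        else:
            kept.append((url, data))
    return kept, flagged_urls


# Hypothetical example using placeholder bytes instead of real image files.
candidates = [
    ("https://example.com/a.png", b"placeholder-a"),
    ("https://example.com/b.png", b"placeholder-b"),
]
known_hashes = {sha256_hex(b"placeholder-b")}
kept, flagged_urls = filter_known_matches(candidates, known_hashes)
```

In this sketch, flagged entries are dropped from the dataset and only their URLs are retained, mirroring the way the researchers reported matching URLs rather than inspecting the images.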

In response, a spokesperson for LAION, the German non-profit organization that developed LAION-5B, explained that they have

Christoph Schuhmann, founder of LAION, told Bloomberg that he was not aware that the dataset included child nudity, and that if such content came to light, LAION would remove the links immediately.

Stable Diffusion 2.0, the latest version of Stable Diffusion, is trained on data from LAION-5B that was filtered for "unsafe images," which makes it difficult for users to generate sexually explicit images. Stable Diffusion 1.5, however, makes it relatively easy to generate sexually explicit content, and content created with it is widespread on the Internet.

When Bloomberg asked Stability AI, the developer of Stable Diffusion, about this, a company spokesperson commented, "We are committed to preventing the misuse of AI, and using Stable Diffusion for illegal activities, including creating or editing CSAM, is prohibited." Stability AI also explained that "Stable Diffusion 1.5 was not developed by Stability AI, but was released by Runway, an AI startup that collaborated in creating the original version of Stable Diffusion." Runway, on the other hand, reportedly said that Stable Diffusion 1.5 was "released jointly with Stability AI."

A spokesperson for Stability AI said, "We have implemented filters to block unsafe prompts and unsafe outputs when users interact with models on our platform. We have also invested in content labeling capabilities to help identify images generated on our platform, making it harder for bad actors to exploit our AI."

in Software, Posted by logu_ii