LAION-5B, the dataset used to train the image-generating AI Stable Diffusion, contains photos of children taken without their consent, and some of the children can be identified


Brazil: Children's Personal Photos Misused to Power AI Tools | Human Rights Watch

https://www.hrw.org/news/2024/06/10/brazil-childrens-personal-photos-misused-power-ai-tools

AI trained on photos from kids' entire childhood without their consent | Ars Technica

https://arstechnica.com/tech-policy/2024/06/ai-trained-on-photos-from-kids-entire-childhood-without-their-consent/

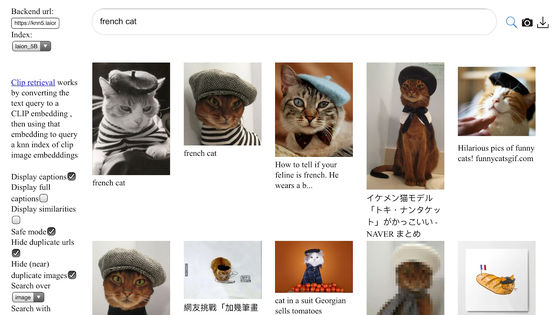

LAION-5B is an open dataset released in March 2022 by the German non-profit Large-scale Artificial Intelligence Open Network (LAION). It consists of 5.85 billion image-text pairs filtered with CLIP, a model that scores how well an image and a piece of text match. The pairs were collected by parsing Common Crawl web archives and keeping the images whose accompanying text CLIP judged to be closely matched. Because the collection is automated and enormous, even the dataset's creators cannot know exactly what it contains.
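A minimal sketch of this kind of similarity filtering, assuming the image and text embeddings have already been computed by a CLIP-style model (the embeddings, URLs, and the 0.28 threshold below are illustrative assumptions, not LAION's actual pipeline code). Note that the filter only checks whether a caption matches its image; nothing in it asks what the image shows or whether its subject consented.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]
# Hypothetical record: (image URL, caption, image embedding, text embedding)
Pair = Tuple[str, str, Vector, Vector]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat empty/zero vectors as unrelated
    return dot / (norm_a * norm_b)


def filter_pairs(pairs: List[Pair], threshold: float = 0.28) -> List[Tuple[str, str]]:
    """Keep (url, caption) for pairs whose image and text embeddings agree.

    The threshold is an illustrative assumption. Only image-text agreement
    is tested; the content of the image itself is never inspected.
    """
    kept = []
    for url, caption, img_emb, txt_emb in pairs:
        if cosine_similarity(img_emb, txt_emb) >= threshold:
            kept.append((url, caption))
    return kept


if __name__ == "__main__":
    toy = [
        ("https://example.com/a.jpg", "a dog on a beach", [1.0, 0.0, 0.0], [0.9, 0.1, 0.0]),
        ("https://example.com/b.jpg", "click here", [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]),
    ]
    print(filter_pairs(toy))
```

With the toy vectors, the first pair (similarity about 0.99) is kept and the second (similarity 0) is dropped.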

LAION-5B has drawn criticism before: an earlier investigation found that it contained more than 1,000 images of known child sexual abuse material (CSAM) drawn from social media, websites, popular adult video sites, and other sources, and those images were subsequently removed.

It was discovered that the 5 billion+ image set 'LAION-5B' used in the image generation AI 'Stable Diffusion' contained 1,008 child pornography images and will be deleted - GIGAZINE

In a new investigation, Human Rights Watch (HRW) researcher Hye Jung Han and her colleagues found 170 photos of children from at least 10 Brazilian states in the LAION-5B dataset. In many cases the children could be identified: some captions included their names, the dataset stored the URLs where the images were posted, and the locations where the photos were taken could be determined.

The photos span the whole of childhood: a newborn held in a doctor's gloved hands, a toddler blowing out the candles on a birthday cake, a child dancing in his underwear at home, a student giving a presentation at school, and a teenager posing for photos at a high school festival. Many were originally meant to be seen by only a few people; they had been posted to personal or parenting blogs, or were stills taken from YouTube videos with very few views.

LAION confirmed that the photos of children found by HRW were in the dataset and promised to remove them. Asked for comment by the technology outlet Ars Technica, LAION spokesperson Nate Tyler said, 'This is a very concerning issue, and as a non-profit, volunteer organization, we will do our part to help.' However, HRW examined less than 0.0001% of the images in LAION-5B, so the photos it found are likely just the tip of the iceberg.
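To put that sample size in perspective, 0.0001% of the dataset's 5.85 billion image-text pairs works out to:

$$
5.85 \times 10^{9} \times \frac{0.0001}{100} = 5.85 \times 10^{9} \times 10^{-6} = 5{,}850
$$

so HRW reviewed fewer than roughly 5,850 entries, and 170 identifiable photos of Brazilian children turned up within that small slice.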

The photos of children in LAION-5B are used to train image-generating AI models. LAION says that models trained on the dataset cannot reproduce the original images verbatim. However, given a string of incidents around the world in which image-generating AI has been used to create nude images of women, there is a risk that these photos of children are contributing to the generation of sexual images of children.

The problem of male students creating 'deep nudes' of girls using generative AI apps is becoming more serious - GIGAZINE

At least 85 girls in Brazil have been targeted with sexually explicit deepfakes created with image-generating AI. HRW notes, 'Fabricated media has existed for a long time, but creating it required time, resources, and expertise, and the results were mostly unrealistic. Today's AI tools can produce lifelike images in seconds, and they are often free and easy to use, so non-consensual deepfakes risk spreading and being recirculated online for the rest of a victim's life, causing lasting harm.'

'Children should not have to live in fear that their photos might be stolen and weaponized against them. Governments should urgently adopt policies to protect children's data from AI-fueled misuse,' Han said, stressing the need to protect children from the harms of generative AI.

Follow-up:

Child sexual abuse content was found in the data set 'LAION-5B' used for Stable Diffusion, and the developer released 'Re-LAION-5B' with the links removed - GIGAZINE


in AI, Software, Web Service, Posted by log1h_ik