A data library that summarizes more than 10,000 large language models (LLMs) and visualizes their download counts and similarities in an easy-to-understand way has been released

Since the second half of 2022, countless large language models (LLMs) and AI services such as 'ChatGPT' and 'Bard' have appeared, and users around the world have begun to actively use generative AI. Many of these large language models have been deposited in

[2307.09793] On the Origin of LLMs: An Evolutionary Tree and Graph for 15,821 Large Language Models

https://doi.org/10.48550/arXiv.2307.09793

Constellation

https://constellation.sites.stanford.edu/

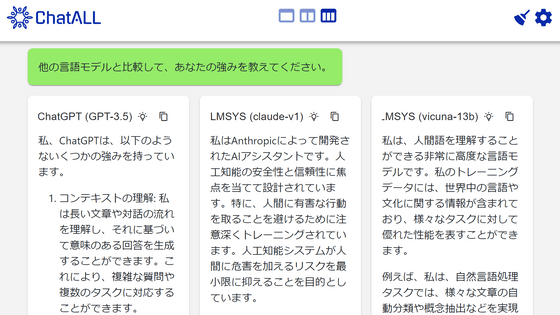

Open the 'Constellation' site linked above and click 'Access Constellation'.

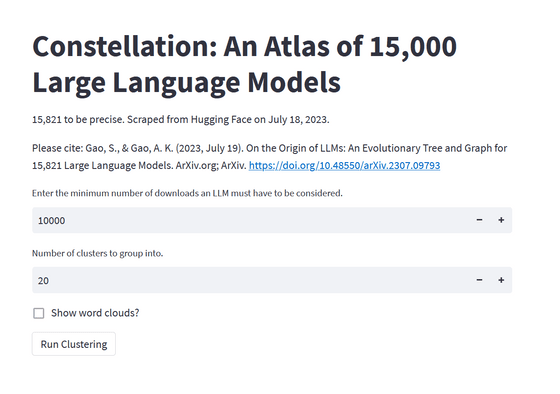

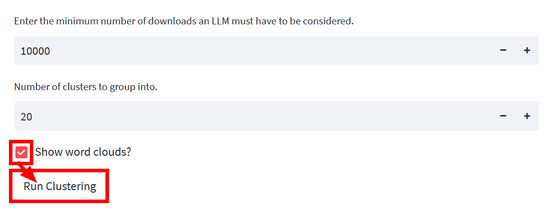

Next, specify which LLMs to display. The upper number is the minimum number of downloads: change it to display only models whose download count on Hugging Face exceeds the specified value, which narrows the view to popular LLMs. The lower number is the number of clusters, which specifies how many groups the LLMs are divided into. LLMs are grouped by similarity.
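To make the two settings concrete, here is a minimal sketch of what a download filter followed by grouping into a fixed number of clusters could look like. It is not Constellation's actual code: the sample records, the function names, the character trigram similarity, and the naive average-linkage merging are all assumptions chosen for illustration, and the threshold is treated as an inclusive minimum.

```python
# Hypothetical sample records; names and download counts are made up.
MODELS = [
    {"name": "example-gpt2-small", "downloads": 12000},
    {"name": "example-gpt2-large", "downloads": 8000},
    {"name": "demo-bert-base", "downloads": 9500},
    {"name": "demo-bert-large", "downloads": 3000},
    {"name": "tiny-test-model", "downloads": 40},
]


def filter_models(models, min_downloads):
    """Keep models with at least `min_downloads` downloads (inclusive, an assumption)."""
    return [m for m in models if m["downloads"] >= min_downloads]


def char_ngrams(name, n=3):
    """Return the set of character n-grams of a lowercased model name."""
    s = name.lower()
    return {s[i:i + n] for i in range(len(s) - n + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets (1.0 when both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_names(names, n_clusters):
    """Naive agglomerative clustering: repeatedly merge the two most similar groups."""
    grams = {name: char_ngrams(name) for name in names}
    clusters = [[name] for name in names]

    def similarity(c1, c2):
        # Average pairwise similarity between the members of two groups.
        total = sum(jaccard(grams[a], grams[b]) for a in c1 for b in c2)
        return total / (len(c1) * len(c2))

    while len(clusters) > max(n_clusters, 1):
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                s = similarity(clusters[i], clusters[j])
                if best is None or s > best[0]:
                    best = (s, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


popular = filter_models(MODELS, min_downloads=1000)
for group in cluster_names([m["name"] for m in popular], n_clusters=2):
    print(group)
```

Raising `min_downloads` shrinks the set of models that reach the clustering step, and `n_clusters` controls when merging stops, which mirrors the roles of the two numbers in the form.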

For this walkthrough, tick the checkbox to display the word cloud and click 'Run Clustering'. After a short wait, several graphs are displayed.

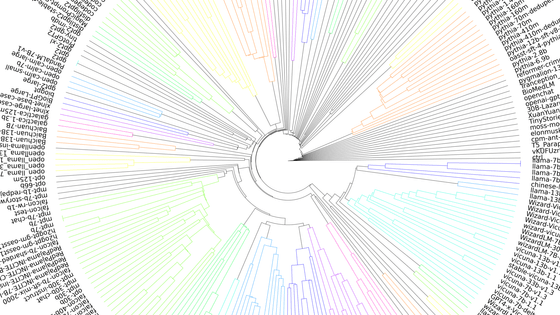

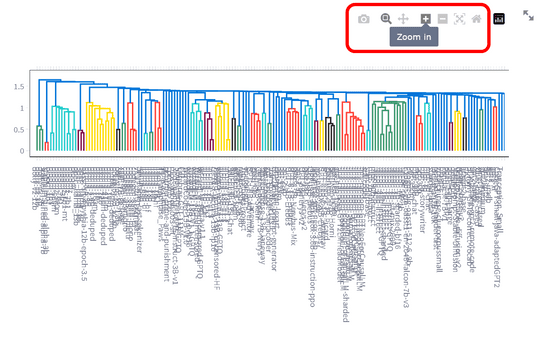

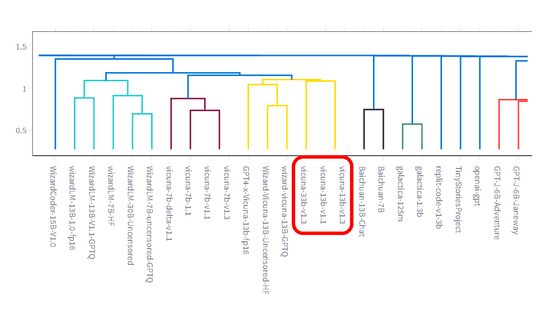

The first graph is a tree diagram that organizes all LLMs matching the filter. It is very hard to read at the default size, but you can zoom in to enlarge it.

For example, you can check which model 'Vicuna-13B', an LLM said to have accuracy comparable to ChatGPT and Google's Bard, was derived from, and which language models are similar to it.

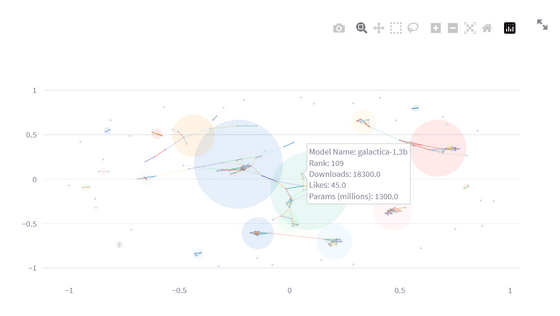

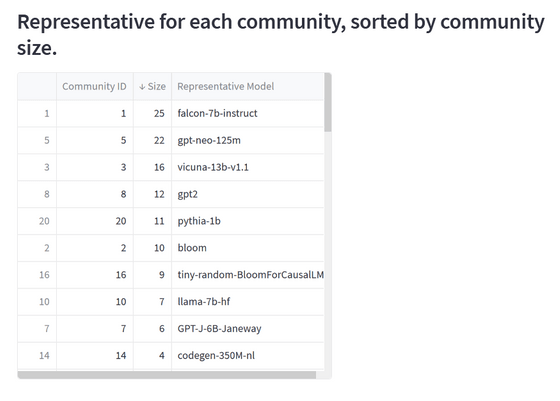

This graph shows the LLMs divided into several communities using

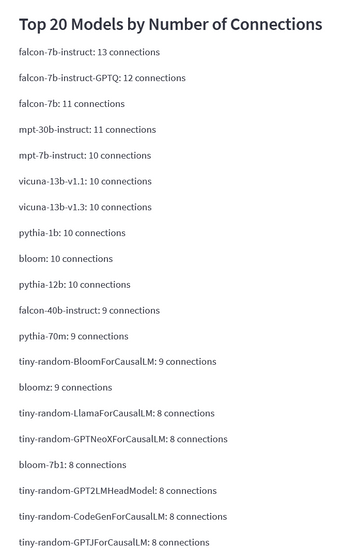

Next comes a list of the top 20 LLMs with the most connections to other LLMs. Open source and commercially available '

Then you'll see a list of LLMs sorted by community size. The largest is

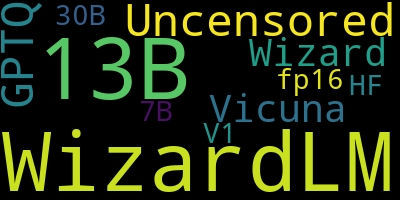

A word cloud is also displayed for each cluster, showing which model families stand out in it.
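A word cloud is usually drawn from term frequencies, so the data behind each cloud can be approximated by counting the words that appear in the model names of a cluster. The sketch below assumes exactly that. The cluster contents, the function names, and the tokenization rule (splitting on anything that is not a letter or digit) are hypothetical and are not taken from Constellation itself.

```python
import re
from collections import Counter

# Hypothetical clusters: cluster id -> model names (made up for illustration).
CLUSTERS = {
    0: ["example-gpt2-small", "example-gpt2-large"],
    1: ["demo-bert-base", "demo_bert_large"],
}


def name_tokens(name):
    """Split a model name into lowercase words on non-alphanumeric characters."""
    return [tok for tok in re.split(r"[^a-z0-9]+", name.lower()) if tok]


def cluster_word_counts(clusters):
    """Count how often each word occurs in the model names of every cluster."""
    return {
        cluster_id: Counter(tok for name in names for tok in name_tokens(name))
        for cluster_id, names in clusters.items()
    }


counts = cluster_word_counts(CLUSTERS)
for cluster_id, counter in counts.items():
    # The most frequent words would be drawn largest in a word cloud.
    print(cluster_id, counter.most_common(3))
```

Words that occur in many names of a cluster, such as a shared model family name, get the highest counts and would therefore dominate that cluster's cloud.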

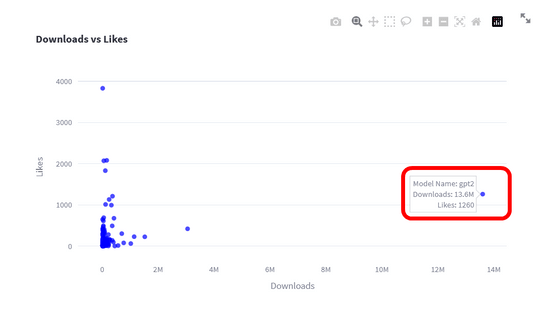

The last thing you see is a graph showing the number of downloads versus the number of likes. It was OpenAI's open source LLM '

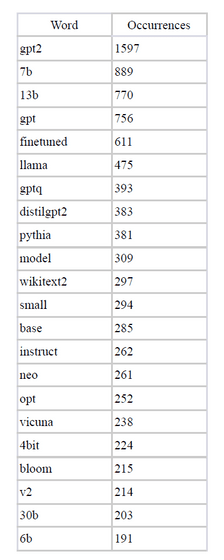

LLMs are often given similar names containing words such as 'GPT' or 'model'. The researchers who created this data library list in detail which words appear in LLM names and how often they appear in

in Software, Web Service, Review, Posted by log1p_kr