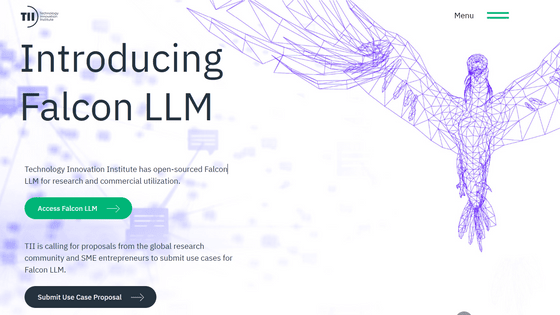

'Falcon', an open-source large language model that can be used commercially, has been released, and it has the highest performance among open-source models

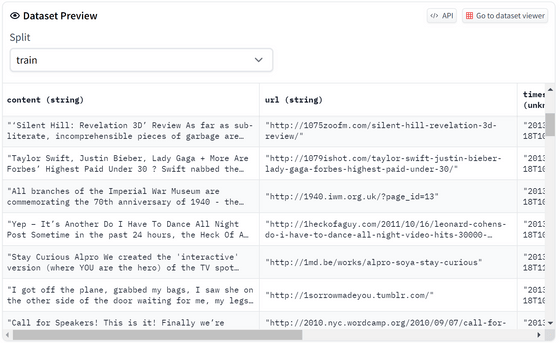

The Technology Innovation Institute, a research institute based in Abu Dhabi, the capital of the United Arab Emirates, has released the open-source large language model 'Falcon' and published it on Hugging Face, a site for sharing machine learning models and data.

Falcon LLM - Home

The Falcon has landed in the Hugging Face ecosystem

https://huggingface.co/blog/falcon

tiiuae/falcon-40b Hugging Face

https://huggingface.co/tiiuae/falcon-40b

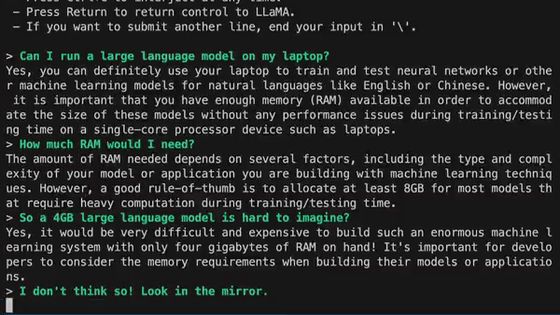

Two Falcon models have been released: 'Falcon-40B' with 40 billion parameters and 'Falcon-7B' with 7 billion parameters. The 40B model performs better thanks to its larger parameter count, but it requires 90GB of GPU memory to run, which puts it out of reach for most ordinary users. The 7B model, by contrast, runs with 15GB of GPU memory.
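The memory figures above are roughly what a back-of-the-envelope estimate gives. The sketch below assumes the weights are stored in a 16-bit format (2 bytes per parameter), which is an assumption and not something stated above, and it counts the weights only. The function name `weight_memory_gb` is made up for illustration.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory taken by the model weights alone, in GB (10^9 bytes).

    bytes_per_param=2.0 assumes 16-bit weights; this is an assumption.
    """
    return num_params * bytes_per_param / 1e9


falcon_40b_gb = weight_memory_gb(40e9)  # weights of a 40-billion-parameter model
falcon_7b_gb = weight_memory_gb(7e9)    # weights of a 7-billion-parameter model

print(f"40B weights: {falcon_40b_gb:.0f} GB")  # 80 GB
print(f"7B weights:  {falcon_7b_gb:.0f} GB")   # 14 GB
```

Under that assumption the weights account for about 80GB and 14GB respectively, so the quoted 90GB and 15GB would leave the rest for activations and the key/value cache during generation.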

Note that the 40B and 7B models released this time have only been pre-trained and need to be fine-tuned before being used in a product. For people who want to test the performance right away but find fine-tuning difficult, 'Falcon-40B-Instruct' and 'Falcon-7B-Instruct', which have been experimentally fine-tuned on chat-format data, are also provided.

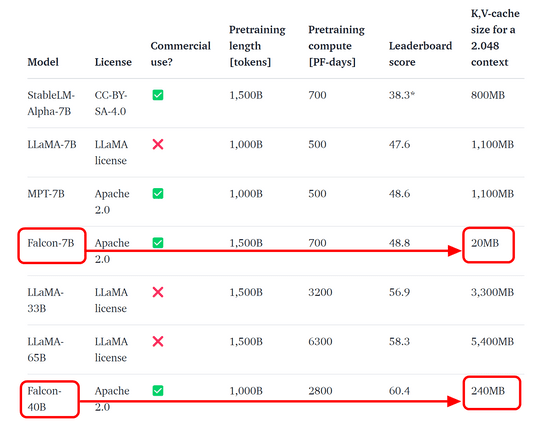

Hugging Face hosts a ranking called the 'Open LLM Leaderboard', where open-source large language models compete on benchmark scores so that you can see at a glance which model is superior. The newly released Falcon-40B model jumped to the top, overtaking the LLaMA models. The 7B model also has the best performance among models of similar size.

Falcon's high quality is attributed to the data used for training. Based on

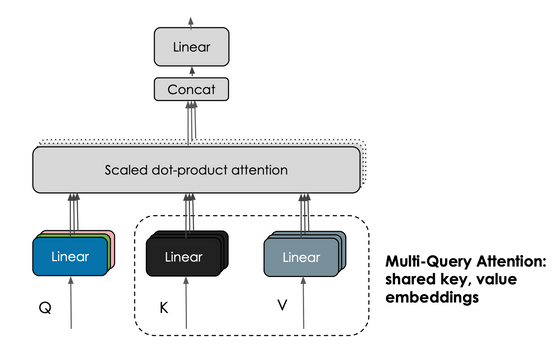

Another feature of Falcon is 'multi-query attention'. In the multi-head attention of the conventional Transformer architecture, each head has its own query, key, and value, but in multi-query attention all heads share a single key and value, while each head keeps its own query.
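To make the idea concrete, here is a minimal NumPy sketch of multi-query attention with a causal mask. It is an illustration under stated assumptions, not Falcon's actual implementation: the function name, the weight shapes, and the toy dimensions are all made up, and the output projection that normally follows attention is omitted. Each head gets its own slice of the query projection, while a single key projection and a single value projection are shared by every head.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_query_attention(x, w_q, w_k, w_v, n_heads):
    """Causal multi-query attention (illustrative sketch).

    x:   (seq, d_model) input sequence
    w_q: (d_model, n_heads * d_head) query projection, one slice per head
    w_k: (d_model, d_head) key projection shared by all heads
    w_v: (d_model, d_head) value projection shared by all heads
    """
    seq = x.shape[0]
    d_head = w_k.shape[1]

    # Per-head queries: (n_heads, seq, d_head)
    q = (x @ w_q).reshape(seq, n_heads, d_head).transpose(1, 0, 2)
    # One key and one value tensor for every head: (seq, d_head)
    k = x @ w_k
    v = x @ w_v

    # Scaled dot-product scores, broadcast over heads: (n_heads, seq, seq)
    scores = q @ k.T / np.sqrt(d_head)
    # Causal mask: position i may not attend to positions j > i.
    future = np.triu(np.ones((seq, seq), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)

    weights = softmax(scores, axis=-1)
    out = weights @ v  # (n_heads, seq, d_head)
    # Concatenate the heads back into (seq, n_heads * d_head).
    return out.transpose(1, 0, 2).reshape(seq, n_heads * d_head)


# Toy dimensions chosen only for the example.
rng = np.random.default_rng(0)
seq, d_model, n_heads, d_head = 5, 16, 4, 4
x = rng.normal(size=(seq, d_model))
w_q = rng.normal(size=(d_model, n_heads * d_head))
w_k = rng.normal(size=(d_model, d_head))
w_v = rng.normal(size=(d_model, d_head))

out = multi_query_attention(x, w_q, w_k, w_v, n_heads)
print(out.shape)  # (5, 16)
```

Because `k` and `v` have no head dimension, only one key and one value vector per token has to be kept around during generation, regardless of how many heads there are.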

Adopting multi-query attention reduces the size of the key and value cache kept during inference to as little as 1/100, which lowers the amount of memory needed to run the model.
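The size of that reduction follows from simple arithmetic: the cache stores one key and one value vector per token, per layer, per key/value head, so going from many key/value heads to one shrinks it by a factor equal to the number of heads. The sketch below uses a hypothetical configuration (32 layers, 64 heads, head dimension 64, 2,048 tokens, 16-bit values), not Falcon's actual one, and the function name `kv_cache_bytes` is made up for illustration.

```python
def kv_cache_bytes(n_layers, n_kv_heads, d_head, seq_len, batch=1, bytes_per_value=2):
    """Bytes needed to cache keys and values for one generation run.

    The leading factor 2 accounts for storing both a key and a value tensor.
    """
    return 2 * n_layers * n_kv_heads * d_head * seq_len * batch * bytes_per_value


# Hypothetical model configuration, chosen only for illustration.
n_layers, n_heads, d_head, seq_len = 32, 64, 64, 2048

multi_head = kv_cache_bytes(n_layers, n_heads, d_head, seq_len)  # one K/V per head
multi_query = kv_cache_bytes(n_layers, 1, d_head, seq_len)       # one shared K/V

print(multi_head // 2**20, "MiB")   # 1024 MiB
print(multi_query // 2**20, "MiB")  # 16 MiB
print(multi_head // multi_query)    # 64
```

In this toy configuration the cache shrinks by a factor of 64; a reduction on the order of 1/100 would correspond to a model with roughly a hundred attention heads.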

Hugging Face hosts a page where you can try Falcon-40B, but at the time of writing it was unavailable because of errors caused by heavy traffic.

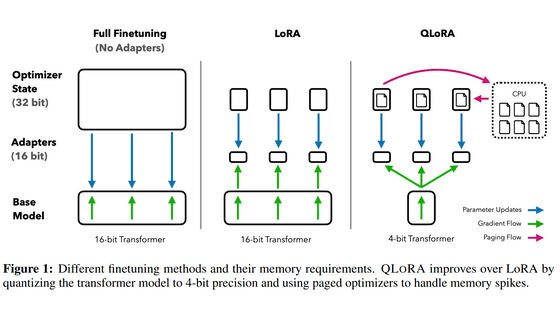

The Hugging Face article also introduces QLoRA as a method for fine-tuning huge models with more than 10 billion parameters. QLoRA is explained in the following article.

A method 'QLoRA' that makes it possible to train a language model with a large number of parameters even if the GPU memory is small has appeared, what kind of method is it? -GIGAZINE


in Software, Posted by log1d_ts