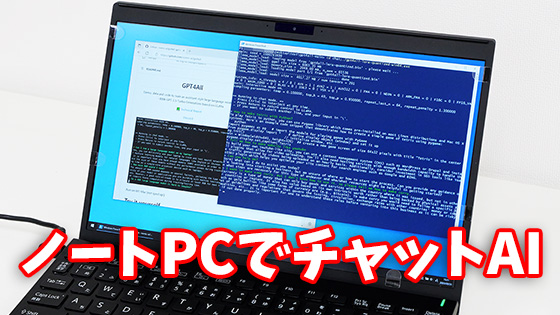

Nomic AI announces 'GPT4All', a free 7-billion-parameter chatbot that runs on laptops

Nomic AI has announced 'GPT4All', a chatbot that can run on a laptop PC. It is built on Meta's large language model LLaMA and trained on data generated with GPT-3.5-Turbo.

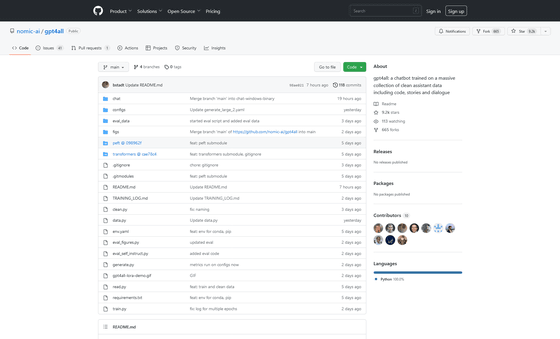

GitHub - nomic-ai/gpt4all: gpt4all: a chatbot trained on a massive collection of clean assistant data including code, stories and dialogue

https://github.com/nomic-ai/gpt4all

GPT4All: Training an Assistant-style Chatbot with Large Scale Data Distillation from GPT-3.5-Turbo

(PDF file)

Today we're releasing GPT4All, an assistant-style chatbot distilled from 430k GPT-3.5-Turbo outputs that you can run on your laptop. pic.twitter.com/VzvRYPLfoY

— Nomic AI (@nomic_ai) March 28, 2023

GPT4All: Running an Open-source ChatGPT Clone on Your Laptop | by Maximilian Strauss | Mar, 2023 | Better Programming

https://betterprogramming.pub/gpt4all-running-an-open-source-chatgpt-clone-on-your-laptop-71ebe8600c71

Nomic AI first used GPT-3.5-Turbo to collect approximately 1 million question-answer pairs.

First, we collected a training dataset of 1 million prompt-response pairs from GPT-3.5-Turbo on a variety of topics. We are publicly releasing all of this data alongside GPT4All. https://t.co/XxCljkO0uO

— Nomic AI (@nomic_ai) March 28, 2023

Drawing on lessons from Stanford University's earlier Alpaca project, Nomic AI focused on data preparation and curation, organizing the collected pairs with a tool called Atlas. Low-diversity responses were removed so that the training data covers a wide range of topics, leaving 437,605 pairs after curation.

Next, we used Atlas to curate the data. We removed low diversity responses, and ensured that the training data covered a variety of topics. Explore the full train set on Atlas: https://t.co/RQ4lDSIocH pic.twitter.com/GDgZ6wQ0pK

— Nomic AI (@nomic_ai) March 28, 2023

Nomic AI then trained several models fine-tuned from LLaMA 7B. The model in the initial release was trained with LoRA (low-rank adaptation). Compared with the open-source 'Alpaca-LoRA' model, it achieved consistently lower perplexity (lower is better).

We then benched our trained model against the best open source alpaca-lora we could find on @huggingface (tloen/alpaca-lora-7b by @ecjwg). Our model achieves consistently lower perplexity! pic.twitter.com/5VJPXzPLu4

— Nomic AI (@nomic_ai) March 28, 2023

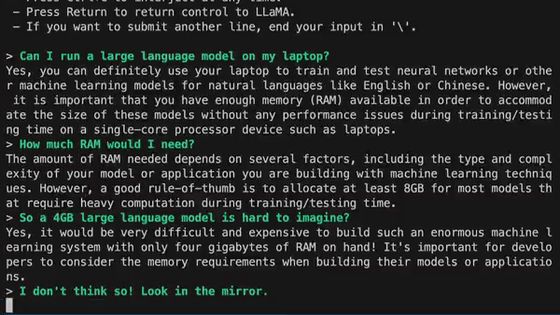
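Perplexity measures how well a language model predicts held-out text: it is the exponential of the average negative log-probability the model assigns to each actual next token, so a model that is less "surprised" by the text scores lower. The minimal Python sketch below shows only the calculation; the function name `perplexity` and the per-token probabilities are made-up illustration values, not Nomic AI's evaluation code or benchmark figures.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp(-mean natural-log probability per token).

    token_log_probs: log-probabilities the model assigned to each actual
    next token of a held-out text (hypothetical inputs for illustration).
    """
    if not token_log_probs:
        raise ValueError("need at least one token")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)

# Hypothetical probabilities two models assign to the same 4-token text.
model_a = [math.log(p) for p in (0.5, 0.25, 0.5, 0.25)]
model_b = [math.log(p) for p in (0.25, 0.125, 0.25, 0.125)]

print(round(perplexity(model_a), 2))  # 2.83
print(round(perplexity(model_b), 2))  # 5.66
```

Perplexity can be read as the number of equally likely choices the model is effectively picking between at each step, so here `model_a` is the less uncertain of the two.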

GPT4All is a lightweight, open-source clone of ChatGPT. Maximilian Strauss, writing in Better Programming, says, "The appeal of GPT4All lies in the release of the 4-bit quantized model." Quantization means that parts of the model run at reduced numerical precision, producing a more compact model that can run on consumer-level devices without dedicated hardware.
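To make the idea of 4-bit quantization concrete, the sketch below uses a generic blockwise "absmax" scheme: each block of weights shares one floating-point scale, and every weight is stored as a small integer that fits in 4 bits. The function names (`quantize_4bit`, `dequantize_4bit`, `weight_memory_gb`) and the block size of 32 are illustrative assumptions, not necessarily the exact format GPT4All ships. A back-of-the-envelope memory estimate for a 7-billion-parameter model is included as well.

```python
import random

def quantize_4bit(weights, block_size=32):
    """Hypothetical blockwise symmetric absmax quantization to 4-bit ints.

    Each block of `block_size` weights shares one float scale; every weight
    is stored as an integer in [-7, 7], which fits in 4 bits.
    """
    q, scales = [], []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        amax = max(abs(w) for w in block)
        scale = amax / 7 if amax > 0 else 1.0  # map the largest magnitude to 7
        scales.append(scale)
        # Round to the nearest integer and clamp to guard against float error.
        q.extend(max(-7, min(7, round(w / scale))) for w in block)
    return q, scales

def dequantize_4bit(q, scales, block_size=32):
    """Recover approximate float weights from 4-bit codes and per-block scales."""
    return [v * scales[i // block_size] for i, v in enumerate(q)]

def weight_memory_gb(n_params, bits_per_weight):
    """Storage for the weights alone (ignores scales, activations, runtime)."""
    return n_params * bits_per_weight / 8 / 1e9

random.seed(0)
w = [random.uniform(-1.0, 1.0) for _ in range(64)]
q, s = quantize_4bit(w)
w_hat = dequantize_4bit(q, s)
max_err = max(abs(a - b) for a, b in zip(w, w_hat))  # at most scale / 2 per block

print(weight_memory_gb(7e9, 16))  # 14.0 (16-bit weights)
print(weight_memory_gb(7e9, 4))   # 3.5  (4-bit weights)
```

For a 7-billion-parameter model, moving from 16-bit to 4-bit weights cuts weight storage from about 14 GB to about 3.5 GB (plus a small overhead for the per-block scales), at the cost of a bounded rounding error in each weight.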

One user reported that it runs on an M1 iMac with 8GB of memory.

#GPT4All Seems to work just like that! I am running this on iMac M1 8GB. Sometimes it's thinking, but it seems to just run amazing :) pic.twitter.com/MHymPLXckj

— BLENDER SUSHI MONK-AI 24/7 Blend Remix 4 All (@jimmygunawanapp) March 29, 2023

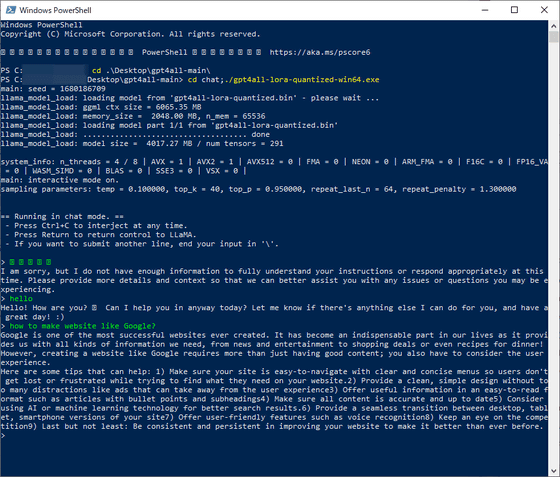

In our own testing, we confirmed that it also runs on a VAIO SX12.

The model is available free of charge under a license that permits research use only; commercial use is prohibited. Note also that OpenAI's terms of use for GPT-3.5-Turbo, which was used to generate the training data, prohibit developing models that compete commercially with OpenAI.


in Software, Posted by logc_nt