No special equipment required: Epic Games' demo of "MetaHuman Animator," which turns footage shot on nothing but an iPhone into a completely different face in just a few minutes, is amazing

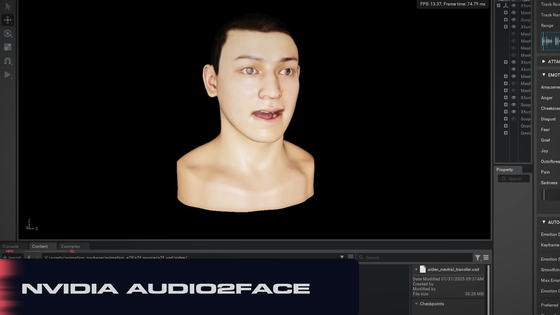

Facial capture, which converts a performer's face into 3DCG, is widely used in the game industry and elsewhere. However, it requires special hardware, heavy computer processing, and manual fine-tuning by artists, so it is not easy for a studio to do. Epic Games has developed a new tool, "MetaHuman Animator," that aims to transform this labor-intensive, time-consuming workflow, and has released a demo video.

New MetaHuman Animator feature set to easily apply high-fidelity performance capture to MetaHuman - Unreal Engine

MetaHuman - Real-Time Facial Model Animation Demo | State of Unreal 2023 - YouTube

Epic's new motion-capture animation tech has to be seen to be believed | Ars Technica

https://arstechnica.com/gaming/2023/03/epics-new-motion-capture-animation-tech-has-to-be-seen-to-be-believed/

Epic Games' "MetaHuman Creator" makes it easy to build high-definition human 3DCG models, but making those models move like real people is very difficult. Animating a 3DCG model convincingly is hard in the first place, and even a skilled studio can spend anywhere from several weeks to several months on it. MetaHuman Animator, a plug-in for MetaHuman Creator, was developed to address this: by processing footage shot on an iPhone or a stereo head-mounted camera, it can transfer the movement from live action to the 3DCG model with little effort.

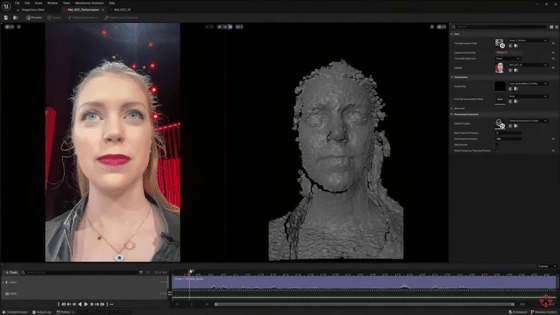

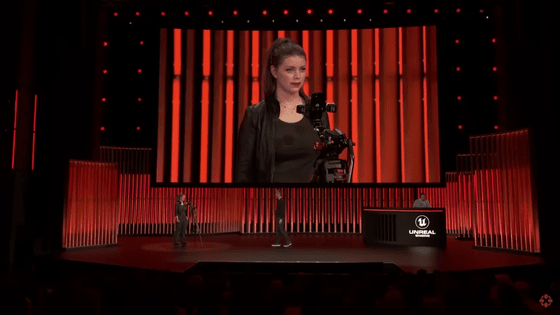

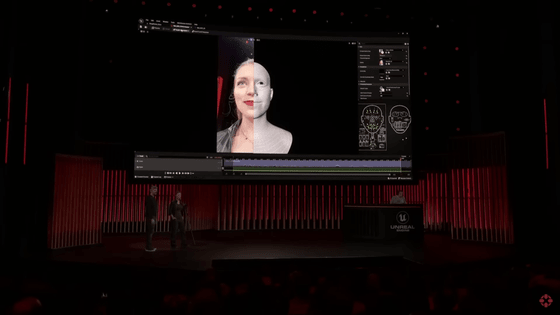

The demonstration was performed live using an iPhone. Melina Juergens, who played Senua in Hellblade: Senua's Sacrifice, took part in the shoot.

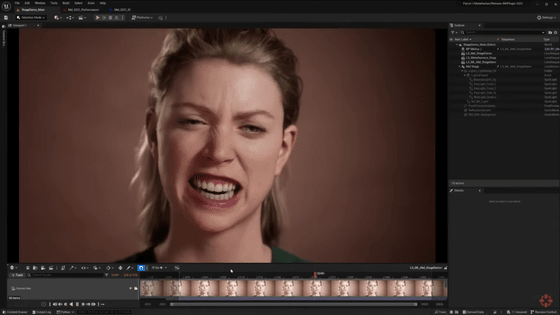

She made a smiling face, an angry face, and other expressions.

The footage is transferred to a machine with high-end AMD hardware for processing. According to the demo, processing starts with "just one click of a button."

Once processing is complete, the next step is export. For the 10-second clip shot here, export took only a few seconds.

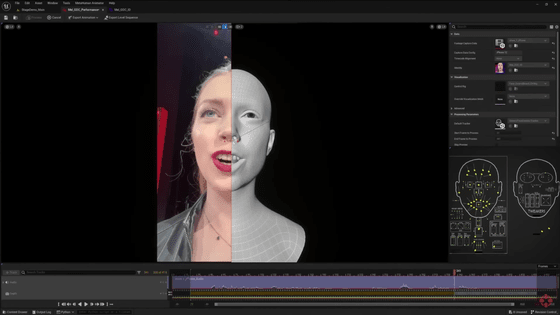

In the resulting animation, the expressions Ms. Juergens performed earlier are reproduced faithfully. Extracting the motion from the live-action footage, applying it to the 3DCG model, and animating it took about 2 minutes in total, which is extremely fast.

A performance captured once can also be applied to other faces. In addition, MetaHuman Animator can infer realistic tongue movement from the recorded audio.

Vladimir Mastilovic of Epic Games, who presented the demo, said, "We guarantee that the resulting animation will always move the same way regardless of the underlying facial logic, and that it won't break when transferred to another face." Because the tool focuses on generating animation that accurately matches the performance, it appears to reproduce fine details well.

MetaHuman Animator is scheduled for release in summer 2023. "By making this tool widely available, we expect people to work faster and see results sooner, democratizing complex character-creation techniques," Mastilovic said.
