Runway releases 'Act-One,' a tool that can animate AI-generated characters with realistic facial expressions

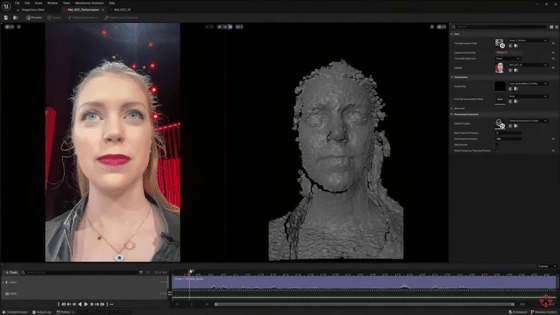

AI development company Runway has released 'Act-One,' an AI tool that transfers a person's facial expressions to an AI-generated character using nothing more than a video of that person. Act-One is available to anyone who has access to Runway's video generation AI model 'Gen-3 Alpha.'

Runway Research | Introducing Act-One

https://runwayml.com/research/introducing-act-one

Introducing Act-One | Runway - YouTube

Introducing, Act-One. A new way to generate expressive character performances inside Gen-3 Alpha using a single driving video and character image. No motion capture or rigging required.

— Runway (@runwayml) October 22, 2024

Learn more about Act-One below.

(1/7) pic.twitter.com/p1Q8lR8K7G

Giving facial expressions to 3D models, a task known as 'facial animation,' has traditionally required complex setups such as motion capture equipment and filming from multiple angles. The video below shows the filming of 'Dawn of the Planet of the Apes,' in which actors wear numerous motion capture sensors while performing.

Turning Human Motion-Capture into Realistic Apes in Dawn of the Planet of the Apes | WIRED - YouTube

However, in recent years, various AI companies have been developing AI tools that enable easy facial animation, and Runway's Act-One is one of them.

Act-One is an AI tool that focuses on modeling facial expressions. By simply capturing footage using a smartphone camera or other device, it is possible to transfer the facial expressions of a subject onto an AI-generated character.

Act-One allows you to faithfully capture the essence of an actor's performance and transpose it to your generation. Where traditional pipelines for facial animation involve complex, multi-step workflows, Act-One works with a single driving video that can be shot on something as… pic.twitter.com/Wq8Y8Cc1CA

— Runway (@runwayml) October 22, 2024

Runway says of Act-One, 'Act-One requires no motion capture or character rigging and can convert a single video input into countless different character designs and different styles.'

Without the need for motion-capture or character rigging, Act-One is able to translate the performance from a single input video across countless different character designs and in many different styles.

— Runway (@runwayml) October 22, 2024

(3/7) pic.twitter.com/n5YBzHHbqc

One of Act-One's strengths is its ability to deliver realistic, cinematic-quality output across a variety of camera angles and focal lengths, allowing even small creators to produce complex character performances that would otherwise require expensive equipment and elaborate workflows. According to Runway, all that is needed to create emotive content is a smartphone camera and a single actor reading a script.

One of the models strengths is producing cinematic and realistic outputs across a robust number of camera angles and focal lengths. Allowing you to generate emotional performances with previously impossible character depth opening new avenues for creative expression.

— Runway (@runwayml) October 22, 2024

(4/7) pic.twitter.com/JG1Fvj8OUm

'We're past the point where the question was whether a generative model could produce consistent video; good models are now the new baseline,' said Cristobal Valenzuela, CEO of Runway. 'The difference is in how we think about applications and use cases, and ultimately what we build.'

We are now beyond the threshold of asking ourselves if generative models can generate consistent videos. A good model is now the new baseline. Generating pixels with prompts has become a commodity. The difference lies (as it has always been) in what you do with a model - how you… https://t.co/s0wVvPga7j

— Cristóbal Valenzuela (@c_valenzuelab) October 22, 2024

Because Act-One can output realistic facial expressions, Runway has put a comprehensive set of safety measures in place. These include safeguards that automatically detect and block attempts to generate unauthorized content of public figures and celebrities, and technical tools to verify that users have the right to use the voices they work with. Runway also says it continuously monitors for misuse to ensure the tools are being used responsibly.

Act-One will be gradually rolled out to users of Runway's video generation AI model 'Gen-3 Alpha' starting October 22, 2024.

Runway releases video generation AI model 'Gen-3 Alpha', anyone can generate 5-10 second videos within a few days - GIGAZINE

Runway announced a partnership with film and television production company Lionsgate in September 2024 to use AI in film production. 'Runway is a visionary, best-in-class partner that will help us leverage AI to develop cutting-edge, capital-efficient content creation opportunities. We see AI as an excellent tool to enhance and complement our current operations,' said Michael Burns, vice chairman of Lionsgate, in a release.
