Introducing a system that lets anyone become a VTuber with a rich range of emotions (joy, anger, sorrow, and pleasure) from just one image

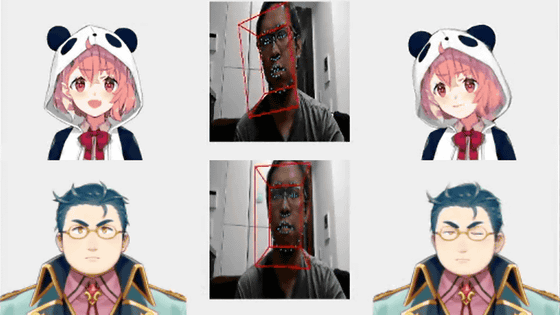

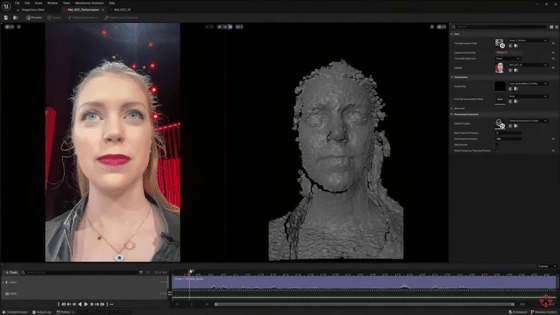

On February 2, 2021, Pramuk Kangaan, a software engineer at Google, announced that he had developed a system that can create a variety of facial expressions from a single character image. The system lets you freely move the character's eyes, mouth, irises, and more, and it can also mirror your own facial movements in the character's expressions in real time.

Talking Head Anime from a Single Image 2: More Expressive (Full Version)

I created a system that generates more expressive animation using a single character image.

https://pkhungurn.github.io/talking-head-anime-2/index-ja.html

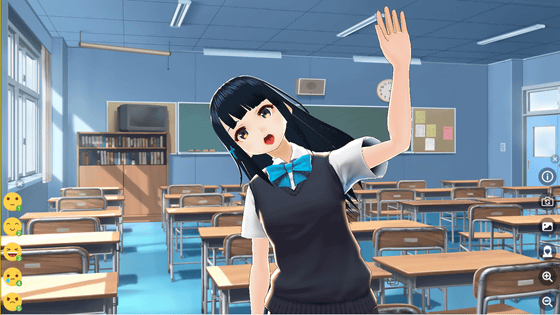

I created a system (v2) that allows you to become a VTuber with just one image. Just by preparing a frontal image of the character, you can manipulate the eyebrows, eyes, iris, and mouth, and even rotate the face. The characters are more expressive and the lip syncing looks good. For more information, visit https://t.co/0UF9sJnQkA . #Machine Learning #Deep Learning #Vtuber pic.twitter.com/LQazpLh5rm

— dragonmeteor (aka masterspark) (@dragonmeteor) February 3, 2021

An overview of the system and how to use it to become a VTuber is given in the following video.

I created a system that allows you to become an expressive VTuber with a single image - Nico Nico Douga

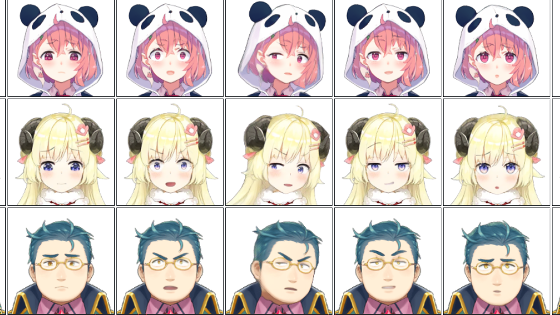

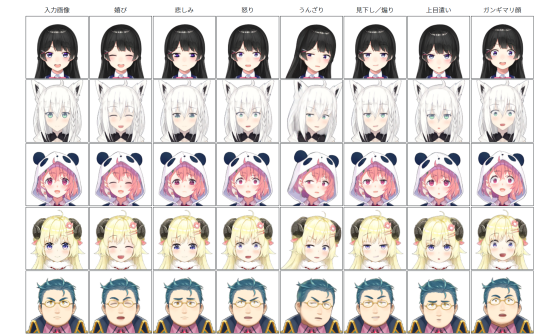

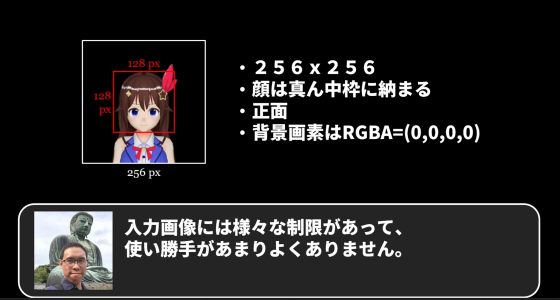

Kangaan began developing this system in 2019 with the goal of making it easier to become a VTuber. The system takes a single image as input and outputs that image with various facial expressions, but at first it could only rotate the character's face and open and close the eyes and mouth. At that time, the pose was specified by only six parameters, so the character could make only six kinds of movements.

In order to increase the character's facial expressions, Kangaan repeated

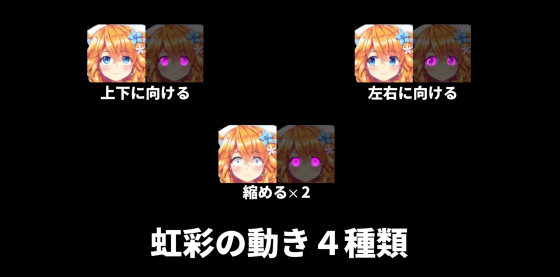

4 types of iris movements

12 types of eyebrow movements

11 types of mouth movements

As a result, the character's pose is now specified by 42 parameters, allowing it to take on many finely detailed facial expressions.
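To make the idea of a pose vector more concrete, here is a minimal sketch that treats the pose described above as a flat list of 42 numbers split into named groups. The group sizes for iris, eyebrow, and mouth come from the article; the `other` bucket, the function names, and the clamping of values to the range 0.0 to 1.0 are assumptions made for illustration and are not taken from the author's implementation.

```python
# Hypothetical layout of a 42-component pose vector (illustration only).
GROUP_SIZES = {"iris": 4, "eyebrow": 12, "mouth": 11}  # counts given in the article
TOTAL_COMPONENTS = 42                                   # total given in the article


def build_layout(group_sizes, total):
    """Assign each named group a contiguous slice of the pose vector."""
    layout = {}
    start = 0
    for name, size in group_sizes.items():
        layout[name] = slice(start, start + size)
        start += size
    if start > total:
        raise ValueError("groups exceed the pose vector length")
    # Assumption: components the article does not break down are lumped together.
    layout["other"] = slice(start, total)
    return layout


def neutral_pose(total):
    """Return a pose vector with every component set to zero."""
    return [0.0] * total


def set_component(pose, layout, group, index, value):
    """Return a copy of pose with one component of a group set.

    Assumption: values are clamped to the range [0.0, 1.0].
    """
    group_slice = layout[group]
    size = group_slice.stop - group_slice.start
    if not 0 <= index < size:
        raise IndexError(f"{group} has only {size} components")
    new_pose = list(pose)
    new_pose[group_slice.start + index] = max(0.0, min(1.0, value))
    return new_pose


LAYOUT = build_layout(GROUP_SIZES, TOTAL_COMPONENTS)
# Example: set the first mouth component to 0.8 and leave everything else neutral.
pose = set_component(neutral_pose(TOTAL_COMPONENTS), LAYOUT, "mouth", 0, 0.8)
```

In a layout like this, an expression is just a particular combination of component values, which is why going from 6 to 42 components widens the range of faces the character can make.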

The system works with both male and female characters, and it can animate eyes that are partly covered by hair or seen through glasses without any problems. Also, in the previous system, if the input image had a closed mouth, the mouth in the output image could not be opened, but this time the mouth opens reasonably well.

This system also uses an iOS app called

Yet Another Tool to Transfer Human Facial Movement to Anime Characters in Real Time - YouTube

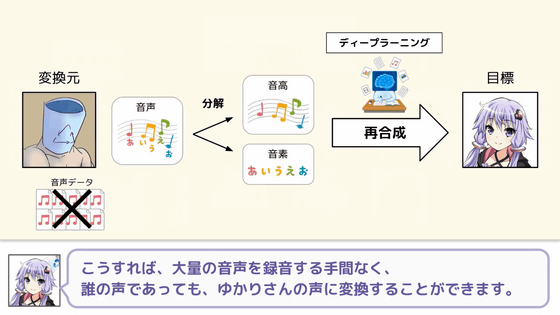

It is also possible to use recorded footage to transfer facial expressions to any character. For example, you can have a VTuber character recite "Uirō-uri", a kabuki monologue famous for its long lines...

I recited "Uirō-uri" and transferred the movement to a VTuber image. - YouTube

It is also possible to have the character sing songs.

I lip-synced "Baka Mitai" and had a VTuber's image sing it. - YouTube

According to Kangaan, the system still has points that need improvement, such as "only movements that the 3D model can perform can be reproduced in the image" and "there are restrictions on the input image." He says he will address these issues in his next project.
