'DEGAS' technology announced for creating highly accurate 3D avatars that reproduce the movements and expressions of real people

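A research team has announced DEGAS, which uses technology that generates 3D images from 2D data to create highly accurate 3D avatars that reproduce the movements and expressions of real people.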

[2408.10588] DEGAS: Detailed Expressions on Full-Body Gaussian Avatars

https://arxiv.org/abs/2408.10588

Project page of DEGAS (Detailed Expressions on Full-body Gaussian Avatars)

https://initialneil.github.io/DEGAS

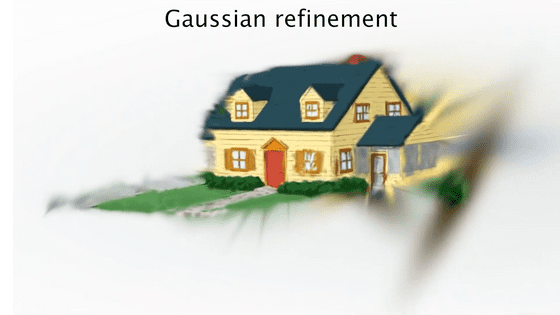

DEGAS is a modeling method based on 3D Gaussian Splatting (3DGS), a neural rendering technique. 3DGS represents a 3D scene as millions of overlapping Gaussian distributions. The Gaussians are first given suitable initial values, and each one is then adjusted step by step so that the rendered result matches real images, which improves accuracy.
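
To make the render-and-adjust idea concrete, here is a toy 1D sketch in Python. It is not DEGAS code or the reference 3DGS rasterizer: the function names are hypothetical, the Gaussians are 1D rather than 3D Gaussians projected onto the screen, colors are single grayscale values, and only the colors are optimized while the geometry stays fixed. It does follow the two steps described above: each pixel is rendered by alpha-compositing depth-sorted Gaussians front to back, and the parameters start from a rough initial guess and are refined by gradient descent until the render matches a target image.

```python
import math
from typing import Dict, List, Sequence

Gaussian = Dict[str, float]  # hypothetical keys: mean, scale, opacity, depth


def gaussian_alpha(x: float, g: Gaussian) -> float:
    """Opacity of one 1D Gaussian at pixel position x."""
    return g["opacity"] * math.exp(-0.5 * ((x - g["mean"]) / g["scale"]) ** 2)


def blend_weights(x: float, gaussians: Sequence[Gaussian]) -> List[float]:
    """Front-to-back compositing weights w_i = alpha_i * prod_{j in front of i} (1 - alpha_j).

    Gaussians are visited in order of increasing depth; weights are returned in the
    original list order so they line up with the per-Gaussian colors.
    """
    order = sorted(range(len(gaussians)), key=lambda i: gaussians[i]["depth"])
    weights = [0.0] * len(gaussians)
    transmittance = 1.0  # fraction of light not yet absorbed by nearer Gaussians
    for i in order:
        alpha = gaussian_alpha(x, gaussians[i])
        weights[i] = alpha * transmittance
        transmittance *= 1.0 - alpha
    return weights


def render(pixels: Sequence[float], gaussians: Sequence[Gaussian], colors: Sequence[float]) -> List[float]:
    """Render a 1D grayscale image: pixel value = sum_i w_i * color_i."""
    return [sum(w * c for w, c in zip(blend_weights(x, gaussians), colors)) for x in pixels]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two images."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def fit_colors(pixels, gaussians, target, colors, lr=0.5, steps=200):
    """Gradient descent on per-Gaussian colors so that the render matches `target`.

    Geometry is frozen for brevity. Each pixel is linear in the colors, so
    dL/dc_i = (2/N) * sum_p (rendered_p - target_p) * w_{p,i}.
    """
    colors = list(colors)
    weights = [blend_weights(x, gaussians) for x in pixels]  # constant because geometry is fixed
    n = len(pixels)
    for _ in range(steps):
        residual = [sum(w * c for w, c in zip(wp, colors)) - t for wp, t in zip(weights, target)]
        grads = [2.0 / n * sum(r * wp[i] for r, wp in zip(residual, weights)) for i in range(len(colors))]
        colors = [c - lr * g for c, g in zip(colors, grads)]
    return colors


# Usage: recover known colors from a synthetic "photo" rendered with them.
pixels = [i / 10 for i in range(11)]
gaussians = [
    {"mean": 0.3, "scale": 0.15, "opacity": 0.9, "depth": 1.0},
    {"mean": 0.7, "scale": 0.2, "opacity": 0.8, "depth": 2.0},
]
target = render(pixels, gaussians, [0.2, 0.9])  # the image we want to match
initial = [0.5, 0.5]                            # rough initial state
fitted = fit_colors(pixels, gaussians, target, initial)
```

Because each pixel is linear in the colors, the gradient has a closed form in this toy; full 3DGS instead differentiates through its rasterizer to update positions, covariances, opacities, and colors together.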

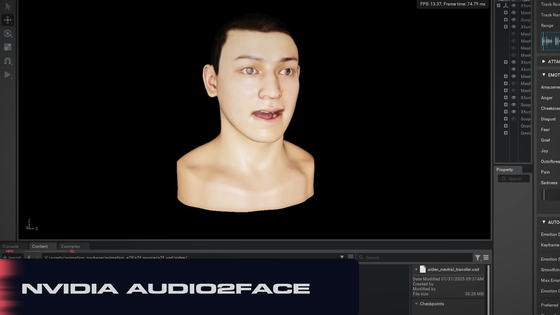

In developing DEGAS, the research team used an expression latent space trained only on 2D facial images, instead of the 3D morphable models commonly used for high-precision 3D head avatars. This bridges the gap between 2D facial expressions and 3D avatars: by combining the rendering capability of 3DGS with the rich expressiveness of the latent space, DEGAS produces realistic renders with subtle, accurate expressions.
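
One way to picture "driving" a Gaussian avatar with an expression latent space is a decoder that maps a latent vector to per-Gaussian changes. The sketch below is hypothetical and far simpler than anything described in the paper: a fixed linear map stands in for a learned network, the latent vector `z` is supplied directly rather than produced by an encoder trained on 2D faces, and only Gaussian centers are moved. The names `decode_offsets` and `animate`, and the two latent directions, are made up for illustration.

```python
from typing import List, Sequence

Vec3 = List[float]


def decode_offsets(z: Sequence[float], weight: Sequence[Sequence[float]], num_gaussians: int) -> List[Vec3]:
    """Hypothetical linear decoder: expression latent z -> one xyz offset per Gaussian.

    `weight` has 3 * num_gaussians rows, each of length len(z). A real system would
    use a learned neural network here; a fixed matrix keeps the sketch readable.
    """
    if len(weight) != 3 * num_gaussians or any(len(row) != len(z) for row in weight):
        raise ValueError("weight must be (3 * num_gaussians) x len(z)")
    flat = [sum(w * zi for w, zi in zip(row, z)) for row in weight]
    return [flat[3 * k:3 * k + 3] for k in range(num_gaussians)]


def animate(base_positions: Sequence[Vec3], z: Sequence[float], weight: Sequence[Sequence[float]]) -> List[Vec3]:
    """Deform the neutral Gaussian centers by the offsets decoded from z."""
    offsets = decode_offsets(z, weight, len(base_positions))
    return [[p + o for p, o in zip(pos, off)] for pos, off in zip(base_positions, offsets)]


# Two face Gaussians and a 2-D latent with two hypothetical expression directions.
base = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
weight = [
    [0.0, 0.0], [0.1, 0.0], [0.0, 0.0],   # Gaussian 0: moves up along z[0]
    [0.0, 0.0], [0.0, -0.2], [0.0, 0.0],  # Gaussian 1: moves down along z[1]
]
neutral = animate(base, [0.0, 0.0], weight)
expressive = animate(base, [1.0, 0.5], weight)
```

A zero latent leaves the neutral avatar unchanged, while moving along a latent direction shifts only the Gaussians tied to it; the same pattern could drive colors or opacities instead of positions.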

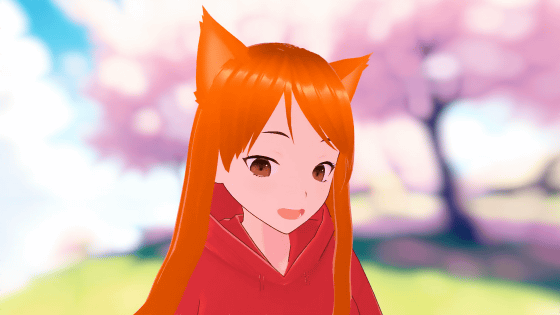

In the demo, the other five avatars follow the movements, expressions, and speech of the person in the upper left.

Facial expressions can also be generated from audio alone by using SadTalker, an open-source tool.


'In this paper, we argue that a facial expression latent space pre-trained on only 2D conversational faces is a good choice for recreating 3D avatars, opening up new possibilities for interactive realistic agents. Avatars modeled with DEGAS reproduce natural body movements and rich facial expressions, allowing them to be animated and rendered at real-time frame rates,' the research team said.
