A research paper suggests that the latest language models, such as 'GPT-3', have acquired a 'theory of mind', the ability to naturally infer what others are thinking


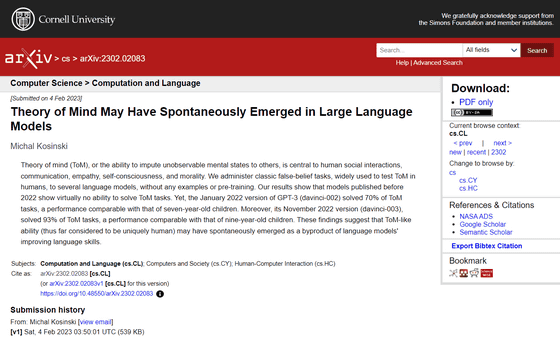

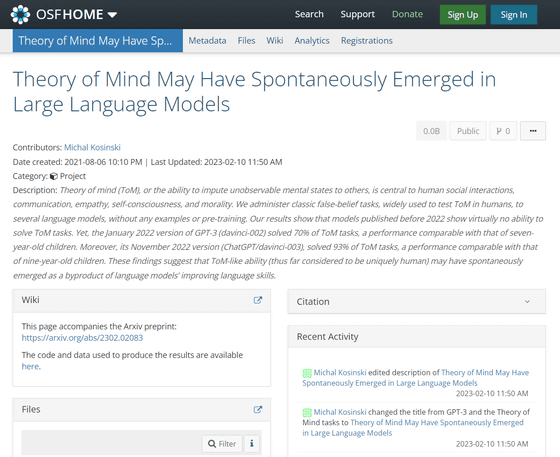

[2302.02083] Theory of Mind May Have Spontaneously Emerged in Large Language Models

https://arxiv.org/abs/2302.02083

Theory of Mind May Have Spontaneously Emerged in Large Language Models

(PDF file) https://arxiv.org/ftp/arxiv/papers/2302/2302.02083.pdf

The human ability to infer other people's mental states, such as their purposes, intentions, knowledge, beliefs, and doubts, is called 'theory of mind'. It is considered central to ethics.

'Theory of mind' develops early in life and is considered very important. As a result, people whose 'theory of mind' does not function normally are often found to have conditions such as autism, bipolar disorder, schizophrenia, or psychosis. Even great apes, considered the animals closest to humans in intelligence, reportedly score far below humans on 'theory of mind' tests.

In the past, RoBERTa, early versions of GPT-3, and custom-trained question-answering models have been applied to 'theory of mind' tests, but in these

However, Michal Kosinski of the Stanford Graduate School of Business argues that an ability like 'theory of mind' does not need to be explicitly built into AI. He considered it possible that AI could acquire a 'theory of mind' on its own.

In particular, Kosinski points to large language models as likely candidates for a naturally emerging 'theory of mind', because human language contains descriptions of mental states and differing beliefs, and features many characters with their own thoughts and desires.

Mr. Kosinski took two classic false-belief tasks that are widely used to measure theory of mind in humans and administered them to several language models, without presenting any examples or performing any prior training.

The experiment showed that language models released before 2022 had virtually no ability to solve the 'theory of mind' tasks. However, the January 2022 version of 'GPT-3' answered about 70% of the tasks correctly, a score comparable to that of a 7-year-old child. The November 2022 version of 'GPT-3' went further, answering 93% correctly, comparable to a 9-year-old child.

Commenting on the results, Mr. Kosinski said, "They suggest that 'theory of mind', which was thought to be unique to humans, may have been acquired by language models as a by-product of improving their language-processing ability."

The code and tasks Mr. Kosinski used in the experiment are publicly available at the following page.

OSF | Theory of Mind May Have Spontaneously Emerged in Large Language Models

https://osf.io/csdhb/
