Leak reveals OpenAI is quietly advancing 'Strawberry,' a new AI project formerly known as the math-solving AI 'Q*'

OpenAI is reportedly still actively working on 'Q* (Q Star),' an internal AI project rumored to represent a breakthrough in research toward artificial general intelligence (AGI).

Exclusive: OpenAI working on new reasoning technology under code name 'Strawberry' | Reuters

OpenAI's Q* Gets New Name, Project Strawberry: Report Says It Can Navigate Internet Autonomously With 'Deep Research' And Significantly Better Reasoning Capabilities - Microsoft (NASDAQ:MSFT) - Benzinga

Large language models are good at producing text that humans can understand, but they are relatively inaccurate at calculation and mathematical reasoning. They have also been shown to be more likely to give a wrong answer as a question gets longer. 'Q*' is a project being developed to address this problem.

What is the new AI 'Q* (Q Star)' developed by OpenAI speculated to be? - GIGAZINE

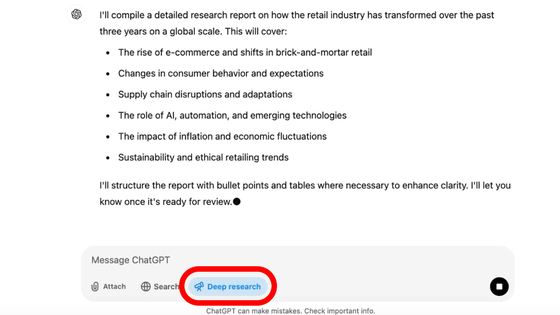

According to Reuters, 'Q*', which first surfaced in rumors around winter 2023, was still under development as of May 2024 under the internal codename 'Strawberry'. Internal documents seen by Reuters detail OpenAI's plans for Strawberry. These include using the Strawberry model so that AI can go beyond generating answers to queries: it would autonomously browse the web with the help of a 'Computer-Using Agent (CUA)' and take actions based on what it finds. Reuters also quoted a source as saying that the plans are underway.

Internally, Strawberry has been described as 'groundbreaking.' It promises to let AI plan ahead, take into account how the physical world works when forming answers, and reliably solve complex multistep problems.

Large language models can summarize text and write polished prose far faster than humans. However, they struggle with problems that humans grasp intuitively, such as spotting logical fallacies or playing tic-tac-toe. OpenAI researchers say that the reasoning ability needed to solve such problems is 'the key to achieving human or superhuman levels of intelligence.'

in Software, Posted by log1p_kr