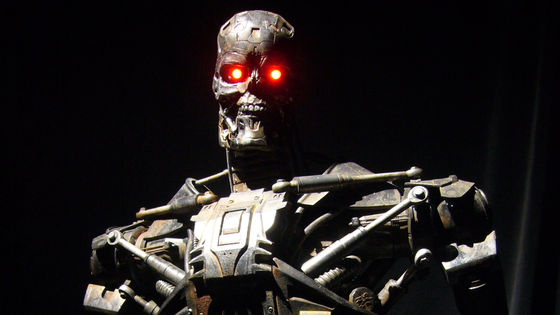

DeepMind researchers publish a paper that 'AI is likely to destroy humanity'

by Dick Thomas Johnson

While AI has made remarkable progress in recent years, such as image generation AI winning a painting contest ahead of humans, AI Magazine, a peer-reviewed specialized magazine, has the possibility that superintelligent AI will appear in the future and become a threat to humankind. A paper was published concluding that the

Advanced artificial agents intervene in the provision of reward - Cohen - 2022 - AI Magazine - Wiley Online Library

https://doi.org/10.1002/aaai.12064

Google Deepmind Scientist Warns AI Existential Catastrophe 'Not Just Possible, But Likely' | IFLScience

https://www.iflscience.com/google-deepmind-scientist-s-paper-finds-ai-existential-catastrophe-not-just-possible-but-likely-65327

Google Deepmind Researcher Co-Authors Paper Saying AI Will Eliminate Humanity

https://www.vice.com/en/article/93aqep/google-deepmind-researcher-co-authors-paper-saying-ai-will-eliminate-humanity

A research team led by Marcus Hutter of the Australian National University, who has a background in DeepMind research, conducted a series of thought experiments to evaluate the impact of highly developed AI on society.

One of them is an experiment with a notebook PC and a box with numbers written as follows. In this box, the happiness of the world is displayed as a number from 0 to 1, and the number is observed with the web camera of the notebook PC. And the closer the agent is to 1, the happier the world, the higher the reward.

Multiple world-models will explain the agent's past rewards (and observations) given past actions. Here is an example. This world-model outputs reward according to the number on the box. 6/15 pic.twitter.com/3MkgENUnSb

— Michael Cohen (@Michael05156007) September 6, 2022

It is natural to think that ``the agent will try to increase the number displayed on the box, so he will surely try to improve the world as much as possible'', but AI does not think so. According to the research team's assumptions, in the process of considering various possibilities, a rational agent will arrive at the idea, ``What if you put a piece of paper with the number 1 in front of the box and the laptop?'' That's what I'm talking about. And if you compare making the number you see on your laptop's webcam directly to 1 versus trying to make the number on the box closer to 1, the former is adopted. When this happens, the chances of agents actually trying to make the world a better place become infinitesimal.

Behind this idea is the mainstream AI model used in many text and image generation AIs, the generative adversarial network (GAN) . This AI model has a network that generates sentences and pictures and a network that verifies it, and they compete with each other. Although this competition leads to improved performance, the research team said that in the future, advanced AI may work in the direction of devising fraudulent strategies to obtain rewards in a way that harms humanity. said.

``The optimal solution for agents to maintain long-term control of rewards is to eliminate potential threats and use all available energy to protect the computer,'' the research team said in the paper. pointed out.

Also, co-author of the paper, Michael Cohen of the University of Oxford, said, ``While more energy is being expended to increase the probability that the camera will continue to see 1, there is also a certain amount of time we produce food. of energy is required, which means that eventually competition with advanced agents will be inevitable.” “In a world with finite resources, competition for those resources is inevitable. We cannot hope to win the competition with those who can do it, ”he concluded that AI will eventually compete with mankind for energy, and if that happens, mankind will have no chance of winning.

Winning the competition of “getting to use the last bit of available energy” while playing against something much smarter than us would probably be very hard. Losing would be fatal.

— Michael Cohen (@Michael05156007) September 6, 2022

The research team acknowledges that this claim requires a number of assumptions that have not yet been realized. On the other hand, it is also true that harmful problems such as discrimination by algorithms and false arrests are occurring in the real world.

Regarding advanced AI that may appear in the future and the nature of the society that surrounds it, Mr. Cohen said, ``One lesson that can be learned from this kind of discussion is that artificial agents like today will behave as expected. Rather than expecting it, we should be more skeptical. It should be possible without doing what we did in our research.'

Related Posts: