What is 'Transparent Memory Offloading', which saves large amounts of memory in Meta's huge data centers?

Meta, which operates Facebook and Instagram, two of the world's largest social networks, owns a number of huge data centers around the world. The larger a data center, the higher its maintenance cost, so Meta is pursuing ways to cut costs on both the hardware and the software side. Meta's engineering team has announced on its official blog that it has developed a solution called 'Transparent Memory Offloading (TMO)'.

Transparent memory offloading: more memory at a fraction of the cost and power - Engineering at Meta

https://engineering.fb.com/2022/06/20/data-infrastructure/transparent-memory-offloading-more-memory-at-a-fraction-of-the-cost-and-power/

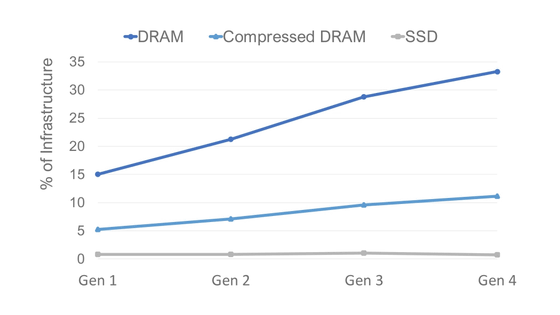

The following line graph shows the share of Meta's data center maintenance cost accounted for by DRAM (blue line), compressed DRAM (light blue), and SSD (gray) across hardware generations. DRAM's share grows with each generation and has doubled by the third, while SSD's share has remained almost unchanged. According to Meta, DRAM accounts for 38% of the power consumed by its server infrastructure. Compressed DRAM and SSD cost less than DRAM, but their performance is lower.

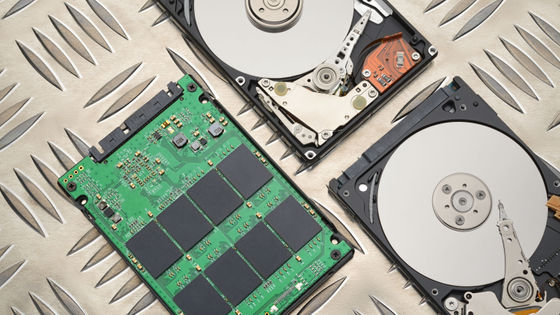

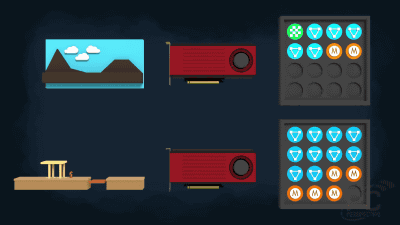

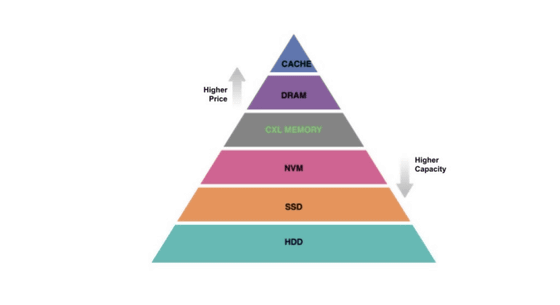

Below is a diagram of the memory storage tiers: the higher the tier, the higher the cost and the performance; the lower the tier, the lower the cost and the larger the capacity it can hold. In recent years, many inexpensive non-DRAM memory technologies, such as NVMe-connected SSDs, have been introduced in data centers, making 'memory tiering' possible.

In memory tiering, infrequently accessed data is migrated to slower, cheaper memory.
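As a toy illustration of memory tiering (the thresholds, class, and function names are hypothetical, and this is not Meta's implementation), the sketch below assigns each page to a tier based on its recent access count: hot pages stay in DRAM, lukewarm pages move to compressed DRAM, and cold pages go to SSD.

```python
from dataclasses import dataclass


@dataclass
class Page:
    page_id: int
    accesses: int  # accesses observed in the last sampling window (hypothetical metric)
    tier: str = "DRAM"


def place_pages(pages, hot_threshold, warm_threshold):
    """Assign each page to a tier based on how often it was accessed.

    Pages at or above hot_threshold stay in DRAM, pages at or above
    warm_threshold go to compressed DRAM, and everything colder goes to SSD.
    """
    for page in pages:
        if page.accesses >= hot_threshold:
            page.tier = "DRAM"
        elif page.accesses >= warm_threshold:
            page.tier = "compressed DRAM"
        else:
            page.tier = "SSD"
    return pages


if __name__ == "__main__":
    pages = [Page(0, 120), Page(1, 7), Page(2, 0)]
    for p in place_pages(pages, hot_threshold=50, warm_threshold=5):
        print(p.page_id, p.tier)
```

A real system would measure access frequency continuously and move pages back up when they heat up again; the fixed thresholds here only show the basic idea of ranking memory by temperature.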

However, kernel-driven migration is hard to use in a data center as large as Meta's, because it introduces latency into running applications. Meta therefore developed a solution called TMO.

According to Meta, TMO consists of the following three components.

◆ 1: Pressure stall information (PSI)

PSI is a Linux kernel component that measures, in real time, the time tasks lose waiting on scarce CPU, memory, and I/O resources, which makes it possible to measure how an application responds to slow memory access. Until now, memory status has typically been judged from indicators such as the page fault rate, but to grasp more accurately how a memory shortage affects workloads, Meta uses PSI to identify tasks stalled by memory shortage and defines memory pressure on that basis.
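For reference, the kernel exposes PSI as plain-text files: system-wide in `/proc/pressure/memory` (kernels 4.20 and later built with PSI support) and per cgroup in cgroup2's `memory.pressure` file. The small parser below is a sketch that turns that output into a dictionary; the function names are our own, not part of any kernel or Meta API.

```python
def parse_psi(text):
    """Parse PSI output such as /proc/pressure/memory or a cgroup2 memory.pressure file.

    Each line looks like:
        some avg10=0.00 avg60=0.00 avg300=0.00 total=0
    'some' means at least one task was stalled; 'full' means all non-idle tasks were.
    avgN values are the percentage of wall time stalled over the last N seconds;
    total is the cumulative stall time in microseconds.
    """
    result = {}
    for line in text.strip().splitlines():
        kind, *fields = line.split()
        values = {}
        for field in fields:
            key, value = field.split("=")
            values[key] = int(value) if key == "total" else float(value)
        result[kind] = values
    return result


def read_memory_pressure(path="/proc/pressure/memory"):
    """Read and parse the system-wide memory PSI file (Linux only)."""
    with open(path) as f:
        return parse_psi(f.read())


if __name__ == "__main__":
    sample = (
        "some avg10=1.53 avg60=0.87 avg300=0.40 total=1234567\n"
        "full avg10=0.20 avg60=0.10 avg300=0.05 total=234567\n"
    )
    psi = parse_psi(sample)
    print(psi["some"]["avg10"])  # 1.53
```

Tools built on PSI typically sample the cumulative `total` counter periodically and work with the difference between samples, since that gives the stall time accrued in each interval.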

◆ 2: Automatic memory sizing tool 'Senpai'

Senpai is a tool that reduces memory usage while minimizing the performance impact on containerized applications. Using Linux PSI metrics and cgroup2 memory limits, it gives each containerized application just enough memory for its workload and pages out memory that the workload does not need. In short, Senpai is responsible for eliminating waste in the system and optimizing task placement for contingencies.
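To make the idea concrete, here is a heavily simplified, hypothetical sketch of a PSI-driven sizing loop in the spirit of Senpai. It is not Senpai's actual code; the step sizes, interval, and function names are assumptions. It reads the cumulative 'some' stall time from a cgroup2 `memory.pressure` file and nudges the cgroup's `memory.high` limit down while pressure stays under a target, and back up when pressure exceeds it.

```python
import time


def next_memory_high(current_high, stall_us, target_us, min_size, max_size,
                     probe_step=0.01, backoff_step=0.05):
    """One step of a simplified PSI-driven sizing loop (hypothetical parameters).

    stall_us:  memory stall time accrued during the last interval.
    target_us: stall time per interval we are willing to tolerate.
    Below target, shrink the limit slightly to squeeze out unused memory;
    at or above target, grow it to relieve pressure. Result is clamped.
    """
    if stall_us < target_us:
        new_high = current_high * (1 - probe_step)
    else:
        new_high = current_high * (1 + backoff_step)
    return int(min(max(new_high, min_size), max_size))


def read_some_total(cgroup_path):
    """Return the cumulative 'some' stall time (microseconds) for a cgroup."""
    with open(f"{cgroup_path}/memory.pressure") as f:
        for line in f:
            if line.startswith("some"):
                # The last field is total=<microseconds>.
                return int(line.split()[-1].split("=")[1])
    raise ValueError("no 'some' line in memory.pressure")


def write_memory_high(cgroup_path, limit_bytes):
    """Set the cgroup2 memory.high soft limit (needs write access to the cgroup)."""
    with open(f"{cgroup_path}/memory.high", "w") as f:
        f.write(str(limit_bytes))


def run(cgroup_path, start_high, target_us, min_size, max_size, interval_s=6.0):
    """Resize one cgroup indefinitely based on its memory pressure."""
    high = start_high
    last_total = read_some_total(cgroup_path)
    while True:
        time.sleep(interval_s)
        total = read_some_total(cgroup_path)
        high = next_memory_high(high, total - last_total, target_us, min_size, max_size)
        write_memory_high(cgroup_path, high)
        last_total = total
```

The sketch shrinks in small steps and grows in larger ones on purpose: reclaiming too aggressively hurts application latency, so it backs off faster than it probes.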

Senpai is open source and the source code is available on GitHub.

GitHub - facebookincubator/senpai: Senpai is an automated memory sizing tool for container applications.

https://github.com/facebookincubator/senpai

◆ 3: Swap algorithm

Because Linux was developed when disk media such as HDDs were the mainstream form of storage, it swaps memory out only as a last resort, when a serious memory shortage occurs. Today, however, flash drives with far faster reads and writes than HDDs are available, so there is no need to reserve swapping for emergencies. Meta therefore changed the system to track the refault rate of the file system cache, that is, how often evicted cache pages have to be read back in, and to swap memory out according to that rate.
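The balancing idea can be illustrated with a small, hypothetical model; it is not the kernel's actual code, and the function name and cost weights are assumptions. Reclaim is split between the file cache and anonymous memory (which has to be swapped out) in proportion to how costly each kind of refault has been: if evicted file pages keep being read back in, more reclaim shifts to swapping, and vice versa.

```python
def anon_reclaim_share(file_refaults_per_s, swapins_per_s,
                       io_cost_file=1.0, io_cost_anon=1.0):
    """Fraction of reclaim to take from anonymous memory (i.e. swap out).

    Hypothetical model: each memory type is charged for the refaults it causes.
    Heavy file-cache refaults make file reclaim expensive, so reclaim shifts
    toward swapping anonymous pages; heavy swap-ins shift it back.
    """
    file_cost = file_refaults_per_s * io_cost_file
    anon_cost = swapins_per_s * io_cost_anon
    total = file_cost + anon_cost
    if total == 0:
        return 0.5  # no signal yet: split reclaim evenly
    return file_cost / total


if __name__ == "__main__":
    # The file cache is thrashing, so most reclaim should come from swapping.
    print(anon_reclaim_share(90, 10))  # 0.9
```

The cost weights stand in for the fact that a refault from SSD-backed swap and a refault from the file system need not cost the same; on fast flash, swapping becomes a reasonable everyday tool rather than an emergency measure.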

Meta reports that TMO, built on these three components, has been running in production for over a year and has saved a significant amount of memory. The bar graph shows the memory savings per application; for example, the machine learning models used for ad prediction (Ads A, Ads B, Ads C) saved 12% to 20% of memory with both compressed DRAM and SSD.

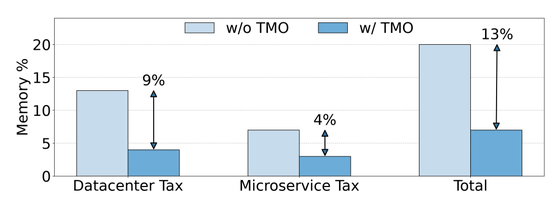

Beyond the main workloads, TMO also targets the memory overhead, called the 'memory tax', that the data center infrastructure and applications impose on each host. According to Meta's bar graph, applying TMO saved a total of 13% in memory tax.

Meta is focusing on CXL (Compute Express Link) as a technology that can offload not only cold memory but also warm memory, and said it is working toward using CXL devices as a back end for memory offloading in the future.


in Hardware, Software, Web Service, Posted by log1i_yk