Cerebras CS-2, a monster machine that can train AI models with 120 trillion parameters on 163 million cores, is announced

On August 24, 2021, Cerebras Systems, a Silicon Valley chipmaking startup, announced the Cerebras CS-2, a deep learning system about the size of a small refrigerator that is equipped with a huge chip that uses a

Cerebras Systems Announces World's First Brain-Scale Artificial Intelligence Solution | Business Wire

https://www.businesswire.com/news/home/20210824005645/en/

Cerebras Systems connects its huge chips to make AI more power-efficient | Reuters

https://www.reuters.com/technology/cerebras-systems-connects-its-huge-chips-make-ai-more-power-efficient-2021-08-24/

Cerebras' CS-2 brain-scale chip can power AI models with 120 trillion parameters | VentureBeat

https://venturebeat.com/2021/08/24/cerebras-cs-2-brain-scale-chip-can-power-ai-models-with-120-trillion-parameters/

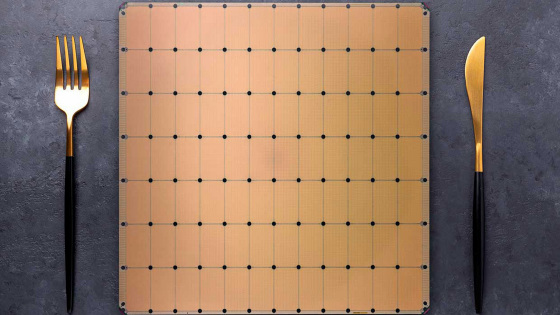

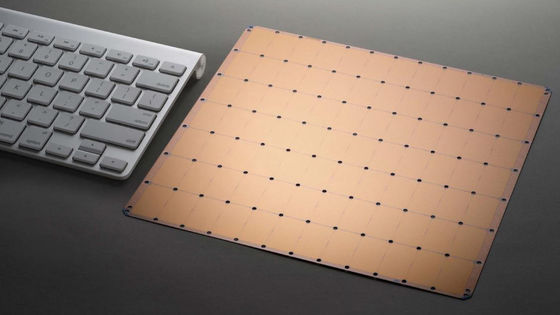

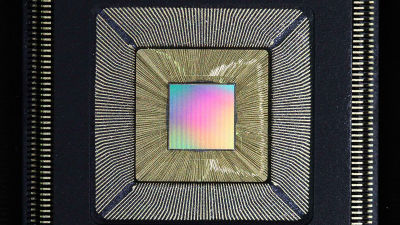

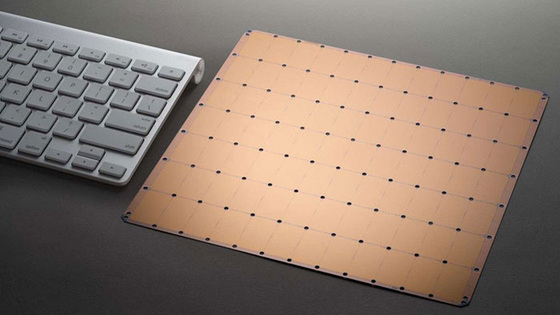

Cerebras Systems, founded in 2016, is a Silicon Valley semiconductor company that develops artificial intelligence systems. In 2019 it announced the Wafer Scale Engine (WSE), the world's largest chip at 20 cm x 22 cm, and its WSE-based deep learning system 'Cerebras CS-1' went on to produce simulation results described as 'exceeding the laws of physics'.

Simulation on the world's largest chip, the 1.2-trillion-transistor 'Wafer Scale Engine', is fast enough to 'exceed the laws of physics' - GIGAZINE

Cerebras Systems has now announced the 'Cerebras CS-2', which uses the 'Wafer Scale Engine 2 (WSE-2)', a chip with 2.6 trillion transistors, as its compute unit.

According to the company, the CS-2 platform is built on three technologies. 'Cerebras Weight Streaming' simplifies the workload distribution model by separating compute from parameter storage, which lets a cluster scale from one CS-2 to as many as 192. 'Cerebras MemoryX' is a memory expansion technology that provides up to 2.4 petabytes of high-performance memory and can support models with 120 trillion parameters. 'Cerebras SwarmX' is an AI-optimized communications fabric that can connect up to 163 million cores across up to 192 CS-2s.
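Dividing the cluster totals in the paragraph above gives a feel for the scale involved. The short Python sketch below is only back-of-envelope arithmetic: it assumes decimal petabytes and treats "163 million" as a rounded figure, so the derived values are approximate. It works out to roughly 850,000 cores per CS-2 (in line with the WSE-2's published count of 850,000 cores, since 192 x 850,000 = 163.2 million) and about 20 bytes of MemoryX capacity per parameter; the article does not say how that capacity is split between weights and other training state.

```python
# Back-of-envelope check of the cluster-level figures quoted in the article.
# Inputs are the article's headline numbers; the per-system and
# per-parameter values are derived here, not official specifications.

MAX_SYSTEMS = 192              # maximum CS-2 units in one cluster
TOTAL_CORES = 163_000_000      # "163 million" cores (rounded headline figure)
MEMORYX_BYTES = 2.4e15         # 2.4 petabytes, decimal units assumed
MAX_PARAMS = 120e12            # 120 trillion parameters


def cores_per_system(total_cores: int, systems: int) -> float:
    """Average number of cores each CS-2 contributes to the cluster."""
    return total_cores / systems


def bytes_per_parameter(memory_bytes: float, params: float) -> float:
    """MemoryX capacity available per model parameter, in bytes."""
    return memory_bytes / params


if __name__ == "__main__":
    print(f"cores per CS-2: {cores_per_system(TOTAL_CORES, MAX_SYSTEMS):,.0f}")
    print(f"bytes per parameter: {bytes_per_parameter(MEMORYX_BYTES, MAX_PARAMS):.0f}")
```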

Of particular note is that the CS-2 does not lose power efficiency as more chips are added. The large neural networks used in today's AI cannot be processed on a single chip, so they are usually distributed across many chips. However, power efficiency drops as the number of chips grows, so raising the processing capacity of a deep learning system as a whole has required a huge amount of power.

The CS-2, by contrast, keeps the same power efficiency as chips are added, so doubling processing capacity only doubles power consumption. According to Cerebras, this allows a cluster to grow to as many as 192 CS-2 units without losing power efficiency, linking 163 million cores into a single system that can train a neural network with 120 trillion parameters, comparable in scale to the human brain.
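The difference between the two scaling regimes described in the last two paragraphs can be made concrete with a toy model. In this Python sketch, the per-unit power and throughput values and the 10% efficiency loss per doubling are hypothetical placeholders chosen for illustration; the article gives no numbers for either regime. In the "constant" case, power and useful work both grow in proportion to the number of units, so efficiency stays flat; in the "degrading" case, power still grows linearly but useful work grows more slowly.

```python
# Toy comparison of two cluster scaling regimes.
# UNIT_POWER_KW, UNIT_THROUGHPUT and the 10% loss per doubling are
# hypothetical placeholders, not measured CS-2 or GPU-cluster figures.
import math

UNIT_POWER_KW = 1.0      # hypothetical power draw of one unit
UNIT_THROUGHPUT = 1.0    # hypothetical useful work per unit of time for one unit


def linear_cluster(units: int) -> tuple[float, float]:
    """(power, throughput) when efficiency stays constant as units are added."""
    return units * UNIT_POWER_KW, units * UNIT_THROUGHPUT


def degrading_cluster(units: int, loss_per_doubling: float = 0.10) -> tuple[float, float]:
    """(power, throughput) when every doubling of units costs some efficiency.

    Throughput is scaled by (1 - loss_per_doubling) ** log2(units), so power
    grows linearly while useful work grows more slowly.
    """
    factor = (1.0 - loss_per_doubling) ** math.log2(units)
    return units * UNIT_POWER_KW, units * UNIT_THROUGHPUT * factor


def efficiency(power: float, throughput: float) -> float:
    """Useful work delivered per unit of power."""
    return throughput / power


if __name__ == "__main__":
    for n in (1, 2, 8, 64, 192):
        constant = efficiency(*linear_cluster(n))
        degrading = efficiency(*degrading_cluster(n))
        print(f"{n:>3} units  constant: {constant:.2f}  degrading: {degrading:.2f}")
```

Under the constant-efficiency model, going from 96 to 192 units exactly doubles both power and throughput, which is the behavior the article attributes to the CS-2.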

In a statement, Cerebras Systems co-founder Andrew Feldman said: 'Large networks like GPT-3 have already transformed natural language processing (NLP), making possible things that were never imagined. Models with 1 trillion parameters are now emerging in the industry, and we are pushing that boundary out by two orders of magnitude to enable brain-scale neural networks with 120 trillion parameters.'


in Hardware, Posted by log1l_ks