What are the ethical issues raised by the 'military use of AI' now being developed for AI-equipped tanks and missiles?

Thanks to technological advances, countries around the world are researching and developing artificial intelligence (AI) for military purposes. However, critics point out that autonomous weapons pose serious problems in practical use. These weapons can assess a situation and kill enemies or destroy bases without direct human command, yet their decisions come from computers that lack the moral sense humans have.

Future warfare will feature autonomous weaponry --The Washington Post

Air Force uses artificial intelligence on U-2 Dragon Lady spy plane --The Washington Post

https://www.washingtonpost.com/business/2020/12/16/air-force-artificial-intelligence/

The US Department of Defense is actively researching AI. Its budget for AI-related projects, including weapons development, was $927 million (about 102.5 billion yen) in 2020 and $841 million (about 88.7 billion yen) in 2021. In addition, the Department of Defense's Defense Advanced Research Projects Agency (DARPA) has planned AI-related projects for the five years from 2019 to 2023, with a budget of $2 billion (about 208 billion yen).

At the U.S. Military Academy at West Point in New York, cadets train with robot tanks that can be programmed to defeat enemies. They learn not only how to work with the algorithms but also to stay wary of autonomous weapons. However, the military does not appear ready to deploy these robot tanks on an actual battlefield.

In December 2020, the U.S. Air Force conducted an experiment on the U-2, a high-altitude tactical reconnaissance aircraft. It used an AI based on MuZero, developed by DeepMind, to detect enemy missiles and missile launchers. The U-2 was still flown by a human pilot, but the experiment marked the first full-scale use of AI aboard a US military aircraft.

AI is also being developed to help devise tactics and strategy, not just to be mounted on weapons. According to Lieutenant General Jack Shanahan, the first director of the Pentagon's Joint Artificial Intelligence Center, a system that uses AI to simplify target selection and shorten the time needed to launch an attack is scheduled to be deployed on the battlefield around 2021.

Mary Wareham, a member of the

'Machines have no compassion and cannot weigh the difficult ethical choices that humans make, so killing with autonomous weapons crosses a moral threshold,' said Wareham. She also argues that when a robot kills, it is unclear who is responsible, and that this ambiguity makes war more likely.

For example, the computer may lose its ability to tell friendly forces from enemies if an opponent's shirt is soaked with oil, if an unusual camouflage pattern is used, or if the situation differs only slightly from what was expected.

In an experiment at Carnegie Mellon University, an AI playing Tetris was instructed 'not to lose,' and it eventually decided to pause the game so that it could never lose. This shows that an AI may completely disregard fairness and norms in order to satisfy its objective, and could even prove useless on the battlefield.

Will Roper, a former Pentagon official who promoted AI-related projects within the Pentagon, said, 'We are concerned that our AI technology may not be keeping up with the rest of the world. Testing needs to proceed, but there is no risk of our losing our ethical or moral standards.'

Still, as AI technology advances, its ethical issues can no longer be avoided. For military AI, the question is: 'How much control over the decision to kill people on the battlefield should commanders hand over to machines?'

Unlike humans, machines do not grow tired or lose focus under stress. A human soldier whose comrade has just been killed may make the wrong choice about which firepower to aim at whom, but a machine stays focused on its mission without becoming emotional.

Former Google CEO Eric Schmidt, who observed the U-2 experiment, said the military is unlikely to adopt AI soon. It is difficult to show how AI will behave in every possible situation, including situations where human lives are at stake.

Of course, some argue that the military use of AI should be restricted. In October 2012, several human rights groups, including Human Rights Watch, launched a campaign to ban robots designed to kill people, alarmed by the rapid progress of drones and of artificial intelligence technology. In 2013, an international conference on the Convention on Certain Conventional Weapons (CCW) then discussed whether the manufacture, sale, and use of lethal autonomous weapons should be banned outright.

Submitting a draft to the United Nations that adds a ban on lethal autonomous weapons to the CCW requires the consent of all 125 countries that are parties to the CCW. However, at the time the article was written, only 30 countries had said they agreed.

Some people inside Google also opposed taking part in these military projects. In April 2018, about 4,000 Google employees signed and submitted a petition demanding that the company withdraw from 'Project Maven,' which develops a system that uses AI to identify and track objects in drone and satellite imagery. In June of that year, Google announced that it would not renew its Project Maven contract, saying it would not be involved in systems used directly in weapons. Employees at Amazon and Microsoft submitted similar petitions, but both companies still maintain close ties with the Pentagon.

More than 3,000 Google employees petition Pichai to 'stop cooperating with the Pentagon' --GIGAZINE

By Maurizio Pesce

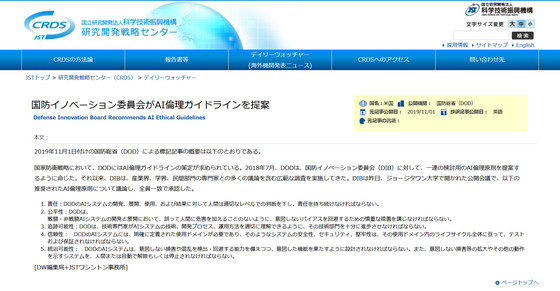

The protests by Google employees made Shanahan uncomfortable, but he said they also made him keenly aware of how dependent the Pentagon is on the private sector. In the wake of the Project Maven turmoil, the Pentagon asked the Defense Innovation Board to propose AI ethics guidelines and tasked it with investigating ethical issues related to the use of AI.

Defense Innovation Board Proposes AI Ethics Guidelines « Daily Watcher | Center for Research and Development Strategy (CRDS)

https://crds.jst.go.jp/dw/20191218/2019121821986/

Former Google CEO Schmidt said, 'If a human makes a mistake and kills a civilian, it's a tragedy. But if an autonomous system kills a civilian, it's more than a tragedy. In general, people will not take responsibility for a system they are not sure will do exactly what it is supposed to do. The reliability of AI may be fixed in the coming decades, but not next year.'


in Note, Posted by log1i_yk