A large-scale network outage hit Amazon, Microsoft, PSN, and other services; the cause was a BGP misconfiguration

On August 30, 2020 Pacific Time, a large-scale network outage occurred.

Analysis of Today's CenturyLink/Level(3) Outage

https://blog.cloudflare.com/analysis-of-todays-centurylink-level-3-outage/

Major internet outage: Dozens of websites and apps were down - CNN

https://edition.cnn.com/2020/08/30/tech/internet-outage-cloudflare/index.html

CenturyLink outage led to a 3.5% drop in global web traffic | ZDNet

https://www.zdnet.com/article/centurylink-outage-led-to-a-3-5-drop-in-global-web-traffic/

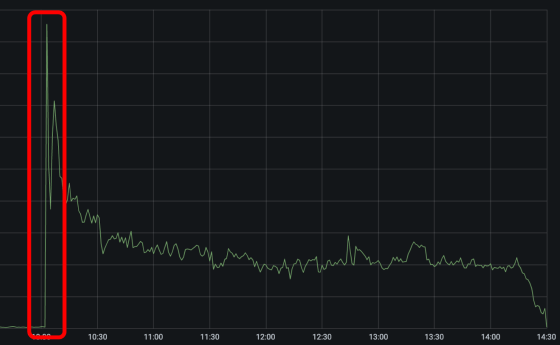

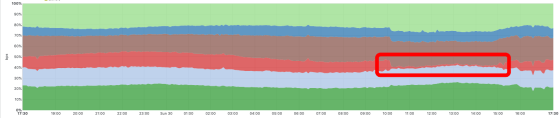

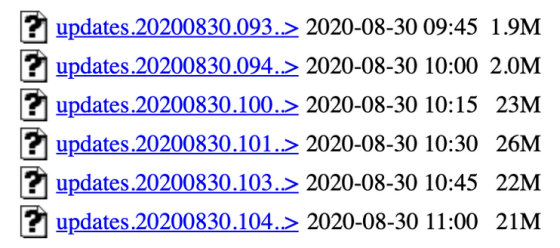

A CenturyLink network outage on the morning of August 30, 2020 Pacific Time caused problems for many services, including Amazon, PSN, and Hulu. Cloudflare's monitoring system also recorded a surge of HTTP 522 errors, which indicate that Cloudflare could not connect to the origin server.

Cloudflare detected the CenturyLink failure and automatically rerouted traffic through other providers' networks.

At the time of writing, CenturyLink had not disclosed the cause of the outage, but Cloudflare analyzed it based on what it observed during the incident.

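Cloudflare speculates that the root cause of this outage was an inappropriate Flowspec rule. Flowspec is an extension to BGP that lets routers distribute traffic-filtering rules to one another, typically to block DDoS attack traffic across a network.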

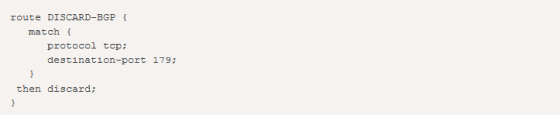
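As a rough illustration of why a single Flowspec rule can be so dangerous: each rule pairs match conditions (destination prefix, IP protocol, port, and so on) with an action such as discarding the matching traffic. The sketch below is a simplified, hypothetical model, not a real router implementation or Flowspec encoding; the `FlowspecRule`, `Packet`, and `forward` names are illustrative, and only the destination port is modeled. It shows that a rule matching TCP port 179, the port BGP runs on, would also drop the BGP sessions that carry the rules in the first place.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional

BGP_PORT = 179  # BGP sessions run over TCP port 179


@dataclass(frozen=True)
class Packet:
    dst: str
    protocol: str  # "tcp" or "udp"
    dst_port: int  # simplification: only the destination port is modeled


@dataclass(frozen=True)
class FlowspecRule:
    """Hypothetical, simplified Flowspec rule: match fields plus a discard action."""
    dst_prefix: str = "0.0.0.0/0"   # default matches any IPv4 destination
    protocol: Optional[str] = None  # None means "any protocol"
    dst_port: Optional[int] = None  # None means "any port"
    discard: bool = True

    def matches(self, pkt: Packet) -> bool:
        if ip_address(pkt.dst) not in ip_network(self.dst_prefix):
            return False
        if self.protocol is not None and pkt.protocol != self.protocol:
            return False
        if self.dst_port is not None and pkt.dst_port != self.dst_port:
            return False
        return True


def forward(pkt: Packet, rules: list) -> bool:
    """Return True if the packet is forwarded, False if a matching rule discards it."""
    for rule in rules:
        if rule.matches(pkt):
            return not rule.discard
    return True


# A narrow rule, e.g. to stop a DDoS against one host on UDP port 53 ...
ddos_rule = FlowspecRule(dst_prefix="203.0.113.10/32", protocol="udp", dst_port=53)
# ... versus an overly broad rule that matches every BGP session in the network.
bad_rule = FlowspecRule(protocol="tcp", dst_port=BGP_PORT)

bgp_keepalive = Packet(dst="198.51.100.1", protocol="tcp", dst_port=BGP_PORT)
web_request = Packet(dst="198.51.100.1", protocol="tcp", dst_port=443)

print(forward(bgp_keepalive, [ddos_rule]))  # True: BGP traffic still flows
print(forward(bgp_keepalive, [bad_rule]))   # False: the BGP session itself is cut
print(forward(web_request, [bad_rule]))     # True: ordinary traffic is unaffected
```

The narrow rule only touches the attacked host, while the broad one leaves web traffic alone but silently severs the control plane that routers use to exchange routes.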

CenturyLink's status message stated that a Flowspec announcement had prevented BGP sessions from being established within its network. In other words, a rule that blocked BGP traffic itself appears to have spread through the network. Cloudflare also observed a rapid increase in BGP UPDATE messages, and speculates that the offending Flowspec rule was carried at the end of an UPDATE message, causing the messages to be sent over and over in a loop.
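The feedback loop Cloudflare speculates about can be illustrated with a toy simulation. This is a hypothetical model built on assumptions, not CenturyLink's actual router behavior: each time a session comes up, the peer re-sends its whole table (one UPDATE per route, a simplification since real UPDATEs can carry many prefixes) and the Flowspec rule arrives in the last UPDATE; if the rule blocks the BGP port, the session dies, the rule learned over it is flushed, and the session comes back up to repeat the cycle. The function name `run_session` and its parameters are illustrative.

```python
BGP_PORT = 179  # BGP runs over TCP port 179


def run_session(rounds: int, table_size: int, rule_port: int) -> tuple:
    """Return (UPDATE messages sent, session resets) over `rounds` time steps.

    Toy model with hypothetical assumptions, not real router behavior:
    - whenever the session (re)establishes, the peer re-sends its whole table,
      one UPDATE per route, and the Flowspec rule arrives in one final UPDATE;
    - if the rule blocks the BGP port, installing it tears the session down;
    - a torn-down session flushes the rules learned over it, so the session
      re-establishes on the next step and the cycle repeats.
    """
    updates_sent = 0
    resets = 0
    session_up = False
    for _ in range(rounds):
        if session_up:
            continue  # a stable session sends no new full-table burst
        # Session comes up: full table, then the trailing Flowspec UPDATE.
        session_up = True
        updates_sent += table_size + 1
        if rule_port == BGP_PORT:
            # The rule blocks BGP itself: the session drops, which also
            # withdraws the rule, so the loop starts again next step.
            session_up = False
            resets += 1
    return updates_sent, resets


# A rule aimed at some other port: one burst of UPDATEs, then stability.
print(run_session(rounds=10, table_size=1000, rule_port=53))        # (1001, 0)
# A rule that matches BGP: the full table is re-sent on every step.
print(run_session(rounds=10, table_size=1000, rule_port=BGP_PORT))  # (10010, 10)
```

Under these assumptions the UPDATE count grows linearly with time instead of settling after one burst; multiplied across a real routing table of hundreds of thousands of prefixes and many sessions, this is the kind of pattern that would match the surge of UPDATE messages Cloudflare reported.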

Cloudflare also noted that CenturyLink is a highly capable network operator, and suggested that the outage took more than four hours to resolve because the flood of UPDATE messages put such a heavy load on the routers that even logging in to them may have been difficult. In addition, many providers let customers submit their own Flowspec rules, and if the offending rule came from a customer, it would be hard to trace, Cloudflare explained.

'Failures do happen. Thank you to CenturyLink's team for keeping us informed during the failure,' Cloudflare said.


in Web Service, Posted by darkhorse_log