How much does the relationship of trust and emotions change depending on whether the trading partner is a robot or a human being?

With the advancement of science and technology, robots and computers are being introduced one after another at work sites, and there are

Trust in humans and robots: Economically similar but emotionally different - ScienceDirect

https://www.sciencedirect.com/science/article/abs/pii/S0167487020300106

Trust in humans and robots: Economically similar but emotionally different | EurekAlert! Science News

https://www.eurekalert.org/pub_releases/2020-04/cu-tih041620.php

The scenes where humans and robots interact are becoming commonplace in markets, workplaces, streets, and homes. Research teams

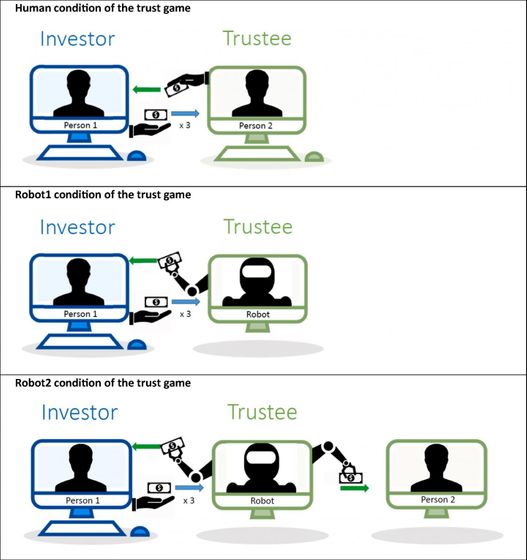

The trust game is played by two test subjects, an 'investor' and a 'trustee.' The investor is first paid a certain amount and then decides how much of it to send to the trustee; the trustee receives three times the amount sent. The trustee then sends some of the money received back to the investor as a 'reward.' There is no restriction on the amount returned: the trustee may return everything on hand or nothing at all. The game ends when the investor receives the reward from the trustee.

As the name implies, the trust game investigates the 'trust relationship' between the investor and the trustee. For example, suppose an investor is initially paid $10. If the investor does not trust the trustee, believing that the trustee will keep all of the money received and return nothing, the investor has no reason to send any money in the first place. In this case, the investor ends with $10 and the trustee ends with nothing.

On the other hand, if the investor trusts the trustee and believes that the trustee will give back more than the amount invested, the investor will send money to the trustee. For example, suppose an investor is paid $10. In actual experiments, investors often sent about 50% of the amount they were paid, so suppose the investor sends $5 (about 550 yen).

In this case, the trustee receives $15 (about 1600 yen), three times the $5 sent, so at this point the investor holds $5 and the trustee holds $15. In many human trust games, however, the trustee returns about 40% of the amount received. If the trustee sends $6 (about 660 yen) of the $15 back to the investor, the investor ends up with $11 (about 1200 yen) and the trustee with $9 (about 990 yen). Both do better than the $10 for the investor and $0 for the trustee that result when the investor sends nothing. In human-to-human transactions, it has been observed that investors trust trustees and that trustees reward that trust.
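The arithmetic of the example above can be checked with a short sketch. The function name `trust_game` and its parameters are illustrative assumptions, not something taken from the study; the $10 endowment, the 50% and 40% shares, and the tripling of the amount sent come from the example in the text.

```python
from fractions import Fraction


def trust_game(endowment, sent_share, returned_share, multiplier=3):
    """Return (investor_payoff, trustee_payoff) for one round.

    Hypothetical helper for illustration only.
    sent_share:     share of the endowment the investor sends (0 to 1)
    returned_share: share of the received amount the trustee returns (0 to 1)
    multiplier:     factor applied to the amount sent (3 in the text)
    """
    if not 0 <= sent_share <= 1 or not 0 <= returned_share <= 1:
        raise ValueError("shares must be between 0 and 1")
    sent = endowment * sent_share          # money leaving the investor
    received = sent * multiplier           # trustee gets three times that
    returned = received * returned_share   # the 'reward' sent back
    investor = endowment - sent + returned
    trustee = received - returned
    return investor, trustee


# No trust: the investor sends nothing.
no_trust = trust_game(10, Fraction(0), Fraction(0))

# Typical human play in the text: send 50%, return 40%.
typical = trust_game(10, Fraction(1, 2), Fraction(2, 5))

print(f"no trust: investor ${no_trust[0]}, trustee ${no_trust[1]}")
print(f"typical:  investor ${typical[0]}, trustee ${typical[1]}")
```

`Fraction` is used instead of floating-point numbers so that the 50% and 40% shares give exact dollar amounts.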

In this experiment, three conditions were run: a 'Human-Human Trust Game', a 'Human-Robot Trust Game', and a 'Human-Robot Trust Game in which the robot's profit is passed to another person'. The aim was to investigate how the trust and feelings the investor has toward the other party change depending on whether the trading partner is a human, a robot, or a robot whose outcome ultimately affects a human.

by Chapman University

The experiments showed that investors sent money with the same degree of trust regardless of whether the partner was a human, a robot, or a robot that affects a human. Humans neither trusted the robot blindly nor distrusted it and refused to deal with it.

On the other hand, the feelings investors had when a transaction succeeded or failed changed depending on whether the partner was a human or a robot. When the transaction was successful and profitable, the investor's 'gratitude' was greater when the other party was a human than when it was a robot. Likewise, the 'anger' a person felt when a transaction failed was greater when the partner was a human and smaller when the partner was a robot. The research team also points out that when the trading partner was a robot that affects a human, the investor's emotions were stronger than when the counterparty was a simple robot.

This experiment showed that the trust human investors hold at the beginning of the game is the same whether the partner is a robot or a human, but if similar transactions are repeated many times, the trust relationship with the trading partner may diverge. If transactions succeed many times, the investor will feel much more gratitude toward a human partner, and the relationship is likely to become very strong. With a robot partner, gratitude is smaller, so the relationship of trust may remain weaker than with a human. Similarly, if transactions fail several times, anger toward a human partner may arise easily and break the trust relationship, while with a robot partner anger is less likely and the relationship may be harder to break.

In the future, unmanned vehicles are expected to run on public roads, and as the number of vehicles controlled by robots increases, problems may arise with conventional vehicles driven by humans. In the same way, robots are expected to enter the reception areas of hotels and airports, as well as various service industries, and the number of cases in which humans and robots conduct transactions will increase. The research team argues that it is meaningful to investigate the relationship of trust between humans and robots, and the emotions involved, in order to understand such future situations.

in Note, Posted by log1h_ik