Google develops a model incorporating Adversarial Examples to overcome the weakness of neural machine translation


Google's neural machine translation has a weakness: a slight modification of the source text can cause a large change in the translated text. To overcome this weakness, Google has developed a new model that incorporates 'Adversarial Examples', a technique best known from image recognition, where noise that humans cannot perceive is added to an image to confuse a model.

Google AI Blog: Robust Neural Machine Translation

https://ai.googleblog.com/2019/07/robust-neural-machine-translation.html

Neural machine translation using Google's Transformer model has been successful in the online translation world. Neural machine translation is based on deep neural networks and is trained end to end on parallel corpora, without the need for explicit language rules.

However, despite this success, neural machine translation is very sensitive to minor modifications of its input. Simply replacing one word in a sentence with a synonym may result in a completely different translation.

Some companies and organizations cannot incorporate neural machine translation into their systems because of this lack of robustness. It has also been pointed out that Wikipedia contains a large number of machine-translated articles, which undermines the credibility of Wikipedia itself.

Machine translation still has problems as a translation tool for Wikipedia, making Wikipedia itself unreliable - GIGAZINE

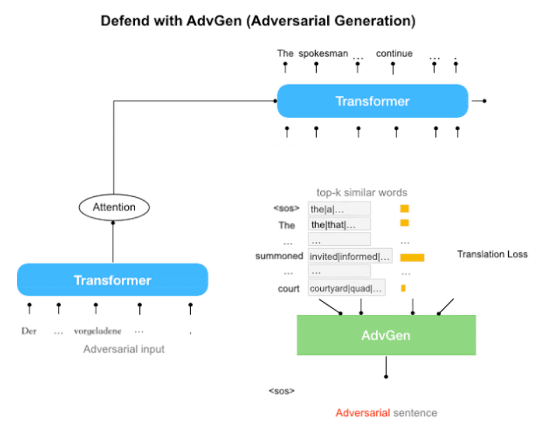

Google is working on a solution to this problem, and a paper published in June 2019 describes one such approach. The study incorporates 'Adversarial Examples', inputs to which small perturbations have been added in order to confuse the translation model, much like the imperceptible noise added to images in image recognition. The method is inspired by generative adversarial networks (GANs), but instead of relying on a discriminator to judge authenticity, it uses adversarial examples during training to diversify and extend the training set.

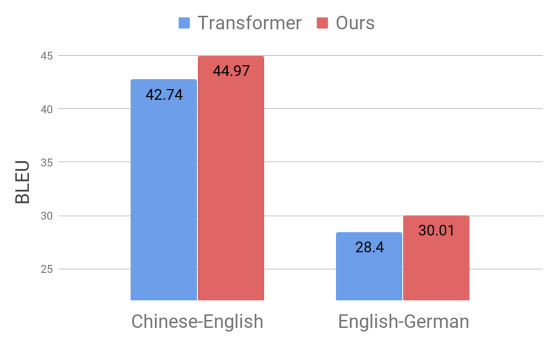
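The idea of extending a training set with perturbed inputs can be illustrated with a minimal Python sketch. This is not the method from the paper: the synonym table, `perturb`, and `augment` are hypothetical names introduced here, and random synonym swaps stand in for true adversarial perturbations, which would be chosen specifically to mislead the model. The sketch only shows how a slightly modified source sentence can be paired with the unchanged target translation, so that a model trained on the result sees both variants.

```python
import random

# Hypothetical synonym table, used only for illustration.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "film": ["movie"],
    "big": ["large"],
}


def perturb(sentence, rng, max_swaps=1):
    """Return a copy of `sentence` with up to `max_swaps` words replaced by a synonym."""
    words = sentence.split()
    # Positions whose word has at least one known synonym.
    candidates = [i for i, w in enumerate(words) if w.lower() in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:max_swaps]:
        words[i] = rng.choice(SYNONYMS[words[i].lower()])
    return " ".join(words)


def augment(pairs, seed=0):
    """Extend a parallel corpus with perturbed sources that keep the original target."""
    rng = random.Random(seed)
    augmented = list(pairs)
    for source, target in pairs:
        noisy = perturb(source, rng)
        if noisy != source:  # skip sentences that could not be perturbed
            augmented.append((noisy, target))
    return augmented


if __name__ == "__main__":
    corpus = [
        ("the quick fox", "der schnelle Fuchs"),
        ("hello world", "hallo Welt"),
    ]
    for source, target in augment(corpus):
        print(source, "->", target)
```

In this toy setup, the perturbed sentence keeps the same reference translation as the original, which is what pushes a model toward giving similar output for similar input.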

The development team benchmarked the combination of “Chinese-English” and “English-German” and found that the

The researchers say that the results of this study show it is possible to overcome the 'lack of robustness', an existing weakness of neural machine translation. The new model performs better than competing models, and it is hoped that it will be useful for downstream tasks in the future.


in Software, Posted by darkhorse_log