An attempt to read electroencephalograms with deep learning

by Thư Anh

Many researchers are trying to accurately predict actual movements from electroencephalograms (EEG) and nerve signals using deep learning, a technique already applied to technologies such as translation programs, video editing, and noise suppression during calls. FloydHub has also shown that such attempts can now be carried out quite easily.

Reading Minds with Deep Learning - FloydHub Blog

https://blog.floydhub.com/reading-minds-with-deep-learning/

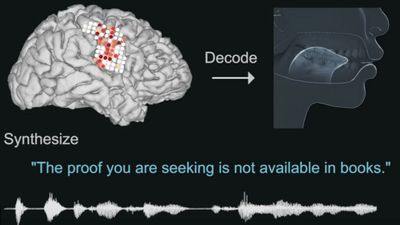

Human life is a continuous process of translating various kinds of information into a suitable form. We hear because vibrations in the air are converted into sound, and we see because electromagnetic waves are converted into images. Among the many signals associated with human beings, brain waves have attracted particular attention in recent years: a "Brain Computer Interface (BCI)" receives, analyzes, and makes use of them.

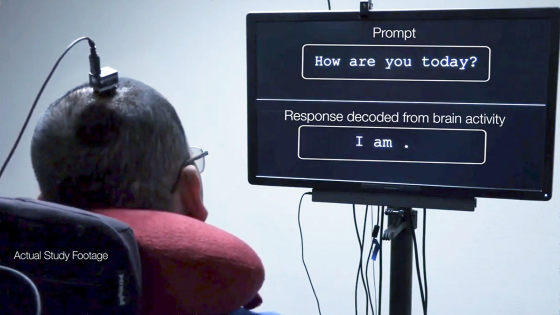

Using BCI and deep learning, several groups are attempting to predict what a person is trying to do, or what they are thinking, from changes in brain waves and similar signals.

◆ CTRL-labs

A company called CTRL-labs attached a neural sensor to the wrist, recorded which nerve signals were emitted when each finger moved, and built an interface that can accurately predict the actual movement of the hand from those signals. According to CTRL-labs, the wrist sensor picks up "easily recognizable signals" emitted by the nervous system: the company says it reads the nerve signals running between the spinal cord and the arm, rather than reading them at the brain.

CTRL-labs human neural interface - YouTube

The CTRL-labs approach could be a breakthrough for BCI, a field in which it has long been assumed that electrodes and sensors must be attached to the brain.

CTRL-labs also asks: "If we could communicate with computers through neural signals alone, could our nervous system learn to handle more than we currently use?"

◆ MindMaze

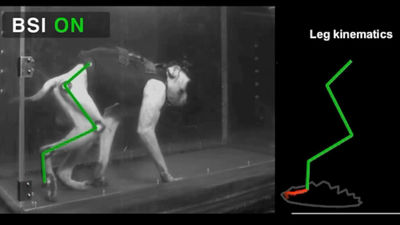

Many BCIs are applied in the medical field. Controlling a computer-connected prosthesis by thought could offer great hope to people who are paralyzed and have lost movement in part of their body. MindMaze is developing VR training applications so that people with partial paralysis can relearn how to control their muscles properly.

The application converts the user's thoughts into the movements of an avatar in VR space. The approach being studied has users relearn how to move their actual body while freely manipulating that avatar.

Mindmaze The Neurotechnology Company

◆ Kernel

A company called Kernel, launched by Bryan Johnson, founder of Braintree, which eBay bought for $800 million (about 90 billion yen), has also entered the BCI field. As a short-term goal, Kernel is working on transferring memories from the brain to a computer chip, and it claims to have already achieved "about 80% success". However, no details had been disclosed at the time of writing.

Kernel

◆ Neuralink

Neuralink is a company developing a neural interface that connects computers and brains, launched by Elon Musk, who also heads Tesla and SpaceX. Although the company is working on medical applications of BCI and on connected devices, its real aim is to merge human beings and AI. Musk asserts that the only way for humanity to survive in the long run is "to evolve with AI."

Hearing these corporate stories, the BCI field may seem unrelated to the general public, but in fact a convolutional neural network can be used to read from brain waves what kind of action was performed. FloydHub, a blog that publishes information on deep learning, gives a rough explanation of how to actually read brain waves.

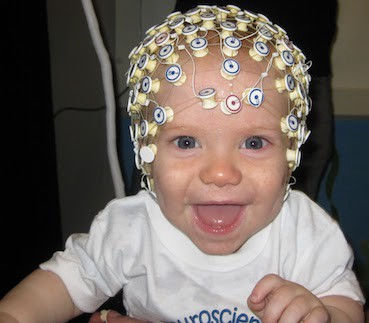

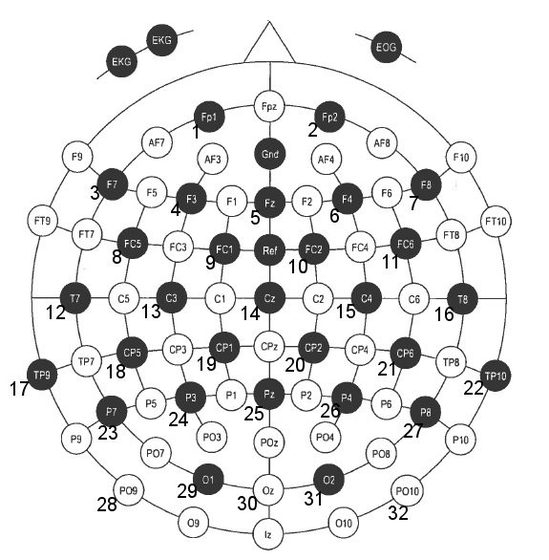

Reading brain waves requires brain wave data, so FloydHub uses a data set of brain wave measurements recorded from twelve people while they performed a manual task.

In this data set, brain waves are recorded at the 32 electrode positions shown below. The subject is instructed to start lifting the object when a light comes on, and then performs five further actions: "touch the object", "apply force to the object", "lift the object", "put the object back in place", and "release the object".

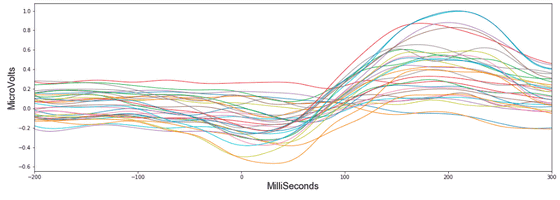

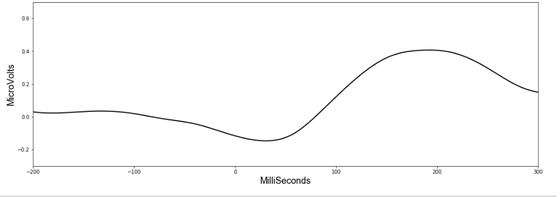

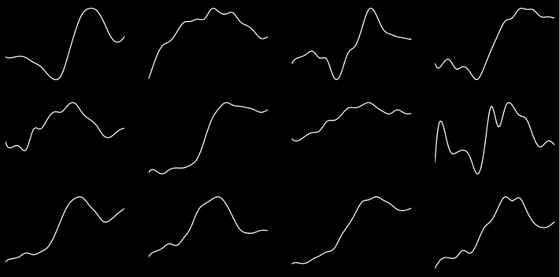

Among them, the graph below shows the changes in brain waves at the moment the subject starts to lift the object when the light turns on. The 32 lines show the values measured at each of the 32 measurement points, covering the period from 200 ms before the hand starts to move to 300 ms after.

The average of these 32 traces is as follows.

However, for the other five movements the pattern of change in the brain waves is less clear, and it was apparently difficult to predict which action had actually been performed just by looking at the waveform. Each graph is roughly mountain-shaped overall, but the curves differ from one another, so it was hard to say "this shape means this action".
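The windowing and channel averaging described above can be sketched roughly as follows. This is only an illustration on synthetic noise, not FloydHub's code: the 500 Hz sampling rate, the `extract_epoch` helper, and the onset sample index are assumptions introduced here, while the 32 channels and the 200 ms / 300 ms window come from the article.

```python
import numpy as np

SFREQ_HZ = 500               # assumed sampling rate; not stated in the article
N_CHANNELS = 32              # from the article: 32 measurement points
PRE_MS, POST_MS = 200, 300   # from the article: 200 ms before to 300 ms after


def extract_epoch(eeg, onset, sfreq=SFREQ_HZ, pre_ms=PRE_MS, post_ms=POST_MS):
    """Return the (channels, samples) window around the onset sample.

    Hypothetical helper for illustration only.
    """
    pre = int(round(pre_ms * sfreq / 1000))
    post = int(round(post_ms * sfreq / 1000))
    if onset - pre < 0 or onset + post > eeg.shape[1]:
        raise ValueError("window extends past the recording")
    return eeg[:, onset - pre:onset + post]


# Synthetic stand-in for a real recording: 10 s of noise on 32 channels.
rng = np.random.default_rng(0)
eeg = rng.normal(size=(N_CHANNELS, 10 * SFREQ_HZ))
onset_sample = 2500  # hypothetical sample at which the hand starts to move

epoch = extract_epoch(eeg, onset_sample)   # one trace per channel
channel_mean = epoch.mean(axis=0)          # average across the 32 channels
# Time axis in milliseconds relative to movement onset.
times_ms = np.arange(epoch.shape[1]) * 1000 / SFREQ_HZ - PRE_MS
```

Plotting `epoch` against `times_ms` would give one line per channel, and `channel_mean` would give the single averaged curve.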

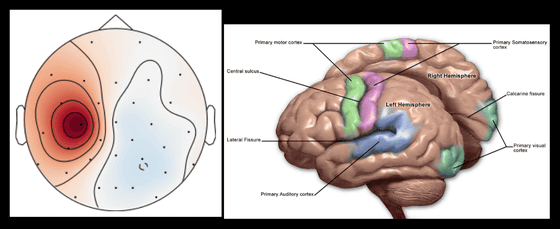

Separately, the Python library MNE can be used to visualize which part of the brain the change in brain waves occurs in at the moment of touching or lifting the object. For example, when touching an object in order to lift it, the most active region is the left side of the brain (the red area on the left of the figure below), which is where the motor cortex is located (the green area on the right of the figure below).

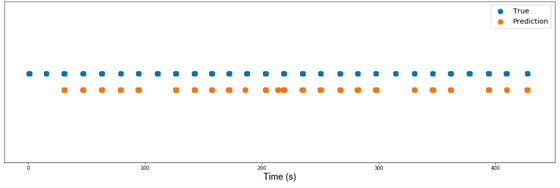

The FloydHub author trained a convolutional neural network on this data using the deep learning library PyTorch and succeeded in building an accurate model with an AUC of 94%. The image below illustrates this clearly. In the data set the subject lifts the object 28 times; the blue dots mark when the subject actually performed the motion, and the orange dots mark when the model predicted that the action had been performed. The model correctly predicted 23 of the 28 movements.

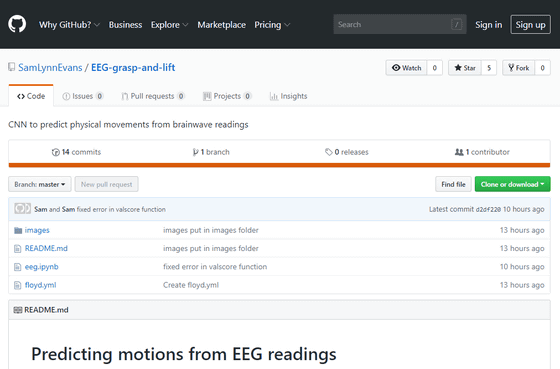
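To give a feel for what such a network might look like, here is a minimal PyTorch sketch. It is not the architecture from the FloydHub post: the class name `EEGEventCNN`, the layer sizes, the 256-sample window, and the choice of one independent sigmoid output per action are all assumptions made for illustration. Only the 32 input channels and the six actions (the start of the lift plus the five that follow) come from the article.

```python
import torch
from torch import nn

N_CHANNELS = 32   # from the article: 32 measurement points
N_EVENTS = 6      # start of the lift plus the five later actions
WINDOW = 256      # hypothetical window length in samples


class EEGEventCNN(nn.Module):
    """Small 1D CNN over time; an illustrative layout, not FloydHub's model."""

    def __init__(self, n_channels=N_CHANNELS, n_events=N_EVENTS):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv1d(n_channels, 64, kernel_size=7, padding=3),
            nn.ReLU(),
            nn.MaxPool1d(4),
            nn.Conv1d(64, 64, kernel_size=7, padding=3),
            nn.ReLU(),
            nn.AdaptiveAvgPool1d(1),   # collapse the time axis
        )
        self.classifier = nn.Linear(64, n_events)

    def forward(self, x):
        # x has shape (batch, channels, time)
        h = self.features(x).squeeze(-1)   # (batch, 64)
        return self.classifier(h)          # raw logits, one per action


torch.manual_seed(0)
model = EEGEventCNN()

# Random tensors stand in for real EEG windows and their labels.
batch = torch.randn(8, N_CHANNELS, WINDOW)
targets = torch.randint(0, 2, (8, N_EVENTS)).float()

logits = model(batch)
probs = torch.sigmoid(logits)                      # per-action probabilities
loss = nn.BCEWithLogitsLoss()(logits, targets)     # assumed multi-label loss
loss.backward()                                    # one backward pass
```

In a real experiment the random tensors would be replaced by windows cut from the recordings, and an optimizer step would follow each backward pass.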

In this way, deep learning makes it relatively easy to examine how brain waves relate to particular actions. The model created by FloydHub is published on the following GitHub page, so take a look if you want to experiment with the data and the model.

GitHub - SamLynnEvans/EEG-grasp-and-lift: CNN to predict physical movements from brainwave readings

When people interested in neuroscience use deep learning to identify actual human behavior from brain waves, one problem is that we do not know how the neural network arrives at its results. FloydHub, however, argues that "there is no need to always know how deep learning works," writing that this is the same as how people used the wheel before understanding the laws of physics and used electricity before knowing about electrons.
