How should we resolve the "ethical dilemma" of whose life, pedestrian or passenger, a self-driving car should prioritize in an accident?

If self-driving cars are put into practical use, accidents caused by "human error" are expected to disappear and the number of people who die in traffic accidents to fall drastically. Meanwhile, as self-driving technology advances, debate is growing over very difficult ethical questions such as "In the unlikely event that an accident cannot be avoided, is a self-driving car allowed to prioritize its passengers' lives over pedestrians' lives?" Professor Iyad Rahwan of the MIT Media Lab explains in a TED talk how we should resolve the "ethical dilemma" of whose life a self-driving car should prioritize.

The Social Dilemma Of Driverless Cars | Iyad Rahwan | TEDxCambridge - YouTube

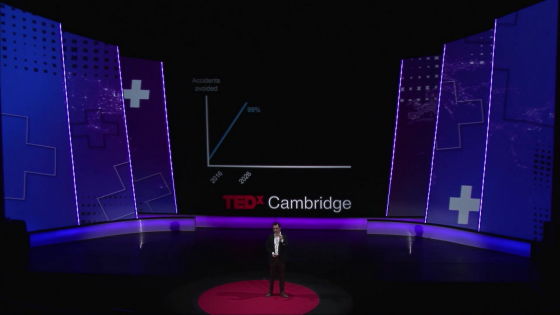

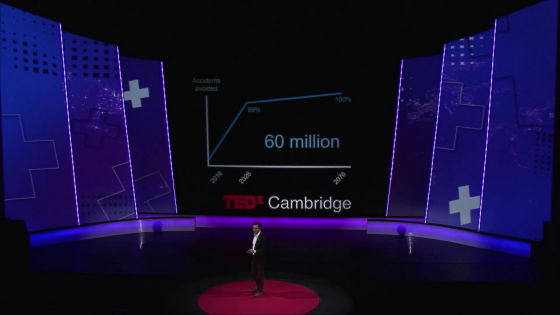

In the United States alone, 35,000 people died in car accidents in 2015, and worldwide 1.2 million people die in car accidents every year. The answer to "How can we reduce the number of people who die in accidents by more than 90%?" is "eliminate human error", and this is one of the major reasons self-driving cars are being developed so rapidly.

Let's consider a self-driving car in 2030.

You are sitting in the back seat, watching a video of this classic TED talk.

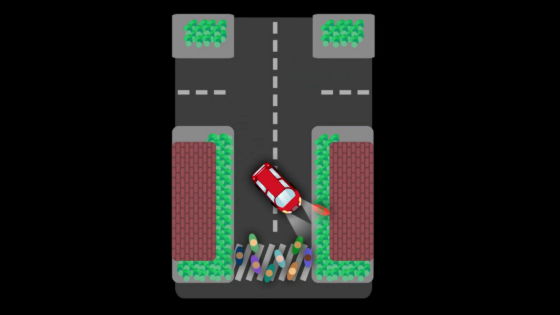

Now suppose a sudden mechanical failure occurs and the self-driving car cannot stop.

If there are many pedestrians ahead, the car may plow into the group and many people will die.

To spare the many pedestrians, the car could instead swerve and hit a person at the side of the road. This would save more lives.

There is another way.

The self-driving car can also be stopped by crashing it into a wall where no one is standing. In this case, only the passenger in the car is killed.

"When someone's death is unavoidable, whose life should be given priority?" is an ethical question that has been debated for decades.

It is easy to look away by saying "This scenario is not realistic", but that will not solve the problem.

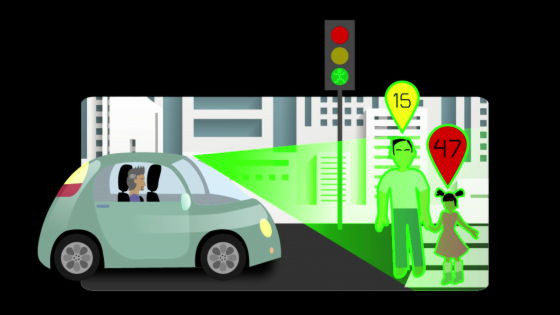

One approach is to have the car calculate in an instant how many people would die, and with what probability, for each direction it could take, and decide accordingly.

Deciding "Do you protect the passenger, or the pedestrian?" would require very complicated calculations. And it would still involve a "trade-off": choosing one means giving up the other.

There is also the view that advances in technology will solve the problem: since technology that causes no accidents at all will eventually appear, we should simply wait for it.

Technology that reduces accidents by 90% is not so difficult; accidents can be reduced by 90% within 10 years. However, eliminating the remaining 10% is incomparably harder than that initial drastic reduction.

According to scientists' expectations, it will take 50 years to get from 90% to 100%. If deaths continued at the current rate in the meantime, 60 million people would lose their lives by the day accidents reach zero.
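The 60 million figure follows from simple arithmetic. The sketch below reproduces it from the 1.2 million annual deaths cited earlier, and adds one hypothetical comparison that is not a figure from the talk: the toll over the same 50 years if a 90% reduction were in effect throughout. The function and constant names are illustrative.

```python
ANNUAL_ROAD_DEATHS = 1_200_000  # worldwide deaths per year, as cited above
YEARS_TO_ZERO = 50              # years assumed to get from 90% to 100%


def cumulative_deaths(annual_deaths: int, years: int, reduction_percent: int = 0) -> int:
    """Total deaths over `years` when the annual toll is cut by `reduction_percent`."""
    remaining_percent = 100 - reduction_percent
    return annual_deaths * remaining_percent * years // 100


# Deaths if the current rate continued for the whole period.
at_current_rate = cumulative_deaths(ANNUAL_ROAD_DEATHS, YEARS_TO_ZERO)

# Hypothetical comparison: the 90% reduction applies throughout the period.
with_90_percent_cut = cumulative_deaths(ANNUAL_ROAD_DEATHS, YEARS_TO_ZERO, 90)

print(f"{at_current_rate:,}")      # 60,000,000
print(f"{with_90_percent_cut:,}")  # 6,000,000
```

Under these assumptions, the difference between the two totals is the cost of waiting for a technology that causes no accidents at all.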

Various solutions have been proposed on social media and elsewhere.

One idea is that "the car should somehow slip neatly through the crowd". Of course it would be wonderful if this could be done, but an impossible idea is of no use.

One blogger's idea was to install an "emergency escape button" so that the passenger could escape by parachute when an accident occurs.

Assuming that "a car cannot escape trade-offs on the road", how should we think about these trade-offs, and how should we decide? The final decision will be set by rules and laws, but these must reflect our social values.

In considering this problem, the ideas of two famous philosophers are helpful.

Jeremy Bentham held that "the greatest happiness of the greatest number" is the right course. On this view, the car should choose whichever option claims the fewest victims: hitting the person at the side of the road or crashing into the wall.

In contrast, Immanuel Kant advocated deontology (the theory of duty), which holds that universal moral rules must be obeyed unconditionally. On this view, the rule "you shall not kill" means the car must not take an action that deliberately kills someone, even if more people die as a result.
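Bentham's criterion lends itself to a small calculation. The sketch below is a minimal illustration, not the talk's method: it assigns made-up group sizes and death probabilities to the three options in the scenario and picks the one with the fewest expected deaths. The `Group` class, the `expected_deaths` function, and every number are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Group:
    """A group of people put at risk by one option (all values hypothetical)."""
    people: int               # number of people in the group
    death_probability: float  # assumed chance that each of them dies


def expected_deaths(groups: list[Group]) -> float:
    """Expected number of deaths: sum of group size times death probability."""
    return sum(g.people * g.death_probability for g in groups)


# Made-up numbers for the three options described in the scenario.
options = {
    "continue straight": [Group(people=10, death_probability=0.8)],
    "swerve to roadside": [Group(people=1, death_probability=0.9)],
    "crash into wall": [Group(people=1, death_probability=0.7)],
}

scores = {name: expected_deaths(groups) for name, groups in options.items()}

# The "fewest victims" rule: choose the option with the lowest expected deaths.
choice = min(scores, key=scores.get)
print(choice)
```

With these made-up numbers the rule selects the wall, the option that sacrifices the passenger. A duty-based rule is not a minimization of this kind, so it cannot be expressed by changing the numbers in this sketch.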

What do you think?

Most people side with Bentham. They believe the right solution is to save as many lives as possible.

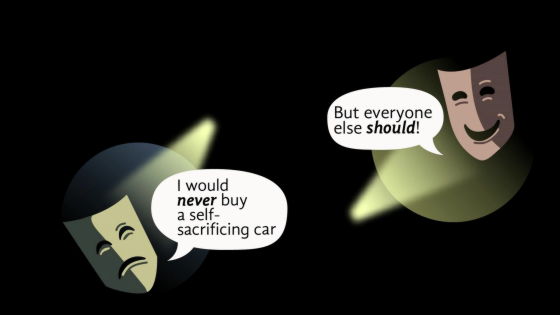

However, asked "Would you buy a self-driving car that might sacrifice you to protect other people's lives?", they answer "no".

In other words: "I will not buy such a self-driving car myself, but I would very much like other people to buy one so that traffic safety improves."

Such a situation is known as a "social dilemma", and it is of the same kind as what the British scholar William Forster Lloyd discussed in the 1800s as the "tragedy of the commons".

Consider a commons (a shared pasture) where everyone grazes their sheep. If the number of sheep is small, everyone is happy.

Suppose one person secretly adds one more sheep to the commons. This person gains a little more, and because it hardly affects anyone else, it is not a big problem.

However, if everyone does the same and adds one more sheep to the commons, the pasture runs out and the sheep are wiped out. This outcome is a nightmare for everyone.

The same thing happens today, for example in overfishing and greenhouse gas emissions. And the same tragedy of the commons can occur with self-driving cars. In their case, however, note that the structure is slightly different: it is not the user who buys the car but the manufacturer who programs it, so the user does not need to make the decision.

And the manufacturer will program the car so that it "thinks for itself and draws its own conclusions".

In terms of the tragedy of the commons, it is like a digital sheep that thinks and decides for itself. The digital sheep makes the decisions, and the owner does not know what the sheep is thinking.

Let me call this the "tragedy of the algorithmic commons". It is a new type of problem that has arisen in our time.

Until now, we have resolved this kind of social dilemma through rules (regulation). Governments and citizens come together to discuss and decide which rules are best, and this is how good outcomes for society have been maintained.

But will people accept a rule that says a self-driving car, when an accident is unavoidable, must sacrifice its own passenger to save many lives?

The first likely response is opposition to the rule. The next is the opinion that "I would not buy a self-driving car built under such a regulation".

In other words, rules created for the sake of road safety would lead more people to choose not to buy or use self-driving cars, creating the ironic situation in which the intended safety is not achieved, even though a self-driving car is safer than a human driver. I do not have a method for solving this problem. However, I believe that recognizing this point is the starting point for addressing the social dilemma and trade-off problems surrounding self-driving cars.

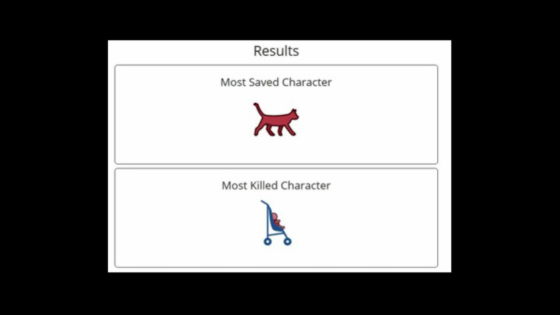

The excellent students I teach have built a survey website. On this site, people around the world judge what a self-driving car should do in scenarios that vary conditions such as the number and attributes of the pedestrians.

More than 5 million responses have already been collected from over 1 million people around the world. These opinions will be very useful for drawing up an initial blueprint for resolving the trade-off problem.

Even more important, working through such a survey helps people understand how difficult the choices in these trade-offs are, and that those who make the rules are forced to make impossible choices. In the end, what matters is that society better understands the problems that the rules must resolve.

The opinions of many people are aggregated and become rules. Here is an example: who should be saved with top priority, and who should die?

One person's answers indicated that the "cat" should be saved and the "baby" should die. (Laughter) This person may or may not also think that passengers should take priority over pedestrians. Of course, I am not saying this view is common, so please rest assured.

To return to the main thread: I started with a question about an ethical dilemma, namely "What decision should a self-driving car make in a particular situation?"

However, this is in fact a "social dilemma": a question of what outcome society as a whole considers good.

In the 1940s, science fiction writer Isaac Asimov set out in his fiction the "Three Laws of Robotics" that robots should follow.

These are "a robot may not harm a human being", "a robot must obey orders given to it by human beings", and "a robot must protect its own existence", with priority in that order.

Asimov later added a zeroth law that takes precedence over these three principles.

It is the overarching principle that "a robot may not harm humanity as a whole".

I do not know how this zeroth law applies in the context of the trade-offs faced by self-driving cars. However, I think it is important to understand that the problem of self-driving cars is not only a technological problem but also a problem of social cooperation. And I believe that understanding this is at least a start toward steering these very difficult problems in the right direction.
