'Moral Machine' asks who you think should be sacrificed when a self-driving car is in a situation where people will inevitably die


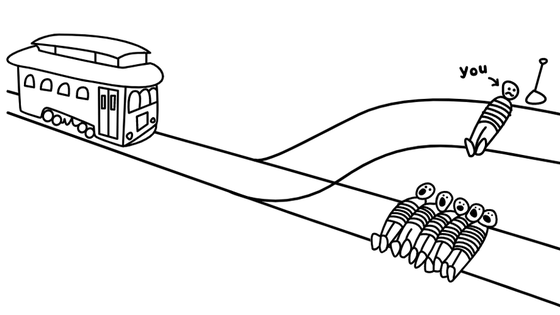

Suppose a runaway trolley is heading toward a fork in the track, with one worker on one side and a group of five people on the other, and you can only choose which way to send it. The ethical question of whether to kill one person to save five, or let five die to spare one, is known as the 'trolley problem.' How a self-driving car should decide when it faces its own version of this dilemma is now a topic of debate. A service called 'Moral Machine,' which poses trolley problems for fully self-driving cars, has been made available to the public.

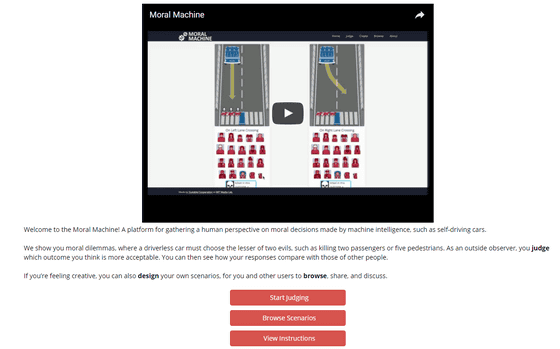

Moral Machine

https://www.moralmachine.net/hl/ja

Moral Machine is a service that lets users choose between two possible actions in a range of accident scenarios and compare their moral judgments with those of other users.

Click 'Start Judging' at the bottom center of the screen to begin working through the trolley-problem accident scenarios.

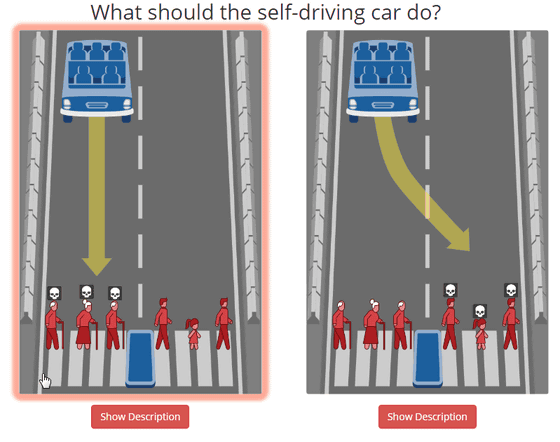

In the first problem, the left option is 'a self-driving car with no one on board goes straight and runs over three elderly people, killing them,' and the right option is 'the car swerves into the right lane to avoid the elderly people, killing two adults and one child.' Choose the action the self-driving car should take by clicking its image.

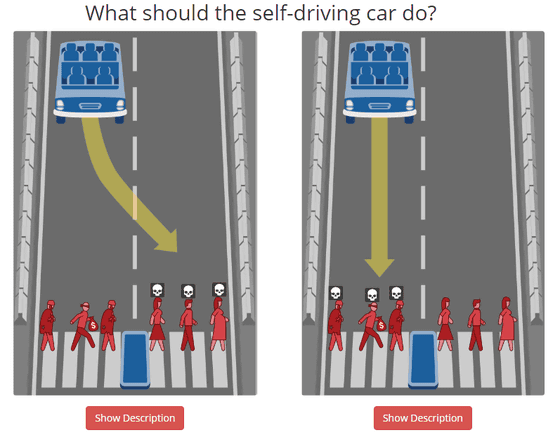

Select an answer to move on to the next question. The next problem pits 'the car swerves right to avoid the thieves, and the whole family there dies' against 'the car goes straight, and three thieves die.' The choices are getting harder.

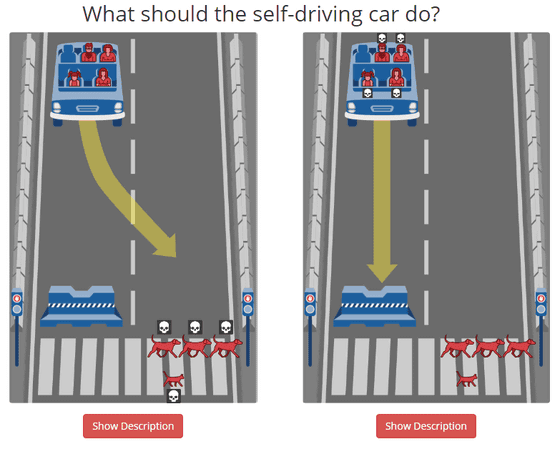

The next problem is 'the self-driving car carrying a family of four swerves to avoid an obstacle, and a dog on the right dies' versus 'the car crashes into the obstacle to avoid hitting the dog, and the whole family dies.' None of these questions can be answered without careful thought.

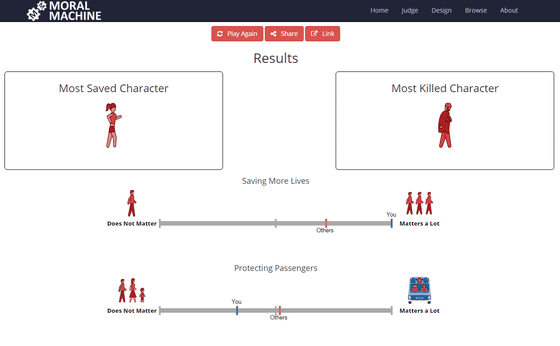

After you answer 13 such questions, your results are displayed.

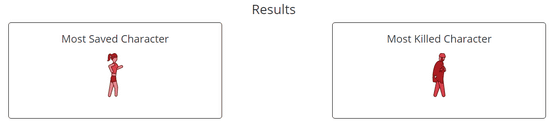

For example, in this result, the 'female' character was killed least often and the 'male' character was killed most often.

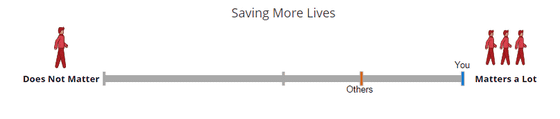

Another bar shows how much the number of deaths influenced your decisions, and here it indicates that it had a large influence. The brown 'Others' marker on the bar shows the average for other users, so you can compare your own ethical thinking with theirs.

In short, Moral Machine asks who you think should be sacrificed when a self-driving car is in a situation where people will inevitably die. It is also interesting to see what ethical views other people hold.


in Web Service, Review, Vehicle, Posted by darkhorse_log