Researchers point out that forcing a choice between options in the "trolley problem" does not reveal what people really want

As the rapid development of artificial intelligence makes self-driving cars a realistic prospect, one problem that has become an issue is the ``

Life and death decisions of autonomous vehicles | Nature

http://dx.doi.org/10.1038/s41586-020-1987-4

The Moral Machine reexamined: Forced-choice testing does not reveal true wishes

https://techxplore.com/news/2020-03-moral-machine-reexamined-forced-choice-reveal.html

The "trolley problem" is a thought experiment proposed by philosopher Philippa Foot: "Five workers are on the track ahead of a runaway tram. Throwing a switch along the way can save the five, but another worker is on the other track. Is it permissible to sacrifice one to save five?"

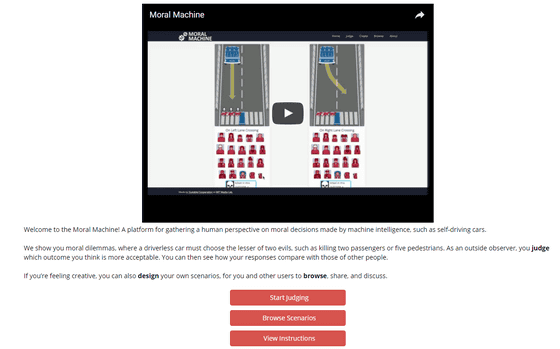

The challenge raised by the evolution of artificial intelligence is how machines should make decisions on ethical issues of this kind. In an attempt to quantify social expectations about the ethical principles that should guide machine behavior, a research team at the Massachusetts Institute of Technology used an online experimental platform called "Moral Machine" to collect a total of 40 million decisions from 233 countries and regions.

"Moral Machine" asks who should be sacrificed when a self-driving car will unavoidably kill someone - GIGAZINE

The results revealed tendencies such as "run over animals rather than people," "run over the old rather than the young," and "run over men rather than women."

The Moral Machine experiment | Nature

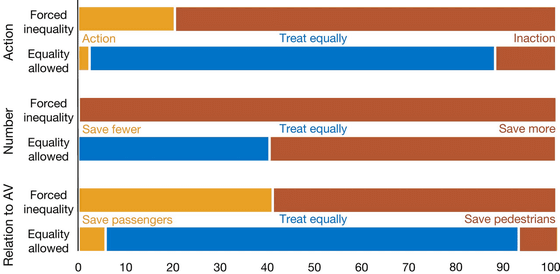

Now, Yochanan Bigman and Kurt Gray of the University of North Carolina at Chapel Hill argue that the results of the Massachusetts Institute of Technology experiment were distorted because participants were not given a third option: "treat everyone equally."

Bigman and Gray ran a test similar to "Moral Machine" but added a third option expressing no preference about who is hit and who is saved. As a result, many participants chose the third option on most questions.

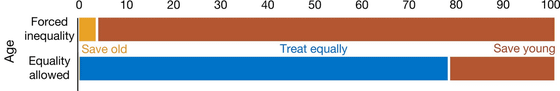

For example, the graph shows the results for a question asking whether to run over a young person to save an old person or run over an old person to save a young person. It gives the selection rates for the two-choice case and, at the bottom, for the three-choice case that adds the "treat equally" option. With two choices, "save the young" was chosen 90% or more of the time, but with three choices it dropped to about 20%, and "treat equally" accounted for nearly 80%.

On the question of whether to save the few or the many, most people chose "save the many" (center graph), but as an overall tendency, "treat equally" was chosen in many cases.

Based on this, the research team states that "questions that force a choice do not reveal people's true wishes."


in Note, Posted by logc_nt