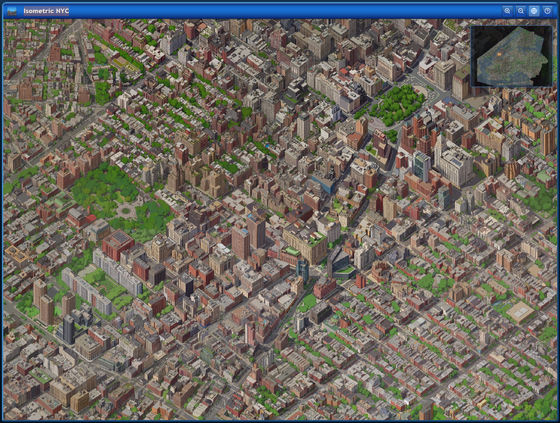

'Isometric NYC' creates a bird's-eye view of New York using pixel art

'Isometric NYC' is a pixel-art isometric map of New York City.

Isometric NYC

https://cannoneyed.com/isometric-nyc/


https://cannoneyed.com/projects/isometric-nyc

This is what Isometric NYC looks like. You can pan the view by dragging with the mouse and zoom in and out with the mouse wheel.
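Drag-to-pan and wheel-to-zoom are usually implemented as a simple viewport transform in map viewers. Below is a minimal sketch assuming a `screen = world × scale + offset` model, with zooming centered on the cursor (a common choice, assumed here). The `Viewport` class, its method names, and the zoom limits are hypothetical and are not taken from the Isometric NYC code.

```python
from dataclasses import dataclass


@dataclass
class Viewport:
    """Hypothetical viewport: maps world (map) coordinates to screen pixels.

    screen = world * scale + offset
    """
    offset_x: float = 0.0
    offset_y: float = 0.0
    scale: float = 1.0
    min_scale: float = 0.25  # hypothetical "zoomed all the way out" limit
    max_scale: float = 8.0   # hypothetical "zoomed all the way in" limit

    def to_screen(self, wx: float, wy: float) -> tuple[float, float]:
        return wx * self.scale + self.offset_x, wy * self.scale + self.offset_y

    def to_world(self, sx: float, sy: float) -> tuple[float, float]:
        return (sx - self.offset_x) / self.scale, (sy - self.offset_y) / self.scale

    def drag(self, dx: float, dy: float) -> None:
        """Pan: shift the map by the mouse drag delta, in screen pixels."""
        self.offset_x += dx
        self.offset_y += dy

    def wheel_zoom(self, factor: float, cx: float, cy: float) -> None:
        """Zoom by `factor`, keeping the world point under the cursor (cx, cy) fixed."""
        wx, wy = self.to_world(cx, cy)
        # Clamp the zoom level to the allowed range.
        self.scale = min(self.max_scale, max(self.min_scale, self.scale * factor))
        # Recompute the offset so that (wx, wy) still lands at (cx, cy) on screen.
        self.offset_x = cx - wx * self.scale
        self.offset_y = cy - wy * self.scale
```

For example, after `v = Viewport(); v.wheel_zoom(2.0, 100, 50)`, the map point that was under the cursor at screen position (100, 50) is still there, while everything else has spread out around it. Clamping the scale is what produces the fixed "maximum" zoom-in and zoom-out levels a viewer like this has.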

Zoomed all the way out, it looks like this.

Zoomed all the way in, it looks like this. While exploring, I happened upon the Statue of Liberty.

'Isometric NYC' was created by Coenen, a New York-based engineer and artist.

First, Coenen used Nano Banana to turn map images of New York into pixel art. He ultimately sourced the map imagery from the Google Maps Tile API, which is said to excel because it delivers precise geometry and textures through a single renderer. Below is an example of a 3D map from the Google Maps Tile API converted into pixel art with Nano Banana Pro.

However, the pixel art generated with Nano Banana was inconsistent from image to image. Nano Banana was also slow, and generating the number of images the project needed looked prohibitively expensive.

Coenen therefore switched to Qwen/Image-Edit, which is smaller, faster, and cheaper. He fine-tuned the model to produce consistent pixel art. The training took about four hours and cost about $12 (about 1,900 yen), which was well within his budget.

Coenen said, 'Current AI is good at generating text and code, but image generation still has a long way to go by comparison. When you have AI build software, it can run the code, read stack traces, check for errors and correct itself. It has a tight feedback loop and understands the system it is building. Image models have not reached that level yet. If you manage human artists, you can tell them to "make the trees in this particular style," and they can do it. Image models can do that too, but not reliably. Even smart models like Gemini 3 Pro cannot reliably look at the output and determine that "there is a seam here" or "the texture of this tree is wrong." Because image models cannot reliably "see" their failure modes, the quality assurance process could not be automated. And because the model could not understand its own mistakes, we had no choice but to give up on fully automatic generation.'
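Coenen's point is that fully automatic generation needs a check that can catch failures such as seams between adjacent images, followed by a retry. The sketch below shows what that generate, check, and retry loop looks like with a deliberately crude, non-AI seam check. It compares the boundary pixel columns of two neighboring tiles. This is not Coenen's method. The function names, the grayscale list-of-rows tile format, and the threshold of 20 are all hypothetical.

```python
from typing import Callable, Optional

Tile = list[list[int]]  # hypothetical format: rows of grayscale values 0-255


def seam_score(left: Tile, right: Tile) -> float:
    """Mean absolute difference between the last column of `left`
    and the first column of `right` (tiles must have equal height)."""
    if len(left) != len(right) or not left:
        raise ValueError("tiles must be non-empty and the same height")
    diffs = [abs(l_row[-1] - r_row[0]) for l_row, r_row in zip(left, right)]
    return sum(diffs) / len(diffs)


def has_seam(left: Tile, right: Tile, threshold: float = 20.0) -> bool:
    """Flag a visible seam when boundary pixels disagree by more than `threshold` on average."""
    return seam_score(left, right) > threshold


def generate_with_retries(
    generate: Callable[[int], Tile],  # hypothetical generator, called with the attempt number
    neighbor: Tile,                   # already-accepted tile to the left
    max_attempts: int = 3,
    threshold: float = 20.0,
) -> Optional[Tile]:
    """Regenerate until the new tile joins `neighbor` cleanly.

    Returns None if every attempt fails, leaving the tile for human review.
    """
    for attempt in range(max_attempts):
        tile = generate(attempt)
        if not has_seam(neighbor, tile, threshold):
            return tile
    return None
```

A pixel-difference heuristic like this catches only abrupt discontinuities along an edge. It cannot judge whether "the texture of this tree is wrong," and that kind of visual judgment is exactly what Coenen says image models could not provide reliably.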


in AI, Web Service, Posted by logu_ii