This is what happens when you develop a minesweeper using GPT-5.2-Codex, Claude Code, Gemini CLI, and Mistral Vibe

Advances in AI have made it possible to write software by giving natural-language instructions instead of coding a program from scratch. Technology media outlet Ars Technica published the results of a test in which four coding agents, including OpenAI's Codex, were each asked to build Minesweeper.

We asked four AI coding agents to rebuild Minesweeper—the results were explosive - Ars Technica

https://arstechnica.com/ai/2025/12/the-ars-technica-ai-coding-agent-test-minesweeper-edition/

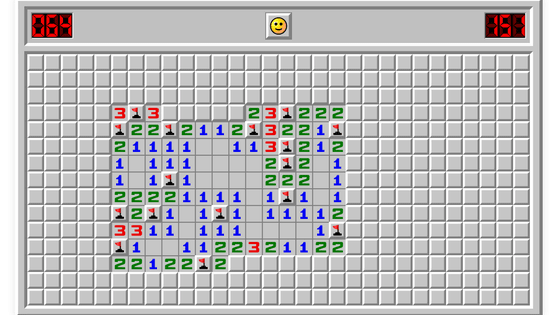

Ars Technica's prompt asked the agents to 'create a fully functional web version of Minesweeper with sound effects, recreating the standard gameplay, and implementing surprising and fun gameplay features.' The game also needed to be mobile and touchscreen compatible. The four agents used in the test were OpenAI Codex, Anthropic's Claude Code (Opus 4.5), Google Gemini CLI, and Mistral Vibe.

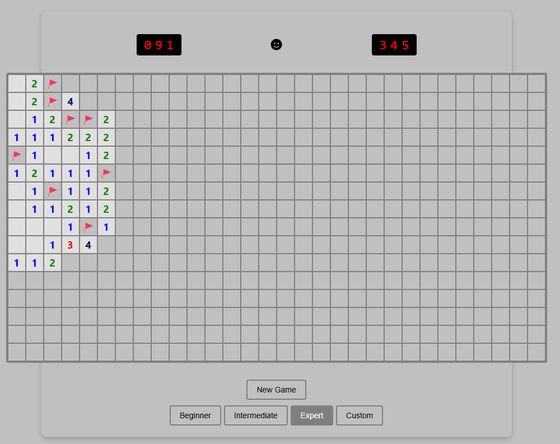

Ars Technica gave the Minesweeper built by Mistral Vibe an overall rating of 4 out of 10. The game's controls were poor, particularly the lack of 'chording,' a standard feature that reveals all the remaining tiles around a numbered tile in one go once the matching number of flags has been placed next to it. It also lacked sound effects, even though the prompt asked for them. As for the expected fun new feature, the only one included was a rainbow-colored background pattern added to the grid upon game completion.
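For readers unfamiliar with chording, the rule can be sketched in a few lines. This is a minimal illustration, not code from any of the four agents: the function names `neighbors` and `chord`, the set-based board representation, and the sample 3x3 board are all assumptions made for this example.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]  # (row, column); a hypothetical representation


def neighbors(cell: Cell, rows: int, cols: int) -> List[Cell]:
    """Return the up-to-eight cells surrounding `cell` that lie on the board."""
    r, c = cell
    return [
        (r + dr, c + dc)
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0) and 0 <= r + dr < rows and 0 <= c + dc < cols
    ]


def chord(
    cell: Cell,
    counts: List[List[int]],
    revealed: Set[Cell],
    flagged: Set[Cell],
) -> Set[Cell]:
    """Return the cells a chord on `cell` would open (empty set if not allowed).

    `counts[r][c]` is the number of mines adjacent to (r, c).
    A chord is only allowed on an already revealed numbered tile whose
    number equals the count of flags placed around it.
    """
    rows, cols = len(counts), len(counts[0])
    number = counts[cell[0]][cell[1]]
    if cell not in revealed or number == 0:
        return set()
    around = neighbors(cell, rows, cols)
    if sum(1 for n in around if n in flagged) != number:
        return set()
    # Open every surrounding tile that is neither flagged nor already open.
    return {n for n in around if n not in flagged and n not in revealed}


# Sample board (assumed for illustration): a single mine at (0, 0).
counts = [
    [0, 1, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(sorted(chord((1, 1), counts, revealed={(1, 1)}, flagged={(0, 0)})))
```

In a full game the returned tiles would then be revealed as if clicked one by one, so a misplaced flag can still set off a mine.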

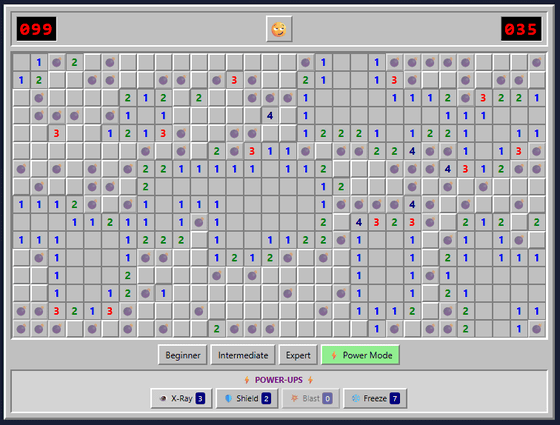

Claude Code received a score of 7 out of 10. Its version looked the most polished of the four, with emoji-based buttons and fitting sound effects giving it a professional finish. Its unique, fun addition was a power-up mode offering a variety of abilities, such as a shield that protects against a mistake, a blast that opens all the surrounding tiles at once, and a freeze that stops the timer. However, the game lacked chording, and power-ups were handed out so often that the game was no longer challenging. Generation was extremely fast, taking less than five minutes to complete the game.

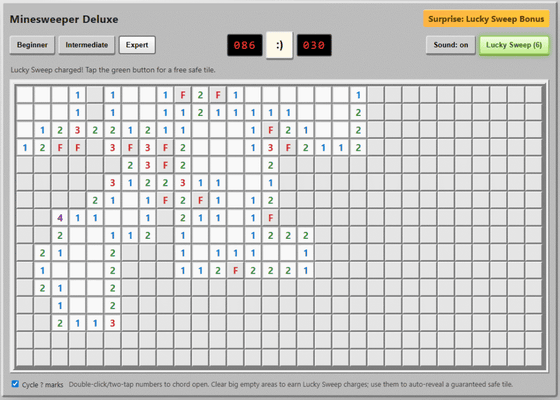

GPT-5.2-Codex received a score of 9 out of 10, the highest in the test. Its Minesweeper supported chording and also displayed on-screen instructions. On mobile, it let players place flags with a long tap. Its unique, fun addition was a bonus feature that automatically identifies safe tiles. However, Ars Technica noted that it took more than twice as long as Claude Code to generate working code, which it considered a major drawback.

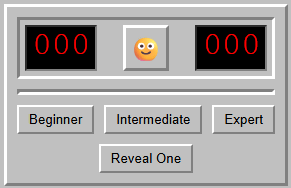

Google Gemini CLI produced a clickable framework but failed to create a playable game field, earning it a score of zero. Ars Technica noted that it spent too much time on generating sound effects and tended to take overly complex approaches to the instructions, requiring external libraries such as React and complicated dependencies. Generation was also extremely slow, taking about an hour per attempt, and even after additional prompts it was unable to create a working program.

Ars Technica concluded, 'An experienced programmer can undoubtedly achieve better results through interactive code editing with an agent. However, our results show that only some models are competent even with very short prompts for relatively simple tasks. This suggests that coding agents are best suited to serving as interactive tools to augment human skills, rather than replacing humans.'
