76% of scientists believe that 'we cannot develop artificial general intelligence by scaling up current AI.'

Companies like OpenAI and Google are working on 'artificial general intelligence' (AGI), a machine learning system that, like humans, can efficiently acquire new skills and adapt to unfamiliar situations. However, a new survey by the Association for the Advancement of Artificial Intelligence (AAAI) found that 76% of the 475 AI researchers polled consider it unlikely that AGI can be achieved by scaling up current large language models.

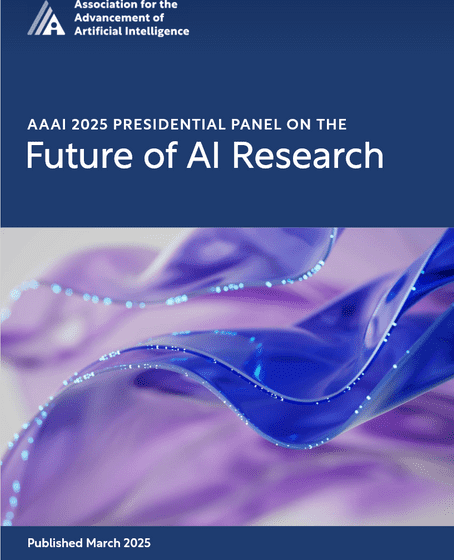

AAAI 2025 Presidential Panel on the Future of AI Research (PDF file)

Current AI models a 'dead end' for human-level intelligence, scientists agree | Live Science

https://www.livescience.com/technology/artificial-intelligence/current-ai-models-a-dead-end-for-human-level-intelligence-expert-survey-claims

In recent years, a great deal of money and energy has been poured into developing higher-performance AI. Venture capital investment in the generative AI industry worldwide reached $56 billion (about 8.44 trillion yen) in 2024 alone. In addition, many huge data center complexes for AI development are being built, and carbon emissions from data centers in the United States roughly tripled between 2018 and 2024.

Developing AI models also requires datasets to serve as training data, but it has been pointed out that large-scale models have already been trained on most of the data available to them, and that usable data may run out by 2028.

Finally, data for AI training is running out, and AI companies lacking datasets are likely to be forced to move from large, general-purpose LLMs to small, highly specialized models - GIGAZINE

Against this backdrop, the AAAI survey found that 76% of the 475 AI researchers polled said that 'the likelihood of developing AGI is low/very low even if we scale up current large language models.' Stuart Russell, a computer scientist at the University of California, Berkeley, pointed out that AI companies have already overinvested and have passed the point at which they could admit to having made a mistake.

Russell also criticized current methods, saying, 'I think the fundamental problem with current approaches to AI development is that they all involve training large feed-forward circuits, where data flows one way from input to output. Current AI architectures have fundamental limitations in how they represent concepts, so they can only generate fragmented representations even when trained with huge amounts of data.'

Furthermore, the release of DeepSeek R1, which performs on par with AI models such as OpenAI o1 at a fraction of the training cost incurred by major AI developers, overturned the assumption that ever more costly scaling is essential to improving AI. With cheap, high-performance models like this emerging, 79% of the AI researchers surveyed said that 'perceptions of AI capabilities do not match reality.' Russell said, 'There are many experts who believe that the current AI development race is a bubble, especially when high-performance models such as DeepSeek R1 are available as open source.'

Chinese AI development company 'DeepSeek' is rapidly emerging as a hot topic in the technology industry, and has also ranked first in the App Store's free app rankings - GIGAZINE

On the other hand, the success of DeepSeek shows that there is still room for engineering innovation in how AI systems are designed. Some experts say that probabilistic programming could bring us closer to AGI than current architectures can. They also say that progress toward AGI could come from combining reasoning models with other machine learning systems, which would generate more accurate responses than traditional AI models.

in Software, Posted by log1r_ut