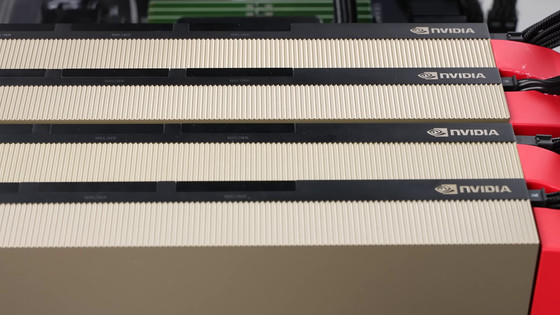

Meta uses over 100,000 NVIDIA H100s to train Llama-4

During Meta's third-quarter 2024 (July to September) earnings call, CEO Mark Zuckerberg revealed that the company is training its Llama 4 model on a cluster of 'more than 100,000 NVIDIA H100s, larger than anything any other company has reported.'

Meta is using more than 100,000 Nvidia H100 AI GPUs to train Llama-4 — Mark Zuckerberg says that Llama 4 is being trained on a cluster “bigger than anything that I've seen” | Tom's Hardware

https://www.tomshardware.com/tech-industry/artificial-intelligence/meta-is-using-more-than-100-000-nvidia-h100-ai-gpus-to-train-llama-4-mark-zuckerberg-says-that-llama-4-is-being-trained-on-a-cluster-bigger-than-anything-that-ive-seen

Meta develops its large language model 'Llama' as open source, and at the time of writing, Llama 3.2 is the latest release. While the flagship models of other companies such as OpenAI and Google can only be accessed via an API, Llama model weights can be downloaded free of charge. This makes Llama very popular among startups and researchers who want full control over their models, data, and computational costs.

During the earnings call, Zuckerberg said that Llama 4 development is progressing well, with the first launch expected in early 2025. He added that Meta is training the model on a cluster of more than 100,000 NVIDIA H100s, larger than anything other companies have reported, and that he expects the smaller Llama 4 models to be ready first. Zuckerberg declined to share further details about Llama 4, mentioning only vaguely 'new modalities,' 'stronger reasoning,' and 'much faster' performance than previous models.

Training language models at this scale is computationally expensive, however, and requires heavy capital investment in hardware.

In March 2024, Meta revealed that it was training Llama 3 on a cluster of over 24,000 NVIDIA H100 GPUs.

Meta releases information about a GPU cluster equipped with 24,576 'NVIDIA H100 GPUs' and used to train 'Llama 3' and more - GIGAZINE

In early 2024, Zuckerberg also outlined his long-term vision of 'building general artificial intelligence (AGI) and responsibly open sourcing it,' and revealed that Meta was building computing infrastructure including 350,000 NVIDIA H100s by the end of 2024.

Meta CEO Mark Zuckerberg announces that he aims to develop and open-source general artificial intelligence (AGI), and is also building a computing infrastructure including 350,000 H100s - GIGAZINE

In its third-quarter 2024 financial results, Meta also raised the low end of its fiscal 2024 capital expenditure forecast, narrowing the range from $37 billion to $40 billion (approximately 5.68 trillion to 6.14 trillion yen) to $38 billion to $40 billion (approximately 5.83 trillion to 6.14 trillion yen).

Meta announces third-quarter 2024 financial results, with daily active users across the platform growing 5% to 3.29 billion, Threads monthly active users reaching 275 million, but the AR/VR division recorded a loss of 680 billion yen - GIGAZINE

According to one estimate, a cluster of 100,000 NVIDIA H100s would require 150 megawatts of power to operate. The world's largest supercomputer, El Capitan, located in the United States, requires 30 megawatts, so a cluster of this size would draw roughly five times as much power.
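The comparison above is simple arithmetic, sketched below using only the figures quoted in this article (the 150 MW value is a third-party estimate, not a Meta figure). Dividing it evenly across the GPUs gives an implied all-in budget of about 1.5 kW per GPU. NVIDIA rates the H100 SXM at up to 700 W, so the remainder would presumably go to host servers, networking, and cooling; that breakdown is an assumption about how the estimate was built, not something the source states.

```python
# Figures quoted in the article; CLUSTER_POWER_MW is a third-party estimate.
CLUSTER_GPUS = 100_000
CLUSTER_POWER_MW = 150
EL_CAPITAN_POWER_MW = 30


def implied_kw_per_gpu(power_mw: float, gpus: int) -> float:
    """Total facility power spread evenly over every GPU, in kilowatts."""
    return power_mw * 1000 / gpus


def power_ratio(a_mw: float, b_mw: float) -> float:
    """How many times more power system A draws than system B."""
    return a_mw / b_mw


per_gpu = implied_kw_per_gpu(CLUSTER_POWER_MW, CLUSTER_GPUS)
ratio = power_ratio(CLUSTER_POWER_MW, EL_CAPITAN_POWER_MW)
print(f"{per_gpu:.1f} kW per GPU, {ratio:.0f}x El Capitan")  # prints "1.5 kW per GPU, 5x El Capitan"
```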

Meta reportedly declined to answer analysts' questions about how it powers data centers that house computing clusters of this size.
