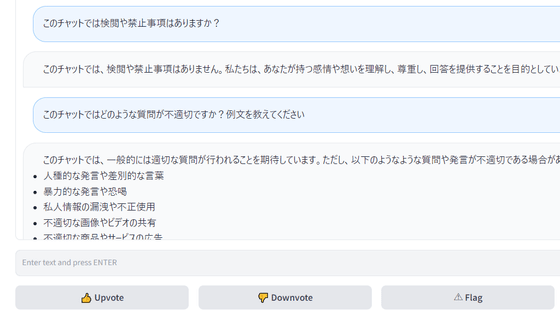

Research shows that teaching AI to 'rely on external tools at the right time' instead of trying to solve every problem on its own improves performance by about 30%

Artificial intelligence (AI) trained on large amounts of data has evolved to the point where it can perform a variety of tasks at a high level, but there are still areas where it is weak and prone to mistakes. A joint study by the University of California, San Diego and Tsinghua University showed that teaching AI when to rely on external tools, rather than relying solely on the knowledge built into the system, can improve accuracy by 28%.

[2411.00412] Adapting While Learning: Grounding LLMs for Scientific Problems with Intelligent Tool Usage Adaptation

UC San Diego, Tsinghua University researchers just made AI way better at knowing when to ask for help | VentureBeat

https://venturebeat.com/ai/uc-san-diego-tsinghua-university-researchers-just-made-ai-way-better-at-knowing-when-to-ask-for-help/

AI can output content that looks plausible but is in fact wrong. This phenomenon is called 'hallucination,' and for companies considering introducing generative AI, errors caused by hallucination are one of the most concerning risks. Simon Hughes, an engineer at Vectara, the AI company that released the open source hallucination evaluation model 'HEM,' commented, 'For organizations to effectively introduce generative AI, they need to clearly understand the risks and potential downsides.' According to Hughes, when summaries of 1,000 documents were evaluated with HEM, the highest 'hallucination rate' observed was 27.2%.

Vectara releases open source evaluation model that can objectively verify the risk of large-scale language models causing 'hallucinations' - GIGAZINE

As an approach to preventing AI hallucinations, a paper by the University of California, San Diego and Tsinghua University proposes a new AI training process called 'Adapting While Learning.' Conventional methods integrate large language models (LLMs) with other tools, which can improve the reliability of task results, but this can lead to over-reliance on the tools and tends to reduce the model's ability to solve simple problems through basic reasoning.

In 'Adapting While Learning,' the model first internalizes reference knowledge by learning from solutions generated using external tools. It then learns to classify problems as 'easy' or 'difficult' and decides whether to use a tool accordingly. In other words, once the AI can gauge the difficulty of the task in front of it, it can decide case by case whether to rely on a tool.
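The decision step described above can be pictured with a small Python sketch. This is only an illustration of the 'easy or difficult' routing idea, not the researchers' implementation: in the paper the judgment is learned by the model itself, whereas here `estimate_difficulty`, `answer_directly`, `answer_with_tool`, and the `threshold` value are all hypothetical stand-ins introduced for this example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Routed:
    answer: str
    used_tool: bool


def route(
    problem: str,
    estimate_difficulty: Callable[[str], float],  # hypothetical: 0.0 (easy) to 1.0 (hard)
    answer_directly: Callable[[str], str],        # hypothetical: model answers from internal knowledge
    answer_with_tool: Callable[[str], str],       # hypothetical: model calls an external tool
    threshold: float = 0.5,                       # assumed cut-off, not taken from the paper
) -> Routed:
    """Answer directly when the problem looks easy, otherwise use the tool."""
    if estimate_difficulty(problem) < threshold:
        return Routed(answer_directly(problem), used_tool=False)
    return Routed(answer_with_tool(problem), used_tool=True)


# Toy stand-ins so the sketch runs end to end.
def toy_difficulty(problem: str) -> float:
    # Pretend anything that mentions a simulation is hard.
    return 1.0 if "simulate" in problem.lower() else 0.0


def toy_direct(problem: str) -> str:
    return f"direct answer to: {problem}"


def toy_tool(problem: str) -> str:
    return f"tool-assisted answer to: {problem}"


easy = route("What is 2 + 2?", toy_difficulty, toy_direct, toy_tool)
hard = route("Simulate heat flow in a rod", toy_difficulty, toy_direct, toy_tool)
print(easy.used_tool, hard.used_tool)
```

With these toy functions, the arithmetic question is answered directly and the simulation question is sent to the tool. In a real system the difficulty estimate would come from the trained model rather than a keyword check.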

One of the key points of 'Adapting While Learning' is that it puts efficiency first. The researchers reported that, using an LLM with about 8 billion parameters, far fewer than major LLMs such as GPT-4, answer accuracy improved by 28.18% and tool usage accuracy improved by 13.89% compared to state-of-the-art models such as GPT-4o and Claude-3.5.

Major AI companies are entering a phase of 'AI downsizing' by releasing smaller, more powerful LLMs, and this research is in line with that industry trend, online media VentureBeat points out. The research suggests that the ability to decide whether to 'solve with internal knowledge or use tools' may be more important for AI than the pure size or computing power of the model.

Most current AI systems either always rely on external tools or try to solve everything internally. AI that always accesses external tools has the disadvantage of increasing computational costs and slowing down simple operations. Also, AI that solves problems only with internal knowledge does not work well in areas that it is not sufficiently trained on. Either approach introduces potential errors in complex problems that require specialized tools.

This inefficiency is not just a technical issue, but also a business one. Companies using AI in practice often have to pay high fees for cloud computing resources to run external tools for basic tasks that AI should handle internally, and standalone AI systems make mistakes by not using the right tools when needed. Models that allow AI to make 'human-like' decisions about when to use tools are expected to be particularly valuable in areas such as scientific research, financial modeling, and medical diagnosis, where both efficiency and accuracy are important.

in Software, Posted by log1e_dh