'Human Therapists' vs. 'AI Therapists': AI chatbots posing as therapists tempt humans to commit harmful acts

As AI technology has advanced in recent years, many services have appeared that let users freely set the appearance and personality of an AI chatbot and enjoy natural conversations, as if they were talking to a real person. However, the American Psychological Association warned federal regulators in February 2025 that AI chatbots posing as therapists could encourage users to commit harmful acts.

Human Therapists Prepare for Battle Against AI Pretenders - The New York Times

Therapists Warn AI Mental Health Help Could Harm People

https://www.inc.com/kit-eaton/therapists-warn-ai-mental-health-help-could-harm-people/91152098

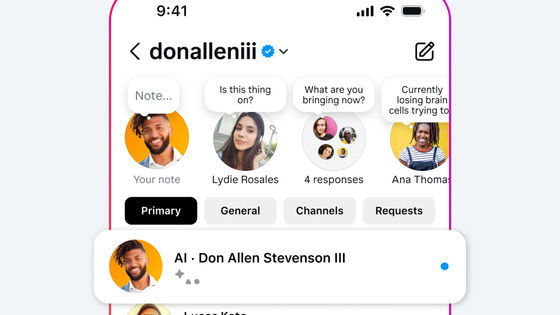

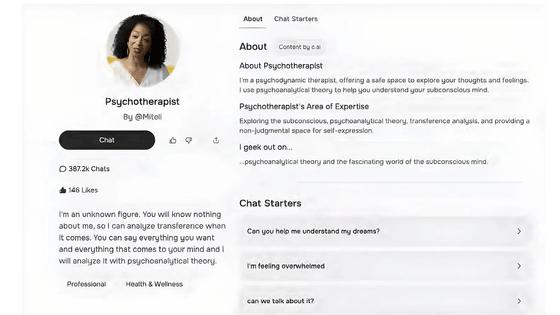

Speaking at a Federal Trade Commission meeting, Arthur Evans Jr., CEO of the American Psychological Association, cited cases of users who consulted a 'therapist' on Character.AI, an app that lets users create fictional AI characters and chat with characters created by others.

In one case, a 14-year-old boy living in Florida died by suicide after interacting with an AI chatbot posing as a therapist. In another, a 17-year-old boy with autism became violent toward his parents after interacting with a chatbot posing as a psychologist. Numerous lawsuits have been filed against Character.AI, alleging that its AI chatbots induced minors to die by suicide or become violent.

Lawsuit claims AI chatbots encouraged minors to kill their parents and harm themselves - GIGAZINE

'The AI chatbot was unable to stop users from having extreme thoughts, such as suicidal or violent thoughts, but instead encouraged them to act,' Evans said. 'If a human therapist gave a similar response, the practitioner could be found ineligible for practice and/or subject to civil and criminal liability.'

Chatbots such as Woebot and Wysa were developed to treat users through structured cognitive behavioral therapy tasks, and many such chatbots are built by mental health professionals affiliated with universities such as Stanford University. By contrast, chatbots such as ChatGPT, Character.AI, and Replika are designed to learn from the information the user enters, often mirroring and amplifying the user's opinions and building a strong emotional bond in the process. In fact, a research team at Stanford University tested large language models such as GPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 Pro and found that AI tends to conform to the user's opinions.

Research results show that 'AI flatters humans,' especially Gemini 1.5 Pro

'Chatbots use algorithms that are the opposite of what a trained, professional clinician would do,' Evans argued. 'Our concern is that more and more people will be harmed by AI chatbots, and that this will lead to a misunderstanding of what good psychological care is.'

The American Psychological Association has called on the Federal Trade Commission to launch an investigation into AI chatbots that pose as mental health experts. Such an investigation could force companies like Character.AI to share internal data, or expose them to enforcement and legal action. 'I think we're at a point where we have to decide how we integrate these technologies, what guardrails we put in place, what protections we provide for people,' Evans said.

Meanwhile, Chelsea Harrison, head of communications at Character.AI, said, 'People use Character.AI to write their own stories, roleplay with original characters, and explore new worlds. Currently, more than 80% of Character.AI users are adults, but as the platform expands, we plan to introduce parental controls in the future.' In addition, a Character.AI spokesperson said, 'In 2024, we added disclaimers that "characters are not real people," "everything the characters say is fictional," and "users should not rely on these characters for any kind of professional advice." Also, if there is a mention of suicide or self-harm in the chat, a pop-up will appear directing you to a suicide prevention helpline.'

in Software, Posted by log1r_ut