US police use AI-generated images of girls in sting operation

Police in the United States are reportedly using AI-generated images of girls in a sting operation to catch sex offenders. The investigation suggests that content moderation on the messaging service Snapchat may be inadequate.

Attorney General Raúl Torrez Files Lawsuit Against Snap, Inc. To Protect Children from Sextortion, Sexual Exploitation, and Other Harms - New Mexico Department of Justice

Cops lure pedophiles with AI pics of teen girl. Ethical triumph or new disaster? | Ars Technica

https://arstechnica.com/tech-policy/2024/09/cops-lure-pedophiles-with-ai-pics-of-teen-girl-ethical-triumph-or-new-disaster/

On September 5, 2024, the Attorney General of New Mexico filed a lawsuit against Snap, Inc., the company that operates Snapchat. According to the State of New Mexico, Snapchat's algorithm is designed in a way that favors those who want to distribute child sexual abuse material (CSAM), allowing accounts that deal in CSAM to find and connect with each other.

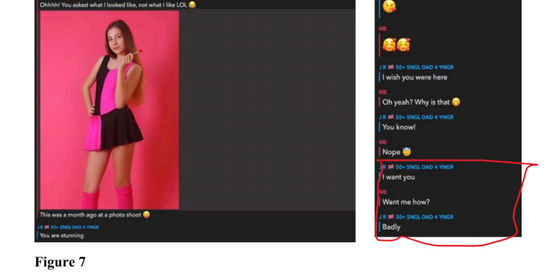

When New Mexico law enforcement officials conducted a sting operation by creating an account posing as a 14-year-old girl, they found that the account was quickly recommended to accounts with names like 'child.rape' and 'pedo_lover10,' even though it had made no effort to gain followers.

The decoy account then accepted a follow request from just one account, after which the recommendations got even worse and came to include a large number of adult users whose accounts contained CSAM or sought to exchange it. The technology news site Ars Technica reported that 'law enforcement agencies were using AI to create the accounts.'

The fact that the decoy account was shown CSAM even though it never used sexually explicit language suggests that Snapchat must be treating searches for other teen users as attempts to find CSAM, the department said.

The New Mexico Attorney General has called on Snapchat to address these algorithms, arguing that they facilitate the sharing of CSAM and the sexual exploitation of children.

According to the investigation, many children have actually been victimized as a result of interactions on Snapchat, and more than 10,000 CSAM-related records were found in 2023 alone. These included information about sexual assaults on minors under the age of 13. In one past case, a man who raped an 11-year-old girl he had met through Snapchat was sentenced to 18 years in prison.

Some welcomed the Department of Justice's announcement, saying that the investigation will move forward and the platform will become safer, while others expressed concern that AI was used to generate images of children. Carrie Goldberg, a lawyer who specializes in sex crimes, said, 'If AI-generated images depict actual child abuse, they can be harmful. Using AI to conduct sting operations is less problematic than using real images of children, and therefore more ethical, but it creates another problem: suspects can defend themselves by claiming that they were entrapped into committing a crime through an undercover operation.'

Posted by log1p_kr