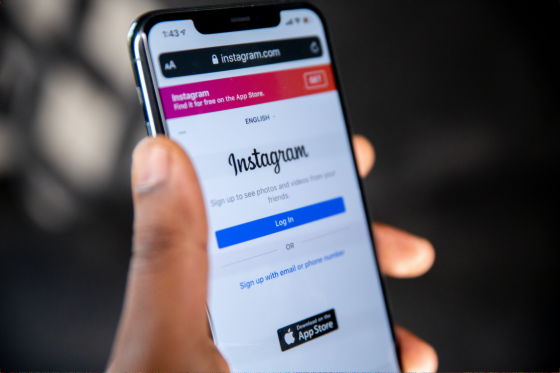

It turns out that Instagram's algorithm recommends a "network selling child sexual content" to users

A study by Stanford University has found that Instagram, the image and video sharing SNS operated by Meta, has helped expand a "pedophile network" that leads users to accounts selling child sexual content.

Addressing the distribution of illicit sexual content by minors online | FSI

Instagram Connects Vast Pedophile Network - WSJ

https://www.wsj.com/articles/instagram-vast-pedophile-network-4ab7189

Some pedophiles were selling and sharing illegal sexual content on the Internet long before the advent of social media. However, unlike file transfer services and forums used only by a select few, SNSs with interest-based content and user recommendation systems may actively encourage potential users to connect and become part of a "pedophile network."

Technical and legal barriers make it difficult to study networks on social media from outside the company, but experts at the Stanford University Internet Observatory and the University of Massachusetts Amherst Rescue Lab have successfully identified a large community that promotes illegal pedophilia content.

The researchers reported that they were able to find accounts selling child sexual content by searching Instagram for explicit hashtags such as "#pedohore" and "#preteensex." When they viewed one of the accounts in the pedophile network from a test account created for the study, Instagram displayed accounts that buy and sell sexual content involving minors as "recommended."

In one real case, Sarah Adams, who posts on Instagram about the dangers of child exploitation, said that after her followers reported a suspicious account using the term "incest toddlers," she viewed that account. A few days later, parents contacted Adams to report that when they looked at her Instagram profile, "incest toddlers" appeared among the recommendations.

Accounts that sell sexual content to pedophiles often claim that the children create the content themselves, and they use pseudonyms that incorporate sexual language. The Wall Street Journal reports that such accounts commonly post "menus" of sexual content on the SNS and then direct buyers to external sites where the content is bought and sold.

Using hashtags related to underage sex, the Internet Observatory identified 405 accounts selling sexual content that were labeled "self-generated" or claimed to be run by the children themselves. It also found that 112 of these seller accounts had a combined 22,000 followers.

In response to an inquiry from The Wall Street Journal, Meta acknowledged problems with its enforcement and said it had set up an internal task force. "Child exploitation is a horrific crime," the company said. "We are continuing to investigate ways to proactively prevent this behavior." Meta also said it has taken down 27 pedophile networks over the past two years, and that it is working to block hashtags that sexualize children and to stop its systems from recommending search terms that lead to sexual content.

According to Instagram's internal statistics, users encounter content related to child exploitation in fewer than 1 in 10,000 posts viewed. However, researchers point out that Instagram plays a particularly important role for pedophile networks compared with other major social networks.

An Internet Observatory survey found 128 accounts on Twitter offering to sell child sexual content, fewer than a third of the number found on Instagram. On Twitter, these accounts were also less likely to be recommended to other users, and they were removed more quickly.

Among platforms popular with young people, Snapchat is used mainly for direct messaging, so it is not conducive to building a pedophile network. The Internet Observatory also reports that TikTok does not appear to have seen a proliferation of underage sexual content.

David Thiel, chief technologist at the Internet Observatory and a former Meta employee, said: "Instagram's problem comes down to how content discovery works, how topics are recommended, and how much the platform relies on search and links between accounts. Something growing that rapidly needs guardrails in place, and Instagram hasn't done that."


in Mobile, Software, Web Service, Posted by log1h_ik