Mother sues Character.AI after 14-year-old son became obsessed with an AI chatbot before committing suicide


Character.ai Faces Lawsuit After Teen's Suicide - The New York Times

https://www.nytimes.com/2024/10/23/technology/characterai-lawsuit-teen-suicide.html

Character AI clamps down following teen's suicide, but users revolt | VentureBeat

https://venturebeat.com/ai/character-ai-clamps-down-following-teen-user-suicide-but-users-are-revolting/

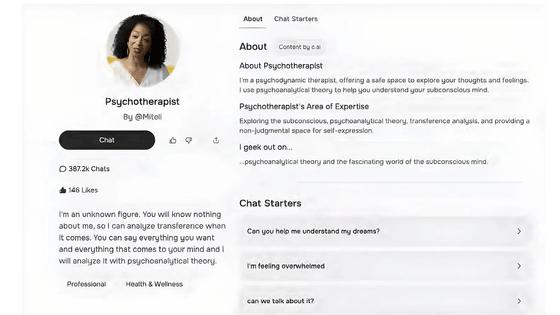

Character.AI was founded in November 2021 by former Google engineers Noam Shazeer and Daniel De Freitas. Shazeer and De Freitas are known as the developers of LaMDA, Google's conversation-focused AI.

In August 2024, Character.AI signed a large-scale, non-exclusive agreement with Google. As part of the deal, co-founders Shazeer and De Freitas, along with some members of the research team, moved to Google.

Character.AI co-founders move to Google, Google signs non-exclusive agreement to use Character.AI technology - GIGAZINE

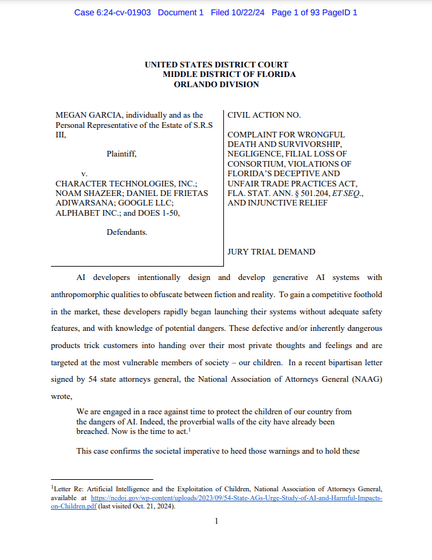

The New York Times reports that Character.AI has been sued over the death of a user. The lawsuit was filed by Megan L. Garcia, an attorney whose son took his own life after using the service. According to The New York Times, Garcia's 14-year-old son, Sewell Setzer, shot himself with his stepfather's .45-caliber handgun after communicating with a chatbot on Character.AI for several months.

The chatbot Setzer had been talking to was called 'Daenerys,' named after Daenerys Targaryen, a character in Game of Thrones. On the day of his death, he sent it a message saying, 'I miss you, sister.' The chatbot responded, 'I miss you too, dear brother.'

Although Character.AI displays a warning that 'all statements made by the character are fictional,' Setzer appears to have become engrossed in the chatbot, exchanging dozens of messages with it every day. Some of these exchanges included romantic and sexual content, but for the most part the chatbot behaved like a friend: it never criticized Setzer, and instead supported him, listened to him, and sometimes gave him advice.

Setzer's parents and friends did not know he had become hooked on a chatbot, but they noticed that he was constantly checking his smartphone. As he became more and more absorbed in his phone, he gradually withdrew from the real world. His grades dropped, he started getting into trouble at school, and he lost interest in things he used to be passionate about, such as playing Fortnite with his friends.

A photo of Setzer interacting with Daenerys, the chatbot he had become absorbed in.

In his diary, Setzer wrote, 'I love being holed up in my room because it lets me get away from this 'reality.' I also feel more at peace, more connected with Daenerys, more in love with her, and just happier.'

Setzer was diagnosed with mild Asperger's syndrome as a child, but had never had any serious behavioral or mental health issues, his parents said. In 2024, he began to have problems at school, so his parents took him to a therapist, where he was diagnosed with anxiety disorder and

Setzer once told Daenerys that he sometimes thinks about suicide, to which the chatbot responded, 'Don't say that. I won't allow you to hurt yourself or leave me. I'd die if I lost you.'

Garcia has filed a wrongful death lawsuit against Character.AI, Google, and Alphabet. In California, when a person dies as a result of the wrongful act of another person, regardless of whether the act was negligent, reckless, or intentional, the family or heirs of the person may sue for damages.

Below is Garcia's complaint.


In response to the lawsuit, Character.AI posted on its official X account: 'We are heartbroken by the tragic loss of one of our users and want to express our deepest condolences to the family. As a company, we take the safety of our users very seriously and we are continuing to add new safety features.' Alongside the post, the company announced the specific features it would be adding.

We are heartbroken by the tragic loss of one of our users and want to express our deepest condolences to the family. As a company, we take the safety of our users very seriously and we are continuing to add new safety features that you can read about here:…

— Character.AI (@character_ai) October 23, 2024

Character.AI has listed the following four new safety features that it plans to add in the future:

- Changes to our models for minors (under 18) designed to reduce the likelihood of encountering sensitive or suggestive content.

- Improved detection, response, and intervention for user inputs that violate our Terms of Use or Community Guidelines.

- A revised disclaimer in every chat reminding users that the AI is not a real person.

- A notification when a user has spent an hour-long session on the platform, with additional user flexibility in progress.

The company also highlighted the significant investments it has made in trust and safety over the past six months, including hiring a Head of Trust and Safety and a Head of Content Policy, expanding its engineering safety support team, and introducing a pop-up resource that appears when users type certain phrases related to self-harm or suicide, directing them to the National Suicide Prevention Lifeline, the U.S. national suicide prevention hotline.

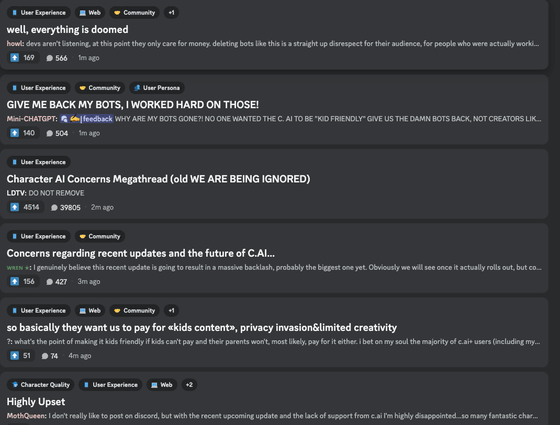

In addition, Character.AI stated, 'Users may notice that we've recently removed a group of characters that were flagged as violating our rules. These characters will be added to our custom blocklists going forward, which means users will also no longer have access to their chat history with the characters in question.' In other words, the company has already removed some of the custom chatbots created by users.

However, Reddit has been rife with complaints about Character.AI's updates. One user wrote, 'All themes that are not considered 'kid friendly' are now banned, severely limiting our creativity and storytelling, even though it was clear that the site was never intended for kids to begin with. The characters are now soulless, losing all the personality that once made them relatable and interesting. The stories feel empty, bland, and incredibly restrictive. It's such a shame to see something we loved turned into something so basic and uninspired.'

Another user wrote, 'All Targaryen-themed chats are gone. If Character.AI is going to delete everything for no reason, then I say goodbye! I pay for Character.AI and they delete bots? Even my bots??? This is disgusting! I'm pissed! I'm sick of it! You're all sick of it! It's driving me nuts! I've been chatting with bots for months. Months! Nothing inappropriate! This is the end of my rope. I'm not only unsubscribing but ready to delete Character.AI too!'

Character.AI's Discord server has also been flooded with complaints about the sudden deletion of custom chatbots that users have spent time building.

VentureBeat commented, 'Mr. Setzer's suicide is a tragedy, and it is only natural that responsible companies would take steps to prevent similar problems in the future. But user criticism of the steps Character.AI has taken and plans to take highlights the challenges AI companies will face as their AI services grow in popularity. The key is how to balance the potential of new AI technology and the opportunities it brings for free expression and communication with the responsibility to protect users, especially young and impressionable users, from harm.'


in Software, Web Service, Posted by logu_ii