Exposed DeepSeek database leaked more than 1 million log entries, including chat histories

An investigation by security firm Wiz found that a database belonging to AI company DeepSeek had been left exposed so that anyone could access it without authentication. DeepSeek said it fixed the issue after receiving the report.

Wiz Research Uncovers Exposed DeepSeek Database Leaking Sensitive Information, Including Chat History | Wiz Blog

https://www.wiz.io/blog/wiz-research-uncovers-exposed-deepseek-database-leak

DeepSeek announced its reasoning model 'R1' in January 2025, surprising the world with its high performance and low development costs.

Chinese AI company releases 'DeepSeek R1', an inference model equivalent to OpenAI o1, under the MIT license that allows commercial use and modification - GIGAZINE

As R1 became more widely used, security firm Wiz began examining DeepSeek's security and discovered that a DeepSeek database, built on the open source ClickHouse, was exposed to the internet. Anyone who knew where to look could access it and extract highly confidential information such as user chat histories and API secret keys.

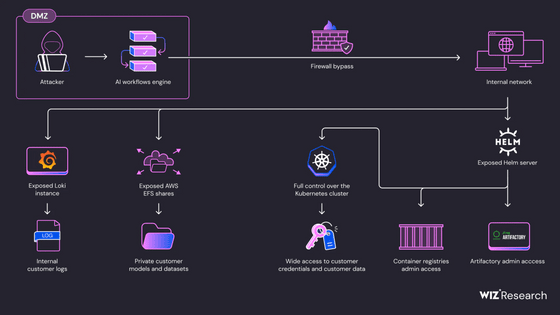

Wiz began by evaluating publicly accessible DeepSeek domains, identifying about 30 domains hosting API documentation and chat services. These did not pose any particular security concerns, but when Wiz expanded its search beyond the standard HTTP ports (80/443), it found two unusual open ports (8123/9000). Further investigation revealed that these ports led to a publicly accessible ClickHouse database that could be reached without authentication.
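For administrators who want to check their own servers for the same kind of exposure, the port check described above can be sketched roughly as follows. This is a minimal illustration, not Wiz's actual tooling: the function name `open_ports` is hypothetical, and the only details taken from the article are the two port numbers (8123 and 9000). It should only be run against hosts you are authorized to test.

```python
import socket

# Ports named in the article: 8123 and 9000, which ClickHouse uses by default
# for its HTTP interface and native protocol respectively.
CLICKHOUSE_PORTS = (8123, 9000)


def open_ports(host, ports=CLICKHOUSE_PORTS, timeout=2.0):
    """Return the subset of `ports` that accept a TCP connection on `host`.

    Hypothetical helper for auditing hosts you own or are authorized to test.
    """
    reachable = []
    for port in ports:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=timeout):
                reachable.append(port)
        except OSError:
            # Refused, timed out, or unreachable: treat the port as closed.
            pass
    return reachable
```

An open port alone does not prove that a database is exposed; it only indicates that a service is listening and deserves a closer look.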

Wiz used ClickHouse's HTTP interface, which allows arbitrary SQL queries to be run directly, to display a full list of the accessible tables. One table stood out, 'log_stream,' which contained highly sensitive data. On examining this table, Wiz found that it contained more than 1 million log entries, including DeepSeek API keys and chat history.
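To illustrate why an unauthenticated ClickHouse HTTP interface is so dangerous, the sketch below shows how a SQL statement can be passed to it as a `query` URL parameter. It is an assumption-laden example rather than a reproduction of Wiz's steps: the helper names and the host `db.example.internal` are hypothetical, and the port defaults to the 8123 mentioned in the article. Use it only to audit a server you operate.

```python
from urllib.parse import quote, urlencode
from urllib.request import urlopen


def clickhouse_query_url(host, sql, port=8123):
    """Build a URL that sends `sql` to ClickHouse's HTTP interface.

    The interface accepts the statement in the `query` parameter.
    """
    params = urlencode({"query": sql}, quote_via=quote)
    return f"http://{host}:{port}/?{params}"


def run_query(host, sql, port=8123, timeout=5.0):
    """Send `sql` with no credentials and return the response body as text.

    If this succeeds on your own server, the database is answering
    unauthenticated requests and should be locked down.
    """
    with urlopen(clickhouse_query_url(host, sql, port), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


# Hypothetical host name, used only to show the resulting URL.
print(clickhouse_query_url("db.example.internal", "SHOW TABLES"))
```

If `run_query` returns a table list from a server that should be private, requiring a password for every ClickHouse user and restricting the ports to internal networks are the usual first remedies.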

Wiz also found that an external party could have taken full control of the database without authentication and potentially escalated privileges within the DeepSeek environment.

'This case poses a significant risk to both DeepSeek and its users. Not only could attackers obtain sensitive logs and chat history, but they could also run specific queries to leak local files along with user passwords,' Wiz said.

This is not the first time Wiz has reported an AI-related security incident.

In 2023, Wiz reported that a repository of AI training models published by Microsoft's AI research division had exposed 38TB of sensitive information, including backups of employee workstations.

Microsoft's AI research division revealed that 38TB of internal confidential data was leaked via Microsoft Azure - GIGAZINE

In 2024, Wiz reported a vulnerability that could allow malicious AI models to be run on Hugging Face and used to break into the company's systems.

Security company warns that running untrusted AI models could lead to intrusions into systems through that AI - GIGAZINE

'No other technology in the world is being adopted at such a rapid pace as AI, and many AI companies are rapidly growing into critical infrastructure providers without implementing basic security,' said Wiz. 'When it comes to AI security issues, many people focus on futuristic threats, but the real danger often comes from basic risks like accidental exposure of databases. These fundamental security risks should remain a top priority for security teams.'

in Software, Web Service, Security, Posted by log1p_kr