Microsoft's AI research division found to have leaked 38TB of confidential internal data via Microsoft Azure

Cloud security company Wiz has announced that 38TB of confidential data was leaked after Microsoft's AI research division published open source AI models to a GitHub repository in July 2020. The sensitive data included passwords, private keys, and over 30,000 internal Microsoft Teams messages.

38TB of data accidentally exposed by Microsoft AI researchers | Wiz Blog

Microsoft mitigated exposure of internal information in a storage account due to overly-permissive SAS token | MSRC Blog | Microsoft Security Response Center

https://msrc.microsoft.com/blog/2023/09/microsoft-mitigated-exposure-of-internal-information-in-a-storage-account-due-to-overly-permissive-sas-token/

Microsoft leaks 38TB of private data via unsecured Azure storage

https://www.bleepingcomputer.com/news/microsoft/microsoft-leaks-38tb-of-private-data-via-unsecured-azure-storage/

While investigating accidental exposure of cloud-hosted data, Wiz's research team discovered a GitHub repository, run by Microsoft's AI research division, that published the source code of an open source image recognition AI model.

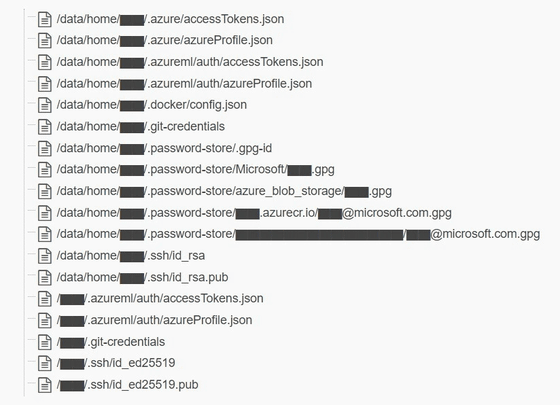

The files were shared using an Azure feature called Shared Access Signature (SAS) tokens. However, the token was configured to grant full control permissions instead of read-only access. This meant that an attacker who obtained it could not only view every file in the Azure storage account, but also delete or overwrite existing files.
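
How "read-only" versus "full control" shows up in practice: a SAS token is carried as query parameters on the storage URL, and its `sp` (signed permissions) parameter lists one letter per granted permission, such as `r` (read), `l` (list), `a` (add), `c` (create), `w` (write), and `d` (delete). The minimal sketch below, assuming Python 3.9+ and only the standard library, reads that parameter and reports anything beyond read and list. The helper names and example URL are hypothetical; this is not an Azure SDK API, and it only inspects the URL without validating the signature or contacting Azure.

```python
from urllib.parse import parse_qs, urlparse

# Permissions a download-only link actually needs: read and list.
READ_ONLY = {"r", "l"}


def sas_permissions(url: str) -> set[str]:
    """Return the permission letters in the SAS token's `sp` parameter."""
    query = parse_qs(urlparse(url).query)
    return set(query.get("sp", [""])[0])


def risky_permissions(url: str) -> list[str]:
    """Letters beyond read/list, e.g. a (add), c (create), w (write), d (delete)."""
    return sorted(sas_permissions(url) - READ_ONLY)


def is_read_only(url: str) -> bool:
    """True only if the token grants something, and nothing beyond read/list."""
    perms = sas_permissions(url)
    return bool(perms) and perms <= READ_ONLY


# Hypothetical URL: the account name and signature are placeholders.
shared = ("https://exampleaccount.blob.core.windows.net/models"
          "?sv=2021-06-08&sp=racwdl&se=2099-01-01T00:00:00Z&sig=REDACTED")
print(is_read_only(shared))       # False
print(risky_permissions(shared))  # ['a', 'c', 'd', 'w']
```

A check like this could run in CI or a pre-commit hook before a link is published; a token intended for public downloads would ideally carry only `r` (and `l` if listing is needed).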

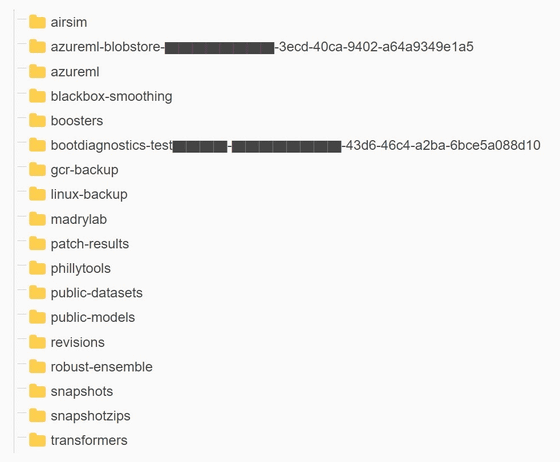

The repository turned out to contain a link to an Azure storage account. Besides the AI models, this storage account was found to expose 38TB of sensitive information, including backups of PCs belonging to Microsoft employees.

The backups in storage contained passwords and private keys to Microsoft services.

The exposed data also included over 30,000 internal Microsoft Teams messages from 359 Microsoft employees.

Wiz reported its findings to the Microsoft Security Response Center on June 22, 2023, and on June 24, 2023, the SAS token was revoked, blocking all external access to the Azure storage account. Microsoft states, "There is no record of customer data being exposed as a result of this incident, and no other internal services were put at risk."

The AI models were in ckpt format, generated by the TensorFlow library and serialized with Python's pickle module, a format that allows arbitrary code execution by design. Wiz points out that an attacker could therefore have injected malicious code into every AI model in the storage account, and that every user who trusted Microsoft's GitHub repository could have been affected by that code.
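
To see why pickle-based files are dangerous, the sketch below builds a pickle whose `__reduce__` method tells the loader to call `eval`; loading it with plain `pickle.loads` runs that call. It then applies the "restricting globals" approach described in the Python `pickle` documentation, overriding `Unpickler.find_class` to refuse every global. The `Payload` class and the `eval("6 * 7")` call are hypothetical, harmless stand-ins for an attacker's code, and this is a generic Python illustration rather than the loading code of TensorFlow or any other ML framework.

```python
import io
import pickle


class Payload:
    """An object whose unpickling runs code chosen by whoever created the file."""

    def __reduce__(self):
        # On load, pickle calls eval("6 * 7"); a real attacker could
        # reference os.system or any other importable callable instead.
        return (eval, ("6 * 7",))


blob = pickle.dumps(Payload())

# Plain pickle.loads executes the embedded call while loading.
result = pickle.loads(blob)
print(result)  # 42


class RestrictedUnpickler(pickle.Unpickler):
    """Refuse to resolve any global, so no callable can be smuggled in."""

    def find_class(self, module, name):
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Load pickled plain data (dicts, lists, numbers) but nothing that needs globals."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


try:
    restricted_loads(blob)
except pickle.UnpicklingError as exc:
    print("blocked:", exc)  # blocked: global 'builtins.eval' is forbidden
```

Refusing every global is the bluntest form of the technique; a loader that must rebuild real model objects would instead allow-list the specific classes it expects.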

Wiz says, "Due to a lack of monitoring and governance, SAS tokens pose a security risk, and their use should be limited as much as possible. These tokens are very hard to track, because Microsoft does not provide a centralized way to manage them within the Azure portal. In addition, they can be configured to last effectively forever, with no upper limit on their expiry time. Therefore, using Account SAS tokens for external sharing is unsafe and should be avoided."
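
The two concerns in that quote, account-wide scope and long-lived expiry, are both visible in a SAS URL. The minimal sketch below flags an Account SAS (identified by the `ss` signed-services and `srt` signed-resource-types parameters, which only account-level tokens carry) and an `se` expiry further out than a chosen policy window. `audit_sas`, `MAX_LIFETIME`, and the seven-day limit are hypothetical choices for illustration, not an Azure feature, and the example URL is a placeholder.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlparse

# Hypothetical policy: shared links should expire within a week.
MAX_LIFETIME = timedelta(days=7)


def audit_sas(url: str, now: datetime) -> list[str]:
    """Return human-readable findings for a SAS URL (empty list = no findings).

    `now` must be timezone-aware; SAS times are expressed in UTC.
    """
    query = {k: v[0] for k, v in parse_qs(urlparse(url).query).items()}
    findings = []

    # `ss` and `srt` only appear in Account SAS tokens, which are
    # scoped to the whole storage account rather than one container or blob.
    if "ss" in query or "srt" in query:
        findings.append("account SAS: grants access across the storage account")

    expiry_text = query.get("se")
    if expiry_text is None:
        # The expiry may instead come from a stored access policy (`si`).
        findings.append("no expiry (se) in the URL")
    else:
        # Older Pythons' fromisoformat() rejects a trailing "Z", so normalize it.
        expiry = datetime.fromisoformat(expiry_text.replace("Z", "+00:00"))
        if expiry.tzinfo is None:  # date-only values such as "2023-09-03"
            expiry = expiry.replace(tzinfo=timezone.utc)
        if expiry - now > MAX_LIFETIME:
            findings.append(
                f"expires {expiry.date()}, beyond the {MAX_LIFETIME.days}-day policy")
    return findings


now = datetime(2023, 9, 1, tzinfo=timezone.utc)
url = ("https://exampleaccount.blob.core.windows.net/"
       "?sv=2021-06-08&ss=b&srt=sco&sp=rl&se=2099-01-01T00:00:00Z&sig=REDACTED")
for finding in audit_sas(url, now):
    print(finding)
```

Passing `now` explicitly keeps the check deterministic and easy to test; in a real scanner it would simply be `datetime.now(timezone.utc)`.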

Ami Luttwak, co-founder and CTO of Wiz, told the security news site Bleeping Computer: "AI offers huge potential for technology companies. However, as new AI solutions race into production, the massive amounts of data they handle require additional security checks and safeguards. Because many development teams need to manipulate large amounts of data and share it with their colleagues, incidents like this are increasingly difficult to monitor and prevent."


in Web Service, Security, Posted by log1i_yk